A few weeks before the first presidential debate, Dan Schultz and his colleagues on The Boston Globe’s interactive team were trying to figure out what they could build that would make the newspaper’s coverage stand out.

“Many of the ideas involved being able to know what the candidates were actually saying,” said Schultz, a current Knight-Mozilla Fellow.

Even ideas that weren’t focused on the candidates, like analyzing how viewers responded, would have benefited from knowing what was being said in real time. “It is one thing to have a graph of how you and your friends felt over time,” Schultz said, “but it is entirely different to have a data set of the phrases and words that triggered those feelings.”

But figuring out what is being said on your TV right now (not yesterday, or an hour ago) is hard. Or at least, it’s hard for a computer. There are archives of C-SPAN out there, like Metavid, but there wasn’t a simple way to get text out of video in real time.

Schultz — who you may remember from another of his projects, Truth Goggles — has actually been hacking on this problem for a while. Before coming to the Globe, he built a prototype of a personalized TV news service called ATTN-SPAN, which culls important (to you) speeches and transcripts from C-SPAN. “I really needed instant, real-time information,” Schultz said, “so I decided that it would be easy enough to just build it myself.”

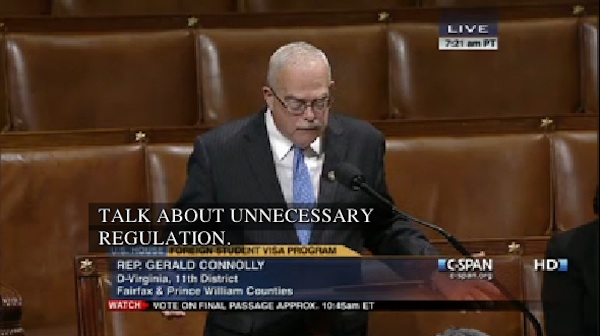

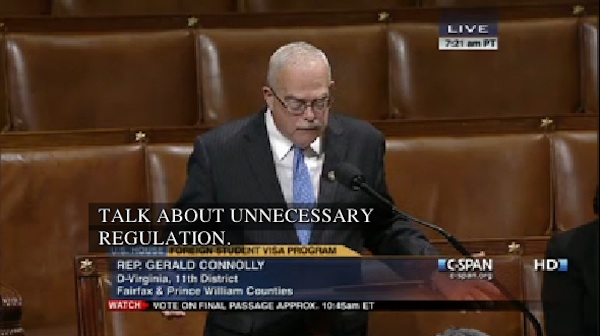

The result: Opened Captions. It provides a real-time API for closed captions pulled from C-SPAN. The system makes it possible to code against what’s being said on TV right now, and by solving this one really tricky problem, it makes a broad range of applications possible.

Getting a live transcript requires three things: a video feed, a decoder that extracts the closed captions, and a server to distribute the text.

Opened Captions runs on a Mac mini connected to a DirecTV feed in Globe Lab, the newspaper’s skunkworks.

“When the Lab started, we were like, ‘We need a video feed in here,’ and we didn’t really know why,” Chris Marstall, who runs the Lab, told me. “But this really fits that perfectly.”

Between the video feed and the Mac mini is TextGrabber, a $300 converter (literally a black box) that extracts closed captions and feeds text over a serial port. The Mac mini runs an instance of the Opened Captions server, a Node.js application that pushes captions to a web server at MIT. If you connect to the Opened Captions website, you’re hitting that server at MIT, which acts as a proxy.
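The relay step in that chain can be sketched roughly as follows. This is a minimal illustration in Python, not the actual Opened Captions server (which is a Node.js application). The `CaptionRelay` class, its method names, and the chunk format are all hypothetical, and the serial port is simulated with plain strings.

```python
import re
from typing import Callable, List


class CaptionRelay:
    """Hypothetical relay: turns raw caption chunks into whole words
    and pushes each word to every subscriber."""

    def __init__(self) -> None:
        self._buffer = ""  # text received so far that may end mid-word
        self._subscribers: List[Callable[[str], None]] = []

    def subscribe(self, callback: Callable[[str], None]) -> None:
        """Register a function to call with each completed word."""
        self._subscribers.append(callback)

    def feed(self, chunk: str) -> None:
        """Accept a raw chunk, as a decoder might emit over a serial port.

        Chunks can end in the middle of a word, so the trailing piece is
        held back until whitespace arrives in a later chunk.
        """
        self._buffer += chunk
        parts = re.split(r"\s+", self._buffer)
        self._buffer = parts.pop()  # possibly partial last word
        for word in parts:
            if word:
                for callback in self._subscribers:
                    callback(word)


if __name__ == "__main__":
    relay = CaptionRelay()
    relay.subscribe(print)           # stand-in for a web client
    relay.feed("THE PRES")           # chunk boundary falls mid-word
    relay.feed("IDENT SAID TODAY ")  # completes the held-back word
```

Holding back the last fragment is the main design choice here: it keeps subscribers from ever seeing half a word, at the cost of a short delay on the final word of each chunk.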

The beauty of this system is that, in theory, the captions feed could come from anywhere. Schultz’s grand vision for Opened Captions involves lots of inputs transcribing lots of channels. CNN, for example, could start feeding its own broadcast into Opened Captions (or it could run its own captions server).

“Once you have this parseable stream related to video, you can start to build these contextual apps on top of that video stream,” Marstall said. (To see one idea, see the bottom of this article.)

The first thing Schultz built with Opened Captions? A drinking game where the candidates get drunk with you. During the second presidential debate, Schultz debuted both Opened Captions itself and its inebriated cousin: DrunkSAPN (the joke being that the app is too drunk to spell its own name right), a live transcript that counts drinks using keywords and adds drunkenness in the form of hiccups, misspelled words, and vulgarity. Leave the app running for a while, and you end up with something like this (contrast with the Congressional Record):

When i seeerved inhic the conhic*gress ni my first otur fo serviccc,e in the 1980’s, actually from 1979 to 1989, january of 1979 to jjjanuayr of 1989, i for several yearsss was a member of teh house intelligence committee. At thta*hic time the phrase homeland security or hte word homeland was never uttereeed. If you had uttered ti then it would be

haev a foreing sense to it. Protect theee homeland, wasn’t that what hitlre was talking about?
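The two mechanics described above, counting drinks from keywords and garbling the text as the count rises, might look roughly like this. It is a sketch under stated assumptions rather than Schultz's code: the keyword list, the function names, and the letter-swapping rule are all invented for illustration.

```python
import random
import re

# Hypothetical trigger phrases; the app's real keyword list is not given.
DRINK_WORDS = {"jobs", "economy", "middle class"}


def count_drinks(text: str, keywords=DRINK_WORDS) -> int:
    """Count whole-word occurrences of any trigger phrase in the text."""
    lowered = text.lower()
    return sum(
        len(re.findall(r"\b" + re.escape(keyword) + r"\b", lowered))
        for keyword in keywords
    )


def slur(text: str, drinks: int, rng: random.Random) -> str:
    """Swap two adjacent letters in some words.

    The chance of garbling a word grows with the drink count
    (an assumed rule: 5 percent per drink, capped at 90 percent).
    """
    chance = min(0.05 * drinks, 0.9)
    words = []
    for word in text.split():
        if len(word) > 3 and rng.random() < chance:
            i = rng.randrange(len(word) - 1)
            word = word[:i] + word[i + 1] + word[i] + word[i + 2:]
        words.append(word)
    return " ".join(words)


if __name__ == "__main__":
    line = "Jobs, jobs, and the economy. The middle class needs jobs."
    drinks = count_drinks(line)
    print(drinks, slur(line, drinks, random.Random()))
```

Passing the random generator in as an argument makes the garbling repeatable when a seed is supplied, which is convenient for testing.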

The next app was newsier. At a hackathon before the last debate, Alvin Chang, Jin Dai, and Matt Carroll built CardText, which added context and stories from the Globe to a live-updating debate transcript.

“A lot of times, it’s going so fast it’s difficult to see exactly what they’re saying and put it into context,” Carroll told me during the hackathon. “It’s hard to parse that stuff out when they’re throwing out 20 facts in 20 seconds.”

With the election over, Schultz and the Globe are figuring out what to do with Opened Captions. (Also, Schultz’s Knight-Mozilla Fellowship ends in April.) The current setup is cheap to maintain, though Schultz calls it “fragile.” He’s the only one maintaining it, and he’s had to drive up to Boston from his home in Providence on a few weekends to restart the server, since the Globe doesn’t officially support Node.js. Intellectual property could also be an issue. C-SPAN allows non-commercial use of its video streams, but closed captions are something of a gray area (and the Globe obviously isn’t non-commercial).

The Globe could continue to be the official home and provider of Opened Captions, or Schultz could take it with him when he leaves and try to find funding to operate it independently. “If I were to apply for a grant right now, I would ask for $10,000,” Schultz said. That would be enough to add servers to increase stability, support additional content streams, and purchase more TextGrabbers. “And that would leave plenty of wiggle room for adding scalability as needed,” Schultz added.

For now, the Mac mini is safe in Globe Lab. “It wouldn’t be too hard to keep it running in its current state. It’s kind of a perfect project on a lot of levels for the Lab,” Marstall said. “We do a lot of projects that are experimental or are an investigation of a new platform. We don’t know what the product is going to be but we want to stand up a hack on a new platform.”

Here’s an example of what Opened Captions can do: A quick visualization of the most frequently spoken words on C-SPAN since you started reading this story.
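The counting behind a visualization like that could be as simple as the sketch below. It assumes caption text arrives as ordinary strings. The `WordTally` class and the stop-word list are hypothetical and are not taken from Opened Captions.

```python
import re
from collections import Counter

# A small, assumed stop-word list so common filler does not dominate.
STOP_WORDS = {"the", "a", "an", "and", "of", "to", "in", "is", "it", "that"}


class WordTally:
    """Running count of words seen in a caption stream."""

    def __init__(self) -> None:
        self.counts: Counter = Counter()

    def add(self, caption_text: str) -> None:
        """Fold one piece of caption text into the running totals."""
        for word in re.findall(r"[a-z']+", caption_text.lower()):
            if word not in STOP_WORDS:
                self.counts[word] += 1

    def top(self, n: int = 10):
        """Return the n most frequent words as (word, count) pairs."""
        return self.counts.most_common(n)


if __name__ == "__main__":
    tally = WordTally()
    tally.add("The Senate will come to order.")
    tally.add("The Senate is in session; the senate adjourns.")
    print(tally.top(3))
```

Starting a fresh `WordTally` when a reader opens the page would give the "since you started reading" behavior. A front end would then redraw from `top()` as new captions arrive.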