Did you know that wearing a helmet when riding a bike may be bad for you? Or that it’s possible to infer the rise of kidnappings in Nigeria from news reports? Or that we can predict the year when a majority of Americans will reject the death penalty (hint: 2044)? Or that it’s possible to see that healthcare prices in the U.S. are “insane” in 15 simple charts? Or that the 2015 El Niño event may increase the percentage of Americans who accept climate change as a reality?

If you answered “yes” to at least 60 percent of the questions above, I’m 95 percent certain that you’ve been following the recent buzz around “data” and “explanatory” journalism. I’m talking about websites like Nate Silver’s FiveThirtyEight and Ezra Klein’s Vox.com. A few traditional news organizations have followed suit, either creating their own in-house operations (The New York Times’ The Upshot) or strengthening existing efforts.

There is a lot to praise in what all those ventures, and others that will appear in the future, are trying to achieve. Journalists are known for being allergic to math and to the scientific method; some even proudly boast about it. Many in our profession still stick to flawed practices, such as asking the same questions of two or more sources and then just reporting their answers, without weighing the evidence and pointing out which opinion is better grounded.

At first, the current popularity of the new wave of data journalism seemed to be a good antidote to the epidemic of hardball punditry and tomfriedmanism that has plagued the news for ages. When Silver published FiveThirtyEight’s foundational manifesto, “What the Fox Knows,” I applauded him with enthusiasm. After all, bad data is pervasive in traditional newsrooms. If you think I’m exaggerating, read this recent and infuriating Washington Post op-ed, which gets causality wrong, is oblivious of ecological fallacies, misinterprets sources, and ends with a coarse, insulting, and condescending line. Don’t blame just the authors. Blame the editors at the Post, too.

But I have to confess my disappointment with the new wave of data journalism, at least for now. All the questions in the first paragraph are malarkey. Those stories may not be representative of everything that FiveThirtyEight, Vox, or The Upshot are publishing (I haven’t gathered a proper sample), but they suggest that, when you pay close attention to what they do, it’s possible to notice worrying cracks that may undermine their own core principles.

So what’s wrong with the stories in the first paragraph?

First, the piece on bike helmets is an example of cherry-picking and carelessly connecting studies to support an idea. It’s possible to “prove” almost anything if you act like this. I can “prove” that vaccines cause autism (they don’t) just by selecting certain papers, particularly those based on tiny samples or simple correlations, while ignoring the crushing majority that refutes my intuitions. Gladwellism, deriving grand theories from a handful of undersubstantiated studies, may be popular nowadays, but it’s still dubious journalism.
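To see why tiny samples make cherry-picking so easy, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the 200 simulated "studies," the 10 subjects per group, and the seed are invented, and by construction there is no real effect at all. It is not a reanalysis of any study mentioned in the bike helmet piece.

```python
import random
import statistics


def simulate_study(rng, n_per_group=10):
    """Difference in group means when both groups come from the SAME distribution.

    Any nonzero result is pure sampling noise, because the true effect is zero.
    """
    treated = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
    control = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
    return statistics.mean(treated) - statistics.mean(control)


rng = random.Random(2014)  # fixed seed (arbitrary) so the run is repeatable
effects = [simulate_study(rng) for _ in range(200)]  # 200 hypothetical small studies

# What the whole body of evidence says versus what a cherry-picker would quote.
average_effect = statistics.mean(effects)
cherry_picked = sorted(effects, reverse=True)[:5]

print(f"Average effect across all 200 studies: {average_effect:+.2f}")
print("Five most 'impressive' studies:", [f"{e:+.2f}" for e in cherry_picked])
```

Taken together, the simulated studies average out close to zero, yet the five largest results look like solid evidence of an effect that does not exist. Quoting only those five is what cherry-picking amounts to.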

Second, using news reports on kidnappings in Nigeria as a proxy variable of actual kidnappings is risky. Proxy variables need to be handled with care. You cannot assert that there are more kidnappings just because the media is running more stories about them. It might be that you’re seeing more stories simply because news publications are increasingly interested in this beat. You won’t know until you do some proper analysis.
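One basic check, sketched below in Python, is to compare the number of stories about kidnappings with the total number of stories in the same archive. The counts are hypothetical, invented only to show the arithmetic; they are not real figures about Nigeria or about any news database, and the function names are mine.

```python
# Hypothetical yearly counts, invented purely for illustration.
# Each tuple: (year, stories mentioning kidnappings, all stories in the archive).
HYPOTHETICAL_COUNTS = [
    (2010, 100, 10_000),
    (2011, 200, 20_000),
    (2012, 400, 40_000),
    (2013, 800, 80_000),
]


def share_per_thousand(counts):
    """Return {year: kidnapping stories per 1,000 stories overall}."""
    return {year: 1000 * topic / total for year, topic, total in counts}


def growth(first, last):
    """Ratio of the last value to the first one."""
    return last / first


raw = {year: topic for year, topic, _ in HYPOTHETICAL_COUNTS}
shares = share_per_thousand(HYPOTHETICAL_COUNTS)

raw_growth = growth(raw[2010], raw[2013])
share_growth = growth(shares[2010], shares[2013])

print(f"Raw story count grew {raw_growth:.0f}x")
print(f"Share of all stories grew {share_growth:.0f}x")
```

In this made-up archive the raw count grows eightfold while the share of coverage stays flat, because the archive itself grew eightfold. Normalizing like this doesn't make the proxy trustworthy on its own, since editors' interest in the beat can still shift, but it rules out one obvious artifact before anything is asserted about actual kidnappings.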

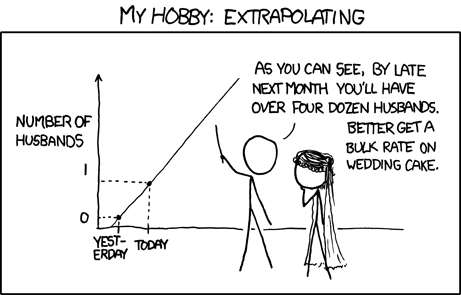

Third, long-term linear predictions of nonlinear phenomena are nearly always wrong. Writing that a majority of Americans will reject the death penalty by 2044 “if the trend continues” yields a catchy, SEO-friendly headline, but it means very little. The article says that there’s no “reason to expect the trend to pick up speed anytime soon,” but the same could have been said about support for gay rights a decade ago, and see where we are today. Unknown unknowns, confounding variables, and black swans can kill any simplistic prediction. As xkcd explained, you cannot predict that a woman will have four dozen husbands next month just because she was unmarried yesterday (zero husbands) and married today (one husband). That’s not just bad math. It’s a lack of common sense.
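The point about straight lines can be made concrete with a small Python sketch. It assumes, purely for illustration, that some opinion share follows an S-shaped (logistic) curve capped at 100 percent; the curve, its parameters, and the time units are hypothetical and are not a model of death penalty polling. A line is fitted to a short window of that curve and then extended far beyond it.

```python
import math


def logistic(t, ceiling=100.0, midpoint=30.0, rate=0.2):
    """Hypothetical S-shaped curve: a percentage that saturates at `ceiling`."""
    return ceiling / (1.0 + math.exp(-rate * (t - midpoint)))


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Observe only a short stretch of the curve (t = 20..30), where it looks almost straight.
observed_t = list(range(20, 31))
observed_y = [logistic(t) for t in observed_t]
slope, intercept = fit_line(observed_t, observed_y)

# Extend the line 30 time units past the last observation.
horizon = 60
linear_forecast = slope * horizon + intercept
actual = logistic(horizon)

print(f"Linear forecast at t={horizon}: {linear_forecast:.1f} percent")
print(f"Value on the underlying curve: {actual:.1f} percent")
```

Within the observed window the line fits well. Thirty steps later it predicts a share well above 100 percent, which is impossible, while the underlying curve has simply leveled off just below its ceiling. The fit was fine; the assumption that "the trend continues" was not.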

Fourth, will the 2015 El Niño event make more Americans accept that climate change is real? No idea. It could be. Or not. It’s impossible to know, as that piece by The Upshot reads like wishful thinking. It does say that “belief in warming jumps when global temperatures hit record highs; it drops in cooler years,” but the evidence to support that claim is not fully revealed, so we don’t know if those “jumps” are relevant, significant, or just pure noise. Why should I trust the writer? I’m a journalist myself. I don’t trust journalists.

Fifth, can we really assert that health care prices in the U.S. are “insane” based on 15 simple charts? I won’t bore you this time, as there are a lot of fishy details in those graphics. If you’re interested in the nitty-gritty, read this blog post. I bet you’ll be as shocked as I was.

Is data journalism in crisis (already)?

The main challenge that FiveThirtyEight and Vox (and, to a lesser extent, The Upshot) face is that they overpromised before they were launched but underdelivered after they went public. They promised journalism based on a rigorous pondering of facts and data, but they have offered some stories based on flimsy evidence, with a consequent pushback from readers. As I’m one of those readers, I will take the liberty of offering some suggestions:

It’s hard to produce a constant stream of good data journalism on the cheap and with a small team. If history is any guide, we should remember that this is not how data journalism was done in the past.

Journalism that takes advantage of quantitative methods and visualization is not new. As the Columbia Journalism Review wrote a while ago, “[Nate] Silver’s work is arguably less revolution than evolution, one facet of a journalistic practice that has actually been around for decades.”

True. In 1973, Philip Meyer, now a retired professor at the University of North Carolina, coined the term “precision journalism” in a book with that same title. Meyer is the most popular (but not the only) advocate for a methodical application of social science to the practice of journalism, and one of the founding parents of computer-assisted reporting.

To give you an example of the power of this kind of journalism: In 1993, The Miami Herald won a Pulitzer for an investigation into why Hurricane Andrew caused such sweeping destruction in certain neighborhoods of Miami and Homestead, while leaving others almost intact. It was related to lax zoning inspection and building quality standards. The investigation was based on databases and mapping, but also on careful on-the-ground reporting.

Data-savvy investigative reporters and visual designers at established news organizations haven’t historically worked in complete isolation. They can rely on relatively large infrastructures to provide funding, support, and legal aid, when needed. It remains to be seen if a publication that conducts just this type of journalism can survive on its own. This leads to my next point.

Both Vox and FiveThirtyEight are publishing new stories, blog posts, and explanatory pieces nonstop. Like any news organization nowadays, they live in a 24/7 world. They need to feed the goat, as their business models seem to be based on attracting adequately large audiences.

There’s a big risk in that. It’s much easier to feed the goat with stories with titles like “The New York Times Editorial Shakeup As Explained By ‘Game Of Thrones’” than with thorough analyses of population trends in Ukraine. (I’ll admit I found both quite enticing, though.) It is tempting for a news startup to try to be both BuzzFeed and The Economist at the same time, no matter how chimerical that goal is. Lighthearted blahblah can be done quickly and nonchalantly. Proper analytical journalism can’t. If you have a small organization, you may have to choose between producing a lot of bad stuff and publishing just a small number of excellent stories.

Here’s the description for a perfect storm: Data journalism is often based on publicly available databases. Besides, current standards of journalism transparency dictate that, after a journalist writes or visualizes stories based on data, she should disclose her sources and make her spreadsheets downloadable for anyone to check. Finally, due to the very nature of those stories, a good portion of the audience will likely be quite numerate. This helps explain some recent takedowns on social media. Plenty of readers out there know much more than we do about our own data, and nowadays they have the means to broadcast their outrage. They don’t hesitate to do it.

Silver likes to quote Archilochus’ phrase about foxes and hedgehogs: “The fox knows many things, but the hedgehog knows one big thing.” Hedgehog intellectuals see the world through the lens of expertise in a single area, or of a single grand idea; fox thinkers borrow tools from many fields. Journalists tend to be foxes: We have a basic understanding of different disciplines, but we don’t necessarily specialize in any of them.

This is good on one hand, as it allows for richer and more lively reporting. But it also poses problems for data journalists, as you can’t really extract meaning from data using only cookie-cutter templates. No matter how great you are at analyzing stuff with the R statistics language, you’ll be in trouble if you don’t have a deep understanding of where the data came from, of how they were gathered, filtered, and processed, of their strengths and shortcomings.

That’s the reason why, in many universities, science departments teach their own statistics courses: It’s not the same to use stats for sociological observations as for genetics, psychology, astronomy, or physics. The equations may be similar, but the outcomes of your analyses don’t depend only on those equations. Context matters.

Foxes need to partner up with hedgehogs, journalists with specialists. And it’s not just a matter of asking a couple of researchers some questions while you write a blog post, something that, by the way, neither Vox nor FiveThirtyEight does often enough (hence the lack of quoted experts in many of their stories). It’s also a matter of doing your reporting in collaboration with those researchers, as they’re the ones who know the data really well. This isn’t a particularly groundbreaking notion: The publications that regularly conduct solid data and investigative journalism nowadays, like ProPublica, work this way as a matter of course. Their journalists are very conscious of the limits of their knowledge.

(Side note: It is dubious that Nate Silver himself is a pure fox. His biggest successes when he was still at The New York Times depended on his familiarity with sports and election data. When it comes to those topics, he’s a werehedgehog, so to speak.)

Most novel technological concepts or tools follow the Gartner hype cycle: First, the novelty is released and enjoys a peak of inflated expectations. Then it suffers through a trough of disillusionment. After that, when the hype vanishes, it reaches maturity and is widely adopted.

Even if data journalism is by no means a new phenomenon, it has entered the mainstream quite recently, breezed over the peak of inflated expectations, and precipitously sunk into a valley of gloom. Hopefully, it’ll soon enter the last phase, one of stability and productivity. There’s a need for journalism that is more rigorous and scientific. Data skills shouldn’t be the turf of a small guild of savants; they should permeate journalism in general. Data and explanatory news organizations can help achieve those goals.

Therefore, pundits and math-challenged journalists (like the folks at The New Republic, who seem to know as much about stats as I do about quantum mechanics, but feel entitled to write about it anyway) shouldn’t feel triumphant after reading this column. First, because Silver’s manifesto is still a great read. The new data and explanatory journalism organizations just need to live up to their own standards to thrive, something that I honestly hope will happen soon.

Second, because it’s a good thing to be reminded of Fred Mosteller’s famous assertion: “It is easy to lie with statistics, but easier to lie without them.” Or Richard Feynman’s: “The first principle is that you must not fool yourself, and you are the easiest person to fool.” Indeed. Particularly when you don’t know how to weigh qualitative and quantitative evidence.