The growing stream of reporting on and data about fake news, misinformation, partisan content, and news literacy is hard to keep up with. This weekly roundup offers the highlights of what you might have missed.

“Instagram is a major distributor and re-distributor of IRA propaganda that’s at the very least on par with Twitter.” Jonathan Albright, the research director at the Tow Center for Digital Journalism, previously showed, alarmingly, how Russia-controlled Facebook accounts spread election-related propaganda in the U.S. that received hundreds of millions of shares. (Facebook then scrubbed the data that Albright had looked at.) Now Albright is back with new research showing the role that Instagram has played in spreading political propaganda over the last two years, via Russia-controlled accounts (now closed, though the memes live on) with handles like @blacktivistt_ and @blackmattersus. He writes in a Medium post:

I argue here that Instagram is more pervasive than Twitter for political meme-spreading as well as viral outrage video-based behavioral re-targeting. Part of the reason for this is because it uses the same range of Facebook’s universe of sophisticated ad targeting infrastructure — including Lookalike and Custom Audiences. The Instagram platform can even link even video views directly to direct response and campaign objectives.

Instagram is also a major re-distributor of [Internet Research Agency] memes: Two unofficial third-party “re-sharing” apps on Instagram have circulated and pushed IRA content far beyond the realm of Instagram and Facebook, and embedded it all over the internet. This includes cross-posting of memes and posts from removed accounts from Instagram back into Facebook, Instagram, and also into Twitter. These apps also helped the memes get over to Pinterest.

All of the accounts I’ve studied here have been removed, so the fact that much of their content is still lingering is a critical concern, since as far I can tell, this creates a “zombie account” situation. Since this content, mentions, and links actually didn’t disappear when the original profiles were taken down, the true reach of the IRA content has yet to be uncovered. It’s likely that much of it has been missed in the audience reach and impact estimates.

Even though the accounts have been removed, unofficial third-party “reposting” apps like Regrann and Repost “have further spread the easily quantifiable reach of the IRA Instagram posts.” (Albright shows how to search for these memes on Google, Facebook, and Twitter.) “The fact is, third-party Instagram apps mean that propaganda is still everywhere and that tells me that’s it clearly not been detected,” he writes. “There’s an entire galaxy of content that has been left out of the equation.”

Google will provide more info about publishers (sort of?). Google introduced new publisher “Knowledge Panels” that will “show the topics the publisher commonly covers, major awards the publisher has won, and claims the publisher has made that have been reviewed by third parties. These additions provide key pieces of information to help you understand the tone, expertise and history of the publisher.”

More on those “reviewed claims”: “This shows up when a significant amount of a publisher’s recent content has been reviewed by an authoritative fact-checker”; it’s based on the Fact Check markup Google introduced last year.

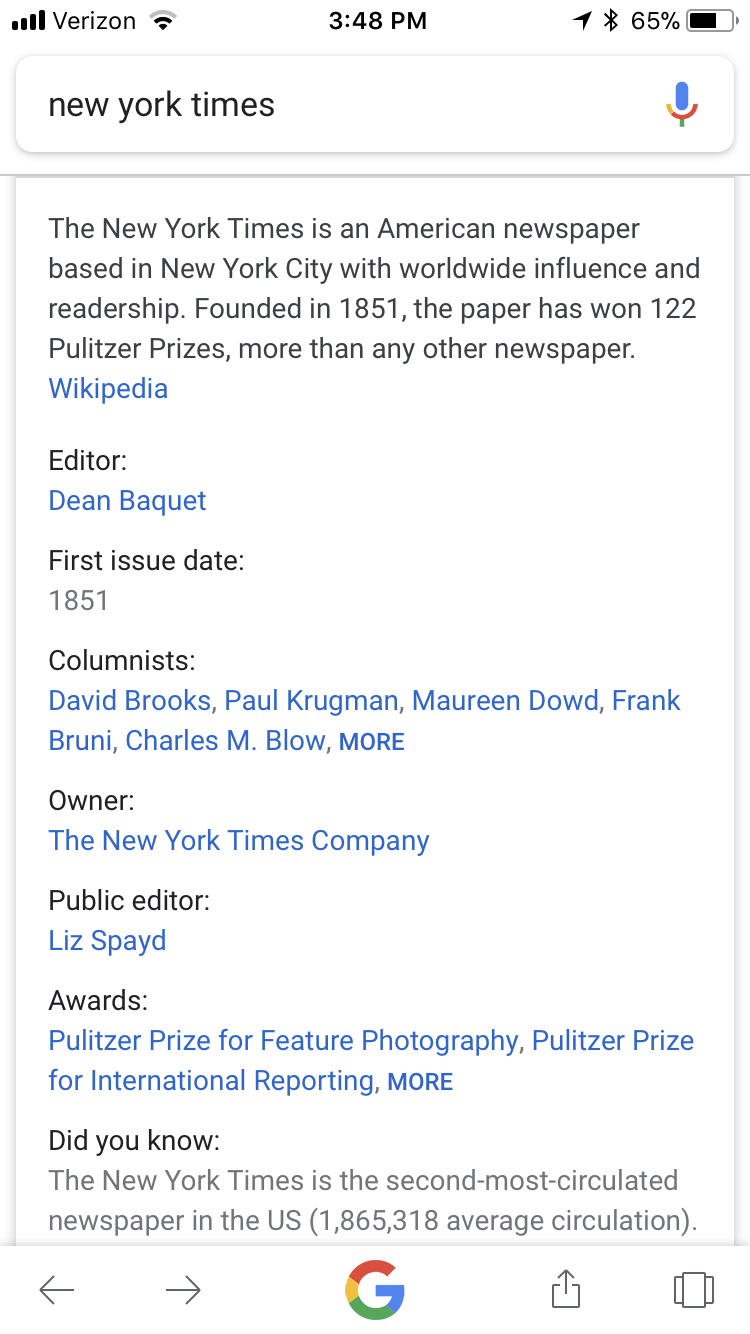

I had difficulty actually finding the knowledge panels. On desktop, they appear in a right-hand column. On mobile, you’ll find them after scrolling past a number of other results. Here’s The New York Times, for instance. (The Times no longer has a public editor, btw.)

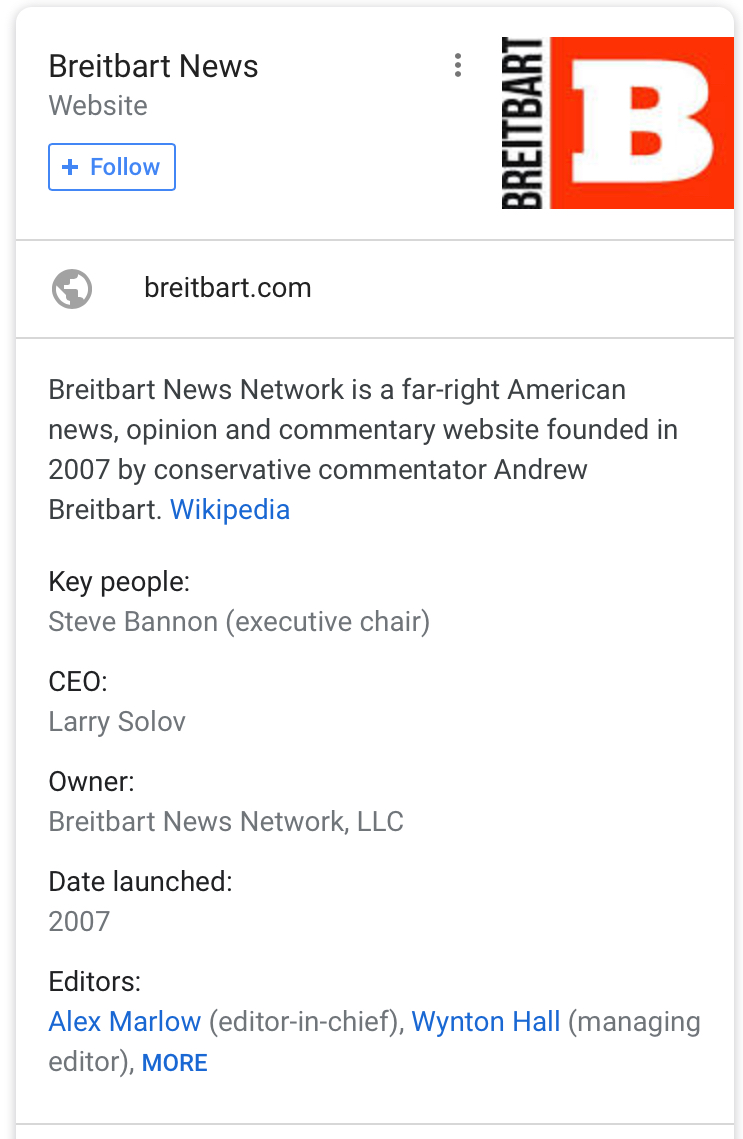

Here’s Breitbart.

Infowars doesn’t have a publisher “knowledge panel” at all. You just see other Infowars results if you search for it. We learned Wednesday that Infowars has been republishing articles from Russian state-sponsored broadcaster RT on its site for three years without permission. 1,014 RT articles! And not just RT, actually:

This story has been updated to include other outlets. https://t.co/4ljuCz3MtH pic.twitter.com/F57mragGNT

— Jane Lytvynenko (@JaneLytv) November 8, 2017

Might as well search your byline and see.

Meanwhile, Google’s Twitter module promoted misinformation in the wake of the Texas church shooting on Sunday, something its YouTube and “top stories” modules have also done during previous breaking news events.

Google's 'Popular On Twitter' news feature is a misinformation gutter. Search for Devin Patrick Kelley just now surfaced these four items. pic.twitter.com/06rcPOgx5b

— Justin Hendrix (@justinhendrix) November 6, 2017

“We weren’t happy that those tweets that people were pointing out to us were showing up that way,” Google’s Danny Sullivan (formerly of Search Engine Land) told Gizmodo’s Tom McKay. “We’re like, okay, we may need to make some changes here.”

“Misinformation is most dangerous when it causes people to change their behavior.” Vox’s Sean Illing interviews Emily Thorson, an editor (with Brian Southwell and Laura Sheble) of the forthcoming Misinformation and Mass Audiences (University of Texas Press, January 2018), about her findings. Thorson is an assistant professor of political science at Syracuse University. I liked this part of the interview, in which Thorson says:

Misinformation is most dangerous when it causes people to change their behavior. This is why medical misinformation is so concerning — it has direct effects on behavior. With political misinformation, the connection is much weaker, and that’s because preexisting political beliefs are so strong that almost no piece of information — true or false — will change how we vote. Our partisanship even shapes whether we are exposed to that misinformation in the first place.

So I would argue that political misinformation is most dangerous not in high-profile partisan races, but when the misinformation is about policies and issues where people don’t have strong preexisting opinions. To give a real-world example, if misinformation about voter ID laws causes people to stay home from the polls, that is a serious problem. That’s the kind of thing we should be worried about.

“Digital propaganda campaigns have spread from high-profile national elections to local races.” It’s not just the presidential election: GeekWire’s Monica Nickelsburg writes about how “bots and other suspicious social media accounts were used in an apparent attempt to influence voters in a heavily funded Washington state Senate race…advancing attacks against Manka Dhingra, a Democrat running against Republican Jinyoung Englund in Washington’s 45th District.”

Dhingra appears to have won by double digits (though the official vote count could take days), meaning the “Great Blue Wall” is back in place on the West Coast.