an initiative three years in the making (but feeling oh-so-relevant right about now) that brings together news outlets such as The Washington Post, The Economist, and the Globe and Mail, as well as Facebook, Google, Twitter, and Bing, in a commitment to “provide clarity on the [news organizations’] ethics and other standards, the journalists’ backgrounds, and how they do their work.” The project will standardize this method of increased clarity so that news organizations, large and small, around the world can use it, and so that the algorithms of the tech giants can find and incorporate it.

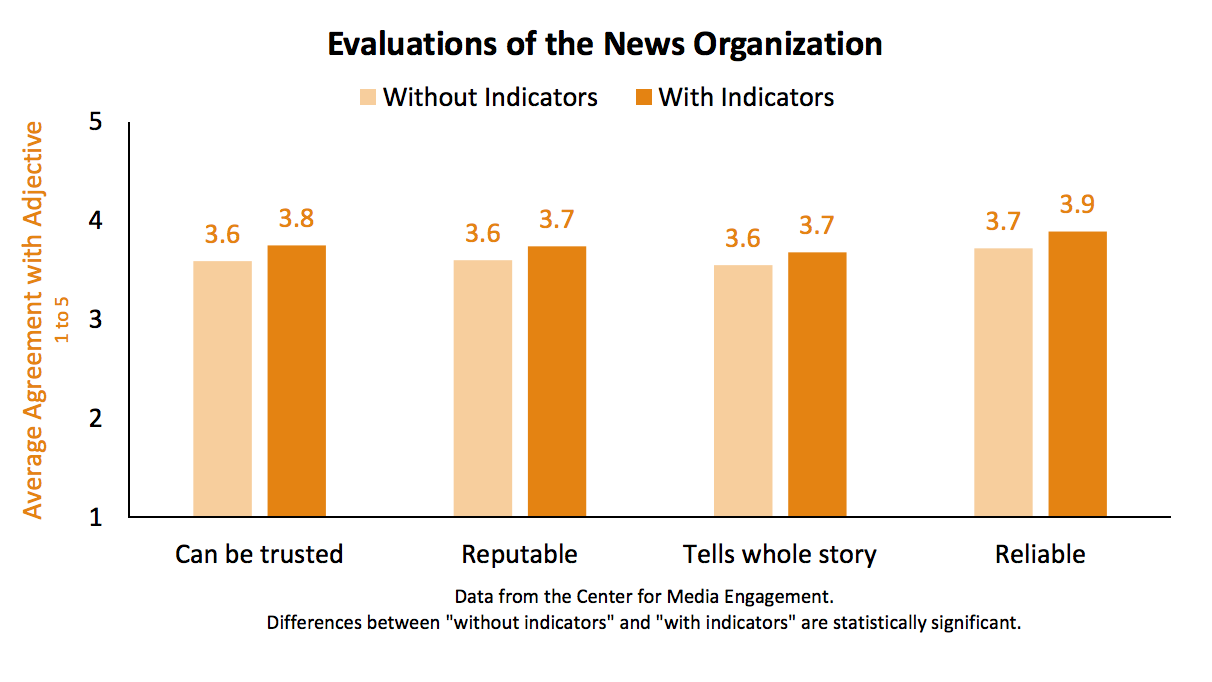

When The Trust Project launched, research by the Center for Media Engagement at the University of Texas was underway to test consumers’ reactions to “trust indicators,” and on Tuesday that research was released. The survey of 1,183 U.S. adults showed that when trust indicators — such as a reporter’s picture and job title, footnotes to source material, or a “behind the story” section explaining why an article was written — were present on articles on a fictitious news site, study participants responded favorably:

— Evaluations of the news organization were higher when indicators were present.

— Evaluations of the reporter who wrote the article were higher when indicators were present.

— Audience intentions to seek out more news from the news organization and to talk with others about the article increased with the presence of the trust indicators.

— The most frequently noticed indicators were the description of why the story was written (noticed by 44 percent) and information about the Trust Project (noticed by 43 percent).

— A majority of participants thought that noting a news organization was participating in the Trust Project would increase their trust (63 percent), as would including a “Behind the Story” section (59 percent thought it would increase trust) and providing a list of best practices such as ethics, diversity and corrections policies (53 percent).

The differences weren’t large, though. And although survey respondents were given 15 sets of adjectives to describe the (fictitious) news outlet, the differences were statistically significant for only 4 of those sets: “can be trusted/can’t be trusted,” “reputable/not reputable,” “tells whole story/does not tell whole story,” and “reliable/not reliable.”

(The other 11 adjective sets were “enjoyable/unenjoyable, interesting/uninteresting, informative/uninformative, clear/confusing, credible/not credible, accurate/inaccurate, easy to navigate/difficult to navigate, not biased/biased, fair/unfair, has integrity/does not have integrity, and does not have an agenda/has an agenda.” Respondents’ assessments on these didn’t change whether or not trust indicators were present.)

The authors of the report, Alex Curry and Natalie Jomini Stroud, acknowledge:

> As with any study, this one is not without its limitations. As we already noted, we don’t know which indicator, or combination of indicators, was responsible for the observed effects. Future research will have to disentangle their effects. More importantly, we note that the results are for a mock news site that was unknown to the study participants. It’s not clear how people will respond to the trust indicators on more familiar news sites. It is important to note, however, that audiences don’t always pay attention to the media brand when consuming news. The results here, however, demonstrate that Trust Indicators can affect what people think about the news.

It seems…unlikely that the presence of trust indicators on The Washington Post’s website is going to change the minds of anyone who attacks it as the Amazon Washington Post and calls it fake news. But it’s a start. And, speaking of the Post, it launched a “How to be a journalist” video series last week that aims to shed light on the reporting process and teach readers more about what journalists actually do.