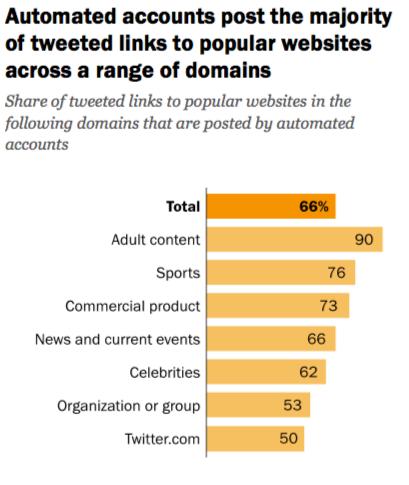

Certain types of websites were much more popular with the bots than others: Around 90 percent of the tweeted links to “adult content” sites came from what were likely bot accounts in the sample of tweets Pew looked at.

Seventy-six percent of tweeted links to popular sports sites came from bots (though don’t take these percentages as a proxy for how many real humans actually saw or engaged with these bot-generated tweets).

Pew’s report analyzed a sample of 1.2 million tweets containing links that went out between July 27 and September 11, 2017, categorizing 2,315 of the most-linked-to English-language websites by topic. To help determine whether the Twitter account tweeting out a link was likely a bot, Pew relied on Botometer, an automated-posting detection tool from researchers at the University of Southern California and the Center for Complex Networks and Systems Research at Indiana University.

Researchers also found that a few hundred extremely active bots dominated the share of tweets linking out to news sites:

> This analysis finds that the 500 most-active suspected bot accounts are responsible for 22 percent of tweeted links to popular news and current events sites over the period in which this study was conducted. By comparison, the 500 most-active human users are responsible for a much smaller share (an estimated 6 percent) of tweeted links to these outlets.

But these automated posters on Twitter did not appear to be primarily pushing one political leaning or another:

Suspected bots share roughly 41 percent of links to political sites shared primarily by liberals and 44 percent of links to political sites shared primarily by conservatives — a difference that is not statistically significant. By contrast, suspected bots share 57 percent to 66 percent of links from news and current events sites shared primarily by an ideologically mixed or centrist human audience.

Bots can pollute discourse online around divisive issues. Bots can also be useful. And bots are common. In an investigation into the shady practices of buying popularity on social networks like Twitter, The New York Times identified at least 3.5 million of them. Researchers suggest that as many as 15 percent of all Twitter accounts appear to be behaving like bots. Pew’s analysis wasn’t about fact-checking tweets or rooting out foreign government interference, so “we can’t say from this study whether the content shared by automated accounts is truthful information or not, or the extent to which users interact with content shared by suspected bots,” Stefan Wojcik, the study’s lead author, said.

Twitter, for its part, has been cracking down a bit more on bad and spammy activity. A purge in February set off alarm bells among many loud voices in the pro-Trump and far-right crowd. In its study of the prevalence of automated posting in the Twittersphere, Pew also found that 7,226 of the accounts included in its 2017 analysis were later suspended by Twitter, 5,592 of which were suspected bots (accounts with automated posting were 4.6 times more likely to be suspended than accounts from real people).
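To put those suspension counts in proportion, here is a quick back-of-the-envelope calculation. It uses only the two figures reported above; the variable names are mine, not Pew's. Note that the 4.6-times figure can't be reproduced from these counts alone, since it also depends on how many bot and human accounts were in the sample overall, which isn't given here.

```python
# Figures reported in Pew's analysis, as cited above.
suspended_total = 7226   # accounts in the sample later suspended by Twitter
suspended_bots = 5592    # of those, accounts flagged as likely bots

# Everything else: suspended accounts that were not flagged as bots.
suspended_humans = suspended_total - suspended_bots

# Share of suspended accounts that had been flagged as bots.
bot_share = suspended_bots / suspended_total

print(f"Suspended accounts not flagged as bots: {suspended_humans}")
print(f"Share of suspended accounts flagged as bots: {bot_share:.1%}")
```

In other words, roughly three of every four suspended accounts in the sample had been flagged as likely bots.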

Most bots are not openly identified as such, and Botometer isn’t 100 percent accurate, so for the purposes of Pew’s research, the bots identified in its analysis are all “suspected bots.”

“I want to lead this off by saying that any classification system of whether something is likely bot or not is inherently going to contain some level of uncertainty and is going to produce false positives as well as false negatives,” Aaron Smith, associate director of research, told me in response to some individual criticisms of the methodology raised on Twitter after the report was published on Monday. “I’m a survey research guy by training and an analogy I sometimes use to talk about this is, if you pick any individual person out of the population, you may find someone who has views that are wildly divergent with the bulk of public opinion, but if you collect this bulk of responses using known and tested methodologies, you find something that largely conforms with observed reality, even if you have outliers and extreme cases.”

For its study, Pew conducted several separate tests in conjunction with Botometer scoring to cross-check the bot classifications it had made, including manual classification of accounts. It also reran its findings with tweets from verified accounts (such as @realdonaldtrump) removed, and found the results didn’t significantly change.

> Thanks for your comment. We chose the cut point that maximized accuracy of the Botometer score, based on validation tests using human coders. Also, when we remove 'verified' Twitter accounts, like those you flagged, results are still very similar: https://t.co/zlWp018azR

— stefan j. wojcik (@stefanjwojcik) April 9, 2018
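For readers wondering what "the cut point that maximized accuracy" means in practice, here is a minimal sketch of the general idea, not Pew's actual code. It assumes each account has a bot-likelihood score between 0 and 1 and a yes/no judgment from a human coder; the function name `best_cut_point` and all of the data are made up for illustration.

```python
def best_cut_point(scores, human_labels, candidates):
    """Return the (cut_point, accuracy) pair that best matches human coders.

    An account is classified as a bot when its score is at or above the
    cut point. Ties go to the lowest candidate cut point.
    """
    best = None
    for cut in candidates:
        predicted = [score >= cut for score in scores]
        correct = sum(p == h for p, h in zip(predicted, human_labels))
        accuracy = correct / len(scores)
        if best is None or accuracy > best[1]:
            best = (cut, accuracy)
    return best


# Hypothetical validation set: bot-likelihood scores and human judgments
# (True means the human coder decided the account was a bot).
scores = [0.10, 0.20, 0.35, 0.40, 0.55, 0.60, 0.80, 0.90]
human_labels = [False, False, False, True, False, True, True, True]

# Try every cut point from 0.00 to 1.00 in steps of 0.05.
candidates = [i / 100 for i in range(0, 101, 5)]

cut, accuracy = best_cut_point(scores, human_labels, candidates)
print(f"Best cut point: {cut:.2f} (accuracy {accuracy:.1%})")
```

Even at the best cut point in this toy example, one account is misclassified, which is the kind of unavoidable false positive or false negative Smith describes.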

Here’s a Pew Research video also explaining how researchers approached finding Twitter bots. You can read the full analysis and methodologies here.