The growing stream of reporting on and data about fake news, misinformation, partisan content, and news literacy is hard to keep up with. This weekly roundup offers the highlights of what you might have missed.

“Freedom of speech versus freedom of reach.” Pinterest got a positive spate of publicity Thursday as a couple of different outlets reported on its policy (“which the company hasn’t previously publicly discussed but which went into effect late last year,” per The Wall Street Journal) of refusing to return search results for certain “polluted” terms like “vaccine” and “suicide.” From The Guardian:

“We are doing our best to remove bad content, but we know that there is bad content that we haven’t gotten to yet,” explained Ifeoma Ozoma, a public policy and social impact manager at Pinterest. “We don’t want to surface that with search terms like ‘cancer cure’ or ‘suicide.’ We’re hoping that we can move from breaking the site to surfacing only good content. Until then, this is preferable.”

Pinterest also includes health misinformation images in its “hash bank,” preventing users from re-pinning anti-vaxx memes that have already been reported and taken down. (Hashing applies a unique digital identifier to images and videos; it has been more widely used to prevent the spread of child abuse images and terrorist content.)
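Pinterest hasn't described how its hash bank matches images, so what follows is only a rough Python sketch of the general idea. A cryptographic hash such as SHA-256 catches only byte-for-byte copies, so systems built to stop re-uploads (Microsoft's PhotoDNA is the best-known example) rely on perceptual hashes, which change little when an image is resized or slightly recolored. This sketch uses a much simpler "average hash" over an image assumed to be already downscaled to an 8×8 grayscale grid; the `HashBank` class, its method names, and the 5-bit distance threshold are hypothetical choices for illustration.

```python
from typing import List, Set

Grid = List[List[int]]  # grayscale pixel values, 0-255, assumed pre-downscaled


def average_hash(pixels: Grid) -> int:
    """One bit per pixel: 1 if the pixel is brighter than the image's mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of bits that differ between two hashes."""
    return bin(a ^ b).count("1")


class HashBank:
    """Hypothetical store of hashes for images that were already taken down."""

    def __init__(self, max_distance: int = 5) -> None:
        self.hashes: Set[int] = set()
        self.max_distance = max_distance

    def add(self, pixels: Grid) -> None:
        self.hashes.add(average_hash(pixels))

    def is_banned(self, pixels: Grid) -> bool:
        # Near-duplicates match too, not just exact copies.
        h = average_hash(pixels)
        return any(hamming(h, known) <= self.max_distance for known in self.hashes)


# Usage: ban a reported "meme" (bright left half, dark right half).
meme = [[200] * 4 + [30] * 4 for _ in range(8)]
bank = HashBank()
bank.add(meme)

# A slightly brightened re-upload still matches the banned hash.
reupload = [[p + 10 for p in row] for row in meme]
# An unrelated image (bright top half, dark bottom half) does not.
other = [[200] * 8 for _ in range(4)] + [[30] * 8 for _ in range(4)]

print(bank.is_banned(reupload))  # True
print(bank.is_banned(other))     # False
```

The threshold trades recall against false positives: a larger distance catches more edited copies but risks flagging unrelated images.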

And the company has banned all pins from certain websites.

“If there’s a website that is dedicated in whole to spreading health misinformation, we don’t want that on our platform, so we can block on the URL level,” Ozoma said.

Users simply cannot “pin” a link to StopMandatoryVaccination.com or the “alternative health” sites Mercola.com, HealthNutNews.com or GreenMedInfo.com; if they try, they receive an error message stating: “Invalid parameters.”
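How Pinterest blocks at "the URL level" isn't public. A minimal Python sketch of the general approach, assuming a hypothetical blocklist keyed on domain names, is to pull the hostname out of each submitted link and check it, along with each of its parent domains, against the list, so that subdomains such as `www.` are caught too. The `BLOCKED_DOMAINS` set, the `is_blocked` and `pin` functions, and the matching rules are illustrative assumptions; only the domains and the “Invalid parameters.” message come from the Guardian's report.

```python
from urllib.parse import urlsplit

# Hypothetical blocklist built from the sites named in the Guardian's report.
BLOCKED_DOMAINS = {
    "stopmandatoryvaccination.com",
    "mercola.com",
    "healthnutnews.com",
    "greenmedinfo.com",
}


def is_blocked(url: str) -> bool:
    """True if the URL's host, or any parent domain of it, is on the blocklist."""
    if "://" not in url:
        url = "http://" + url  # let urlsplit find a host in bare links
    host = (urlsplit(url).hostname or "").rstrip(".")  # .hostname is lowercased
    labels = host.split(".")
    # For "www.mercola.com", check "www.mercola.com", "mercola.com", then "com".
    return any(".".join(labels[i:]) in BLOCKED_DOMAINS for i in range(len(labels)))


def pin(url: str) -> str:
    """Hypothetical pin handler that rejects links to blocked sites."""
    if is_blocked(url):
        return "Invalid parameters."
    return "Pinned."


print(pin("https://www.mercola.com/some-article"))  # Invalid parameters.
print(pin("https://www.cdc.gov/vaccines/"))         # Pinned.
```

Matching on whole domain labels means `notmercola.com` is not caught by the `mercola.com` entry, while `articles.mercola.com` is.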

The Journal describes Pinterest’s rigorous process of determining whether content violates its health misinformation guidelines, and oh boy, does it sound unbelievably different from anything that Facebook or Twitter does.

Pinterest trains and uses human reviewers to make determinations about whether or not shared images on the site, called pins, violate its health-misinformation guidelines. The reviewers rely on information from the World Health Organization, the federal Centers for Disease Control and Prevention and the American Academy of Pediatrics to judge the veracity of content, the company said. Training documents for reviewers and enforcement guidelines are updated about every six months, according to the company. The process is time-intensive and expensive, in part, because the artificial-intelligence technology required to automate the process doesn’t yet exist, Ms. Ozoma said.

“The only folks who lose in this decision are ones who, if they had their way, would trigger a global health crisis,” Casey Newton wrote in his newsletter The Interface. “Here’s to Ozoma and her team for standing up to them.”

Please note: Pinterest is definitely not perfect, as this 2017 BuzzFeed piece makes clear.

Nov. 2017: @leticia and I write about health misinformation on Pinterest, which the platform says is only a minor problem https://t.co/wOw5MJR1tn

Feb. 2019: Pinterest reveals that it's banned search results for vaccines and dubious cancer cures https://t.co/puwD7woJfB

— Stephanie M. Lee (@stephaniemlee) February 21, 2019

In probably unrelated news, Pinterest filed paperwork to go public via an IPO, the Journal reported Thursday afternoon. It’s currently valued at $12 billion. That’s more than 4× the valuation of America’s publicly traded local newspaper companies (Gannett, McClatchy, Tribune Publishing, Lee, GateHouse, and Belo) combined.

Meanwhile, while Pinterest is being all responsible… BuzzFeed News’ Caroline O’Donovan (a former Nieman Lab staffer) and Logan McDonald investigated how YouTube’s algorithm leads users who perform vaccine-related searches down rabbit holes of anti-vaxx videos.

For example, last week, a YouTube search for “immunization” in a session unconnected to any personalized data or watch history produced an initial top search result for a video from Rehealthify that says vaccines help protect children from certain diseases. But YouTube’s first Up Next recommendation following that video was an anti-vaccination video called “Mom Researches Vaccines, Discovers Vaccination Horrors and Goes Vaccine Free” from Larry Cook’s channel. He is the owner of the popular anti-vaccination website StopMandatoryVaccination.com.

In BuzzFeed’s further experiments, even clicking on a pro-vaccination video the first time led you to an anti-vaccination video the next time:

In 16 searches for terms including “should i vaccinate my kids” and “are vaccines safe,” whether the top search result we clicked on was from a professional medical source (like Johns Hopkins, the Mayo Clinic, or Riley Hospital for Children) or an anti-vaccination video like “Mom Gives Compelling Reasons To Avoid Vaccination and Vaccines,” the follow-up recommendations were for anti-vaccination content 100% of the time. In almost every one of these 16 searches, the first Up Next recommendation after the initial video was either the anti-vaccination video featuring Shanna Cartmell (currently at 201,000 views) or “These Vaccines Are Not Needed and Potentially Dangerous!” from iHealthTube (106,767 views). These were typically followed by a video of anti-vaccination activist Dr. Suzanne Humphries testifying in West Virginia (currently 127,324 views).

That’s partly because of “data voids,” a concept discussed in the Guardian piece and expounded by Michael Golebiewski and danah boyd. “In the case of vaccines, the fact that scientists and doctors are not producing a steady stream of new digital content about settled science has left a void for conspiracy theorists and fraudsters to fill with fear-mongering propaganda and misinformation,” The Guardian’s Julia Carrie Wong writes. There just aren’t very many pro-vaccine viral videos.

Last week, I wrote to Facebook and Google to express my concern that their sites are steering users to bad information that discourages vaccinations, undermining public health.

The search results you get for “vaccines” on Facebook are a dramatic illustration. pic.twitter.com/ZrEQfVTaRo

— Adam Schiff (@RepAdamSchiff) February 20, 2019

what pro-vax content youtube DOES have has been created almost entirely in response to anti-vax material. it's subordinate to the problem. this applies to lots of subjects on yt. it's a searchy, reccy platform — it controls incentives but it's also an expression of its library

— John Herrman (@jwherrman) February 19, 2019

Pinterest is taking harm into account when evaluating how to handle medical quackery. Pinterest is unique in that it’s mostly women. A substantial amount of health quackery targets women – antivax ads targeted to pregnant women and moms on FB, for example.

— Renee DiResta (@noUpside) February 21, 2019

The anti-vax stuff is not actually YouTube’s biggest problem this week. On Sunday, Matt Watson, a former YouTube creator, posted a video detailing how

Youtube’s recommended algorithm is facilitating pedophiles’ ability to connect with each other, trade contact info, and link to actual CP in the comments. I can consistently get access to it from vanilla, never-before-used Youtube accounts via innocuous videos in less than ten minutes, in sometimes less than five clicks. Additionally, I have video evidence that these videos are being monetized by Youtube, brands like McDonald’s, Lysol, Disney, Reese’s, and more.

The Verge’s Julia Alexander reported on how YouTube has repeatedly failed to stop child predation on the platform:

While individual videos are removed, the problematic users are rarely banned, leaving them free to upload more videos in the future. When Watson reported his own links to child pornography, YouTube removed the videos, but the accounts that posted the videos usually remained active. YouTube did not respond to The Verge’s question about how the trust and safety team determined which accounts were allowed to remain active and which weren’t.

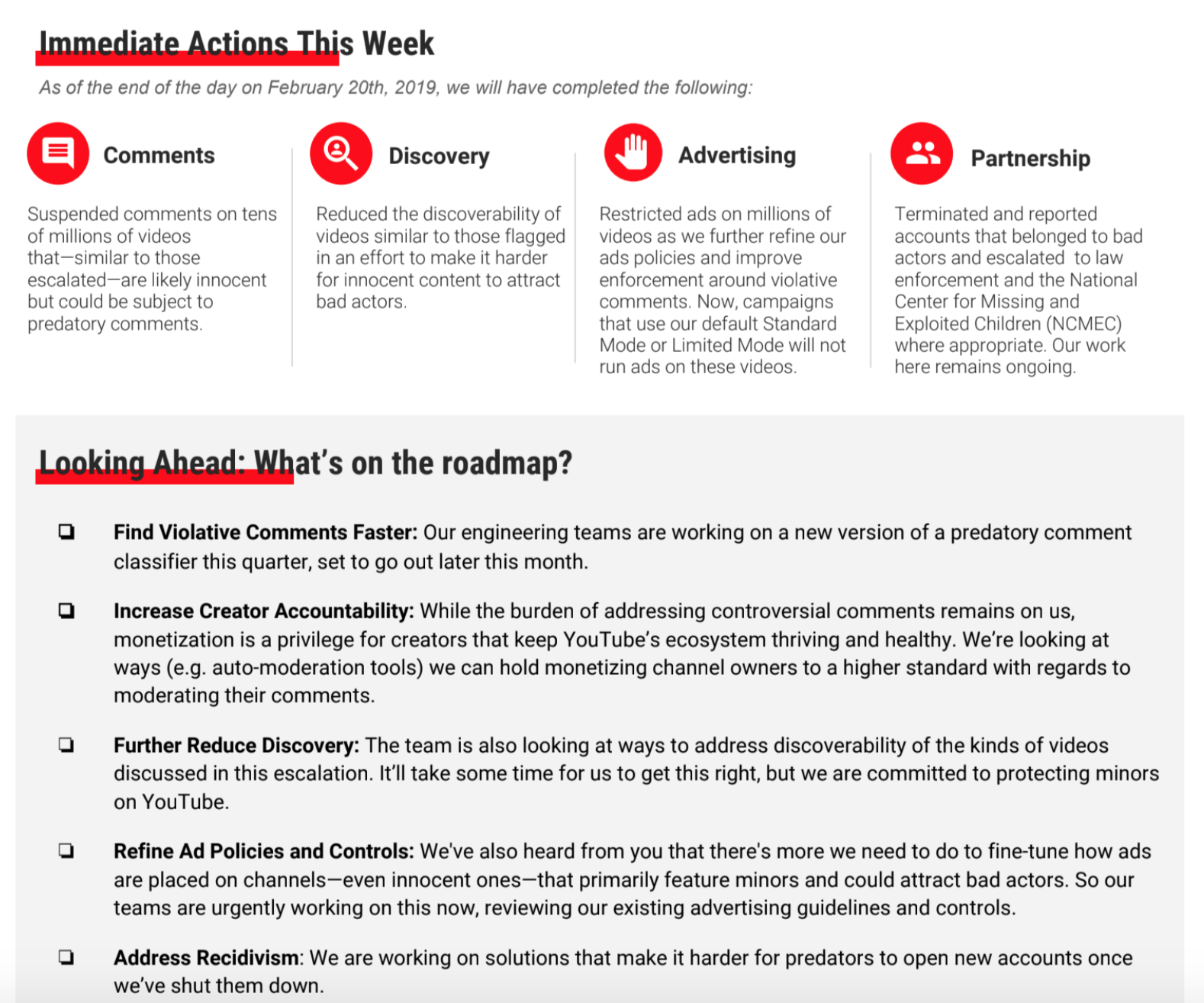

Watson’s investigation led big advertisers like Nestlé, Disney, and AT&T to pull ads from YouTube this week. Adweek has a copy of a memo that YouTube sent to major brands on Wednesday outlining actions it’s taken already and what it plans to do in the future.

How Google (YouTube’s owner) fights fake news. All of the above can be read alongside “How Google Fights Disinformation,” a white paper the company released at the Munich Security Conference on February 16 that outlines some of the ways in which Google is attempting to reduce false information across its products. The paper is largely boring and vague but does make a nod to data voids:

A known strategy of propagators of disinformation is to publish a lot of content targeted on “data voids,” a term popularized by the U.S. based think tank Data and Society to describe Search queries where little high-quality content exists on the web for Google to display due to the fact that few trustworthy organizations cover them. This often applies, for instance, to niche conspiracy theories, which most serious newsrooms or academic organizations won’t make the effort to debunk. As a result, when users enter Search terms that specifically refer to these theories, ranking algorithms can only elevate links to the content that is actually available on the open web — potentially including disinformation.

We are actively exploring ways to address this issue, and others, and welcome the thoughts and feedback of researchers, policymakers, civil society, and journalists around the world.

“There is little information about how to help clinicians respond to patients’ false beliefs or misperceptions.” Researchers from the National Institutes of Health’s National Cancer Institute wrote in JAMA in December about coordinating a response to health-related misinformation. They have far more questions than answers — among the knowledge gaps:

What’s the best way for doctors to respond to “patients’ false beliefs or misperceptions”? What is the right timing for “public health communicators” to intervene “when a health topic becomes misdirected by discourse characterized by falsehoods that are inconsistent with evidence-based medicine”? What are the most important ways that health misinformation is shared? They write:

Research is needed that informs the development of misinformation-related policies for health care organizations. These organizations should be prepared to use their social media presence to disseminate evidence-based information, counter misinformation, and build trust with the communities they serve.

Maybe some of those data voids can get filled.