The controversy about the human curators behind Facebook Trends has grown since Gizmodo’s allegations last week. Besides being a major headache for Facebook, it has helped prod a broader discussion about Facebook’s power to shape the information we see and what we take to be most important. But we still lack the right words to describe what algorithmic systems are, who generates them, and what they should do for users and for the public. We have to get this clear.

Here’s the case so far: Gizmodo says that Facebook hired human curators to decide which topics, identified by algorithms, would be listed as trending, and how they should be named and summarized; one former curator alleged that his fellow curators often overlooked or suppressed conservative topics. This came too close on the heels of a report a few weeks back that Facebook employees had asked internally whether the company had a responsibility to slow Donald Trump’s momentum. Angry critics have noted that Zuckerberg, Search VP Tom Stocky, and other Facebook executives are liberals. Facebook has vigorously disputed the allegation: it has said that it has guidelines in place to ensure consistency and neutrality and that there is no evidence the suppression happened; it distributed its guidelines for how Trending topics are selected and summarized after they were leaked; it invited conservative leaders in for a discussion; and it pointed to its conservative bona fides. The Senate’s Commerce Committee, chaired by Republican Senator John Thune, issued a letter demanding answers from Facebook. Some wonder whether the charges were overstated. Other Facebook news curators have spoken up, some to downplay the allegations and defend the process that was in place, others to highlight the sexist and toxic work environment they endured.

Commentators have used the controversy to express a range of broader concerns about Facebook’s power and prominence. Some argue it is unprecedented: “When a digital media network has one billion people connected to entertainment companies, news publications, brands, and each other, the right historical analogy isn’t television, or telephones, or radio, or newspapers. The right historical analogy doesn’t exist.” Others have made the case that Facebook is now as powerful as the media corporations that have been regulated for their influence; that its power over news organizations and how they publish is growing; that it could, potentially and knowingly, engage in political manipulation; that it is not transparent about its choices; and that it has become an information monopoly.

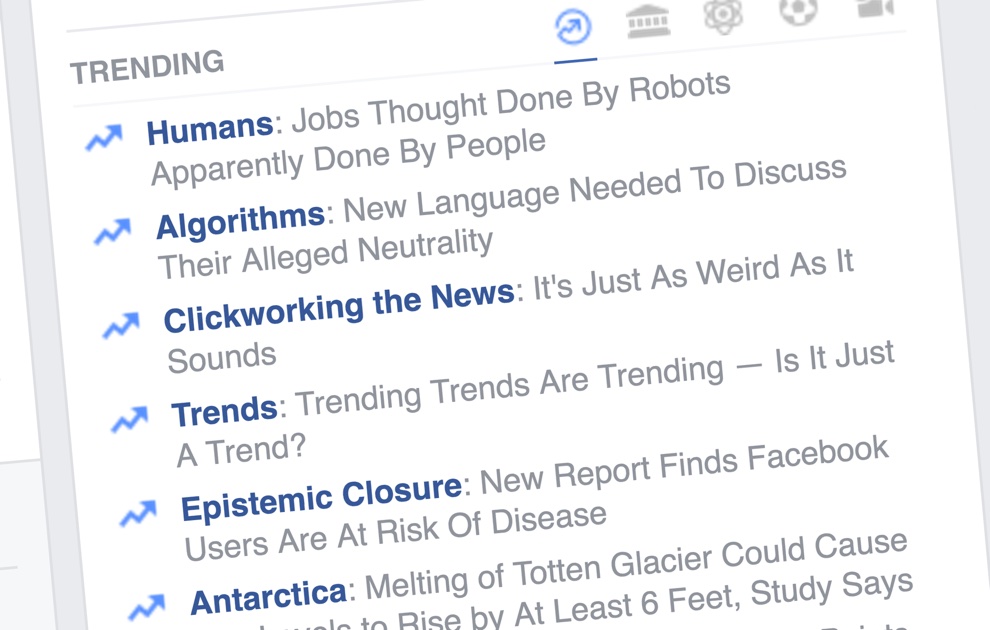

This is an important public reckoning about Facebook, and about social media platforms more generally, and it should continue. We clearly don’t yet have the language to capture the kind of power we think Facebook now holds. But it would be great if, along the way, we could finally mothball some foundational and deeply misleading assumptions about Facebook and social media platforms, assumptions that have clouded our understanding of their role and responsibility. Starting with the big one:

We prefer the idea that algorithms run on their own, free of the messy bias, subjectivity, and political aims of people. It’s a seductive and persistent myth, one Facebook has enjoyed and propagated. But it’s simply false.

I’ve already commented on this, and many of those who study the social implications of information technology have made this point abundantly clear (including Pasquale, Crawford, Ananny, Tufekci, boyd, Seaver, McKelvey, Sandvig, Bucher, and nearly every essay on this list). But it persists: in statements made by Facebook, in the explanations offered by journalists, even in the words of Facebook’s critics.

If you still think algorithms are neutral because they’re not people, here’s a list, not even an exhaustive one, of the human decisions that have to be made to produce something like Facebook’s Trending Topics (which, keep in mind, pales in scope and importance next to Facebook’s larger algorithmic endeavor, the “news feed” listing your friends’ activity). Some are made by the engineers designing the algorithm, others by curators who turn the output of the algorithm into something presentable. If your eyes start to glaze over, that’s the point; read any three points and then move on, since they’re enough to dispel the myth. Ready?

(determining what activity might potentially be seen as a trend)

— what data should be counted in this initial calculation of what’s being talked about (all Facebook users, or a subset? English language only? private posts too, or just public ones?)

— what time frame should be used in this calculation — both for the amount of activity happening “now” (one minute, one hour, one day?) and to get a baseline measure of what’s typical (a week ago? a different day at the same time, or a different time on the same day? one point of comparison or several?)

— should Facebook emphasize novelty? longevity? recurrence? (e.g., if it has trended before, should it be easier or harder for it to trend again?)

— how much of a drop in activity is sufficient for a trending topic to die out?

— which posts actually represent a single topic (e.g., when do two hashtags refer to the same topic?)

— what other signals should be taken into account? what do they mean? (should Facebook measure posts only, or take into account likes? how heavily should they be weighted?)

— should certain contributors enjoy some privileged position in the count? (corporate partners, advertisers, high-value users? pay-for-play?)

(from all possible trends, choosing which should be displayed)

— should some topics be dropped, like obscenity or hate speech?

— if so, who decides what counts as obscene or hateful enough to leave off?

— what topics should be left off because they’re too generic? (Facebook noted that it didn’t include “junk topics” that do not correlate to a real world event. What counts as junk, case by case?)

(designing how trends are displayed to the users)

— who should do this work? what expertise should they have? who hires them?

— how should a trend be presented? (word? title? summary?)

— what should clicking on a trend headline lead to? (some form of activity on Facebook? some collection of relevant posts? an article off the platform, and if so, which one?)

— should trends be presented in a single list, or broken into categories? if so, can the same topic appear in more than one category?

— what are the boundaries of those categories (i.e. what is or isn’t “politics”?)

— should trends be grouped regionally or not? if so, what are the boundaries of each region?

— should trends lists be personalized, or not? if so, what criteria about the user are used to make that decision?

(what to do if the list is deemed to be broken or problematic in particular ways)

— who looks at this project to assess how it’s doing? how often, and with what power to change it?

— what counts as the list being broken, or off the mark, or failing to meet the needs of users or of Facebook?

— what is the list being judged against, to know when it’s off (as tested against other measures of Facebook activity? as compared to Twitter? to major news sites?)

— should they re-balance a Trends list that appears unbalanced, or leave it? (e.g. what if the items in the list at this moment are all sports, or all celebrity scandal, or all sound “liberal”?)

— should they inject topics that aren’t trending, but seem timely and important?

— if so, according to what criteria? (news organizations? which ones? how many? US v. international? partisan vs not? online vs off?)

— should topics about Facebook itself be included?

These are all human choices. Sometimes they’re made in the design of the algorithm, sometimes around it. The result we see, a changing list of topics, is not the output of “an algorithm” by itself, but rather of an effort that combined human activity and computational analysis, together, to produce it.
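A toy version of the first group of decisions shows how they turn into parameters. What follows is a minimal, hypothetical Python sketch, not Facebook’s actual algorithm, whose details are not public: the `Post` and `TrendConfig` types, the `trending` function, and every default value (a one-hour “now,” a 24-hour baseline, a spike ratio of 3, English-only, public-only) are illustrative assumptions. It assumes posts have already been grouped into topics, which is itself one of the judgment calls listed above, and it compares activity in a short recent window against a longer baseline.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Post:
    topic: str      # assumes posts are already grouped into topics (itself a judgment call)
    hour: int       # hour index at which the post was made
    language: str
    public: bool


@dataclass
class TrendConfig:
    # Every default below is a hypothetical human choice, not a known Facebook setting.
    current_window_hours: int = 1              # what counts as "now"?
    baseline_window_hours: int = 24            # what counts as "typical"?
    min_ratio: float = 3.0                     # how big a spike makes a trend?
    min_count: int = 10                        # how small is too small to matter?
    languages: frozenset = frozenset({"en"})   # whose posts are counted at all?
    public_only: bool = True                   # private posts too, or just public ones?
    blocklist: frozenset = frozenset()         # which topics are dropped, and who decides?


def trending(posts, now_hour, cfg):
    """Return (topic, spike_ratio) pairs, highest ratio first."""
    counted = [p for p in posts
               if p.language in cfg.languages and (p.public or not cfg.public_only)]
    window_start = now_hour - cfg.current_window_hours
    baseline_start = window_start - cfg.baseline_window_hours
    recent = Counter(p.topic for p in counted if window_start < p.hour <= now_hour)
    baseline = Counter(p.topic for p in counted
                       if baseline_start < p.hour <= window_start)
    results = []
    for topic, n in recent.items():
        if topic in cfg.blocklist or n < cfg.min_count:
            continue
        # Baseline activity rescaled to the length of the current window; the +1
        # keeps brand-new topics from dividing by zero (another arbitrary choice).
        expected = baseline[topic] / cfg.baseline_window_hours * cfg.current_window_hours
        ratio = n / (expected + 1)
        if ratio >= cfg.min_ratio:
            results.append((topic, round(ratio, 2)))
    return sorted(results, key=lambda pair: pair[1], reverse=True)


# Made-up example data: a sudden "eclipse" spike, steady "weather" chatter,
# and a Spanish-language "futbol" spike.
posts = (
    [Post("eclipse", 100, "en", True)] * 12
    + [Post("eclipse", 80, "en", True), Post("eclipse", 90, "en", True)]
    + [Post("weather", 100, "en", True)] * 12
    + [Post("weather", h, "en", True) for h in range(76, 100) for _ in range(10)]
    + [Post("futbol", 100, "es", True)] * 15
)

english_only = trending(posts, 100, TrendConfig())
with_spanish = trending(posts, 100, TrendConfig(languages=frozenset({"en", "es"})))
print(english_only)   # [('eclipse', 11.08)]
print(with_spanish)   # [('futbol', 15.0), ('eclipse', 11.08)]
```

In this made-up run, nobody’s behavior changes between the two calls; only the decision about whose posts count does, and a new topic jumps to the top of the list. The sketch covers only what counts as a trend; choices about display, categories, personalization, and curation sit outside it entirely.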

So algorithms are in fact full of people and the decisions they make. When we let ourselves believe that they’re not, we let everyone (Zuckerberg, his software engineers, regulators, and the rest of us) off the hook for actually thinking through how they should work, leaving us all unprepared when they end up in the tall grass of public contention. “Any algorithm that has to make choices has criteria that are specified by its designers. And those criteria are expressions of human values. Engineers may think they are ‘neutral’, but long experience has shown us they are babes in the woods of politics, economics and ideology.” Calls for more public accountability, like this one from my colleague danah boyd, can only proceed once we completely jettison the idea that algorithms are neutral and replace it with a different language that can assess the work that people and systems do together.

It is certainly in Facebook’s interest to obscure all the people involved, so users can keep believing that a computer program is fairly and faithfully hard at work. Dismantling this myth raises the kind of hard questions Facebook is fielding. But once we jettison this myth, what’s left? With so many human decisions involved, it’s easy to despair: how could we ever get a fair and impartial measure of what matters? And forget the handful of people who designed the algorithm and the handful of people who select and summarize from it: Trends are themselves a measure of the activity of Facebook users. These trending topics aren’t produced by dozens of people but by millions. Their judgment of what’s worth talking about, in each case and in the aggregate, may be so distressingly incomplete, biased, skewed, and vulnerable to manipulation that it’s absurd to pretend it can tell us anything at all.

But political bias doesn’t come from the mere presence of people. It comes from how those people are organized to do what they’re asked to do. Alongside our assumption that algorithms are neutral is a matching and equally misleading assumption that people are always and irretrievably biased. But human endeavors are organized affairs, and they can be organized to work against bias. Journalism is full of people too, making all sorts of decisions that are just as opaque, limited, and self-interested. What we hope keeps journalism from slipping into bias and error are well-established professional norms and thoughtful oversight.

The real problem here is not the liberal leanings of Facebook’s news curators. If conservative news topics were overlooked, it’s only a symptom of the underlying problem. Facebook wanted to take surges of activity that its algorithms could identify and turn them into news-like headlines. But it treated this as an information processing problem, not an editorial one. They’re “clickworking” the news.

Clickwork begins with the recognition that computers are good at some kinds of tasks and humans at others. The answer, it suggests, is to break the task at hand into components and parcel them out to each accordingly. For Facebook’s trending topics, the algorithm is good at scanning an immense amount of data and identifying surges of activity, but not at giving those surges a name and a coherent description. That is handled by people; in industry parlance, this is the “human computation” part. These identified surges of activity are delivered to a team of curators, each tasked with following a set of procedures to identify and summarize them. The work is segmented into simple, repetitive tasks and governed by procedures such that, even though different people are doing it, their output will look the same. In effect, the humans are given tasks that only humans can do, but they are not invited to do them in a human way: they are “programmed” by the modularized workflow and the detailed procedures so that they do the work as computers would. As Lilly Irani put it, clickwork “reorganizes digital workers to fit them both materially and symbolically within existing cultures of new media work.”

This is apparent in the guidelines that Facebook gives its Trends curators. The documents, leaked to The Guardian and then released by Facebook, did not reveal some bombshell about political manipulation, nor did they do much to demonstrate careful guidance from Facebook on the issue of political bias. What’s most striking is that they are mind-numbingly banal: “Write the description up style, capitalizing the first letter of all major words…” “Do not copy another outlet’s headline…” “Avoid all spoilers for descriptions of scripted shows…” “After identifying the correct angle for a topic, click into the dropdown menu underneath the Unique Keyword field and select the Unique Keyword that best fits the topic…” “Mark a topic as ‘National Story’ importance if it is among the 1-3 top stories of the day. We measure this by checking if it is leading at least 5 of the following 10 news websites…” “Sports games: rewrite the topic name to include both teams…” This is not the newsroom; it’s the secretarial pool.

Moreover, these workers were kept separate from Facebook’s full-time employees and worked under quotas for how many trends to identify and summarize, quotas that were increased as the project went on. As one curator noted, “The team prioritizes scale over editorial quality and forces contractors to work under stressful conditions of meeting aggressive numbers coupled with poor scheduling and miscommunication. If a curator is underperforming, they’ll receive an email from a supervisor comparing their numbers to another curator.” All were hourly contractors, bound by non-disclosure agreements and asked not to mention that they worked for Facebook. “’It was degrading as a human being,’ said another. ‘We weren’t treated as individuals. We were treated in this robot way.’” A new piece in The Guardian from one such news curator insists that it was also a toxic work environment, especially for women. These “data janitors” are rendered so invisible in the images of Silicon Valley and how tech works that, when we suddenly hear from one, we’re surprised.

Their work was organized to quickly produce capsule descriptions of bits of information that are styled the same, as if they were produced by an algorithm. (This lines up with other concerns about the use of algorithms and clickworkers to produce cheap journalism at scale, and about the increasing influence on news judgment of audience metrics about what’s popular.) It was not, however, organized to thoughtfully assemble a vital information resource that some users treat as the headlines of the day. It was not organized to help these news curators develop shared experience in how to do this work well, handle contentious topics, or reflect on the possible political biases in their choices. It was not likely to foster a sense of community and shared ambition with Facebook, and the lack of one might lead frustrated and overworked news curators to indulge their own political preferences. And I suspect it was not likely to funnel any insights they had about trending topics back to the designers of the algorithms they depended on.

Part of why charges of bias are so compelling is that we have a longstanding concern about the problem of bias in news. For more than a century we’ve fretted about the individual bias of reporters, the slant of news organizations, and the limits of objectivity. But is a list of trending topics a form of news? Are the concerns we have about balance and bias in the news relevant for trends?

“Trends” is a great word, the best word to have emerged amid the social media zeitgeist. In a cultural moment obsessed with quantification, “trends,” defended as the product of an algorithm, is a powerful and deliberately vague term that does not reveal what it measures. Commentators press Facebook for clearer explanations of how it chooses trends, but “trends” could mean a wide array of things: the most activity, the most rapid rise, or a completely subjective judgment about what’s popular.

But however they are measured and curated, Facebook’s Trends are, at their core, measures of activity on the site. So, at least in principle, they are not news; they are expressions of interest. Facebook users are talking about some things, a lot, for some reason. This has little to do with “news,” which implies attention to events in the world and some judgment of importance. Of course, many things Facebook users talk about, though not all, are public events. And it seems reasonable to assume that talking about a topic represents some judgment of its importance, however minimal. Facebook takes these identifiable surges of activity as proxies for importance. Facebook users “surface” the news…approximately. The extra step of “injecting” stories drawn from the news that were, for whatever reason, not surging among Facebook users goes further, turning this proxy of the news into a simulation of it. Clearly this was an attempt to best Twitter; it may also have played into Facebook’s effort to persuade news organizations to partner with it and take advantage of its platform as a means of distribution. But it also encouraged us to hold Trends accountable for news-like concerns, like liberal bias.

We could think about Trends differently, not as approximating the news but as taking the public’s pulse. If Trends were designed to strictly represent “what Facebook users are talking about a lot,” there would presumably be some scientific value, or at least cultural interest, in knowing what (that many) people are actually talking about. If that were its understood value, we might still worry about the intervention of human curators and their political preferences, not because their choices would shape users’ political knowledge or attitudes, but because we’d want this scientific glimpse to be unvarnished by misrepresentation.

But that is not how Facebook has invited us to think about its Trending topics, and it couldn’t even if it wanted to: its interest in Trending topics is neither as a form of news production nor as a pulse of the public, but as a means to keep users on the site and involved. The proof of this, and the detail that so often gets forgotten in these debates, is that the Trending Topics are personalized. Here’s Facebook’s own explanation: “Trending shows you a list of topics and hashtags that have recently spiked in popularity on Facebook. This list is personalized based on a number of factors, including Pages you’ve liked, your location and what’s trending across Facebook.” Knowing what has “spiked in popularity” is not the same as news; a list “personalized based on…Pages you’ve liked” is no longer a site-wide measure of popular activity; an injected topic is no longer just what an algorithm identified.

As I’ve said elsewhere, “trends” are not a barometer of popular activity but a hieroglyph, making provocative, oblique, and fleeting claims about “us” that are invariably open to interpretation. Today’s frustration with Facebook, focused for the moment on the role its news curators might have played in producing these Trends, is really a discomfort with the power Facebook seems to exert: a kind of power that’s hard to put a finger on, a kind of power that our traditional vocabulary fails to capture. But across the controversies that seem to flare again and again, a connecting thread is Facebook’s insistence on colonizing more and more components of social life (friendship, community, sharing, memory, journalism), and turning the production of shared meaning so vital to sociality into the processing of information so essential to its own aims.

This piece is crossposted from Culture Digitally and the Social Media Collective Research Blog.

Tarleton Gillespie is principal researcher at Microsoft Research New England and an adjunct associate professor in the Department of Communication and the Department of Information Science at Cornell University.