The growing stream of reporting on and data about fake news, misinformation, partisan content, and news literacy is hard to keep up with. This weekly roundup offers the highlights of what you might have missed.

“Right-leaning pages consistently earn more interactions than left-leaning or ideologically nonaligned pages.” Conservatives have long complained that their views are censored on Facebook. Republican Sen. Mike Lee of Utah said in a congressional hearing this week that fact-checking, like the labels that Facebook and Twitter attach to false posts, counts as censorship: “When I use the word ‘censor’ here, I’m meaning blocked content, fact-check, or labeled content, or demonetized websites of conservative, Republican, or pro-life individuals or groups or companies.” (Censorship is the suppression of speech or other information on the grounds that it’s considered offensive or questionable. The studies below make it very clear that conservative stories are not being suppressed.)

it is absolutely insane that we have a U.S. Senator who believes that these things are "censorship" [let's see if you can actually hear him this time] pic.twitter.com/zXLk8a5a2O

— Timothy Burke (@bubbaprog) October 28, 2020

"I used the word 'censor' as a term of art and defined it," Lee says in response to Sundar saying "we don't censor anyone."

— nilay patel (@reckless) October 28, 2020

The idea that right-leaning content is actually censored, meaning that people are prevented from seeing it, is “short on facts and long on feelings,” as Casey Newton has written. This week, a couple of stories and studies showed again that conservative content outperforms liberal content on Facebook. (See also: progressive publication Mother Jones’ recent claim that its traffic was throttled as Facebook tweaked its algorithm to benefit conservative sites like The Daily Wire instead.)

— Politico worked with the Institute for Strategic Dialogue, a London-based think tank that studies extremism online, to “analyze which online voices were loudest and which messaging was most widespread around the Black Lives Matter movement and the potential for voter fraud in November’s election.” In their analysis of more than 2 million Facebook, Instagram, Twitter, Reddit, and 4chan posts, the researchers found that

a small number of conservative users routinely outpace their liberal rivals and traditional news outlets in driving the online conversation — amplifying their impact a little more than a week before Election Day. They contradict the prevailing political rhetoric from some Republican lawmakers that conservative voices are censored online — indicating that instead, right-leaning talking points continue to shape the worldviews of millions of U.S. voters.

Here's one example:

At the end of August, for instance, Dan Bongino, a conservative commentator with millions of online followers, wrote on Facebook that Black Lives Matter protesters had called for the murder of police officers in Washington, D.C. Bongino’s social media posts are routinely some of the most shared content across Facebook, based on CrowdTangle’s data.

The claims — first made by a far-right publication that the Southern Poverty Law Center labeled as promoting conspiracy theories — were not representative of the actions of the Black Lives Matter movement. But Bongino’s post was shared more than 30,000 times, and received 141,000 other engagements such as comments and likes, according to CrowdTangle.

In contrast, the best-performing liberal post around Black Lives Matter — from DL Hughley, the actor — garnered less than a quarter of the Bongino post’s social media traction, based on data analyzed by Politico.

The top-performing link posts by U.S. Facebook pages in the last 24 hours are from:

1. Dios Es Bueno

2. Dan Bongino

3. Dan Bongino

4. Dan Bongino

5. Dan Bongino

6. Dan Bongino

7. Donald Trump For President

8. Fox News

9. Dan Bongino

10. David J. Harris Jr.

— Facebook's Top 10 (@FacebooksTop10) October 28, 2020

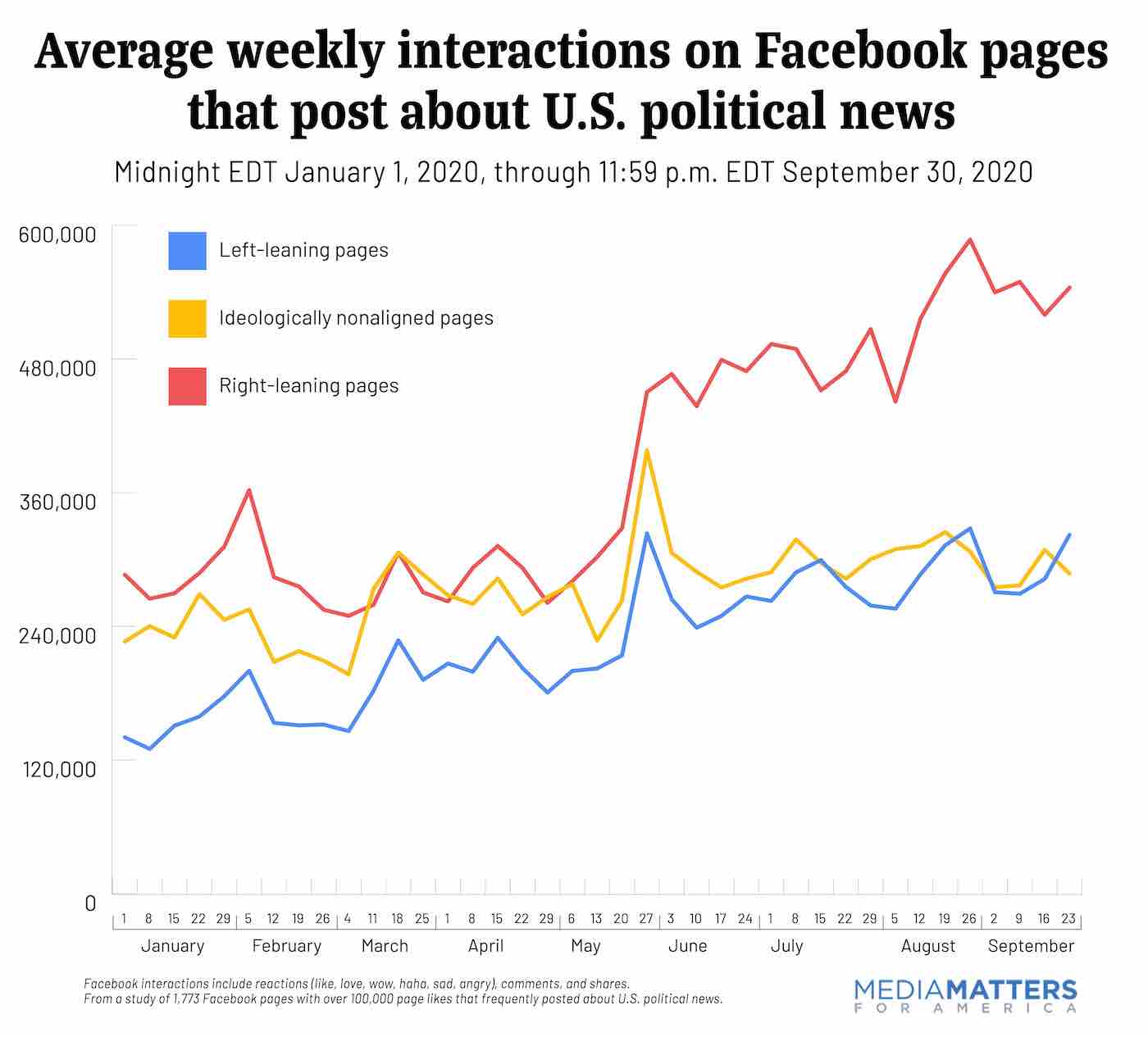

— A nine-month study by the progressive nonprofit Media Matters, using CrowdTangle data, found both that partisan content (left and right) did better than non-partisan content and that “right-leaning pages consistently earned more average weekly interactions than either left-leaning or ideologically nonaligned pages.[…] Between January 1 and September 30, right-leaning Facebook pages tallied more than 6 billion interactions (reactions, comments, shares), or 43% of total interactions earned by pages posting about American political news, despite accounting for only 26% of posts.”

Beware George Soros stories. The New York Times is working with Zignal Labs, a firm that tracks information online, to analyze which news topics in 2020 are most associated with misinformation. “The topic most likely to generate misinformation this year, according to Zignal, was an old standby: George Soros, the liberal financier who has featured prominently in right-wing conspiracy theories for years,” the Times’ Kevin Roose reports. Here’s the full list:

1. George Soros (45.7 percent misinformation mentions)

2. Ukraine (34.2 percent)

3. Vote by Mail (21.8 percent)

4. Bio Weapon (24.2 percent)

5. Antifa (19.4 percent)

6. Biden and Defund the Police (14.2 percent)

7. Hydroxychloroquine (9.2 percent)

8. Vaccine (8.2 percent)

9. Anthony Fauci (3.2 percent)

10. Masks (0.8 percent)

For the top-three subjects — George Soros, Ukraine, and vote by mail — “some of the most common spreaders of misinformation were right-wing news sites like Breitbart and The Gateway Pundit,” Roose notes. “YouTube also served as a major source of misinformation about these topics, according to Zignal.”

“Moving slowly is a Wikipedia super-power.” At Wired, Noam Cohen writes about Wikipedia’s plan to prevent election-related misinformation from making its way onto the platform.

On Wednesday, Wikipedia moved to protect its main 2020 election page, and will likely apply those safeguards to the many other articles that will need to be updated depending on the outcome of the race. The main tools for doing this are similar to the steps it has already deployed to resist disinformation about the Covid-19 pandemic: installing controls to prevent new, untested editors from even dipping a toe until well past Election Day and making sure that there are large teams of editors alerted to any and all changes to election-related articles. Wikipedia administrators will rely on a watchlist of “articles on all the elections in all the states, the congressional districts, and on a large number of names of people involved one way or another,” wrote Drmies, an administrator who helps watch over political articles.

Per Wednesday’s change, anyone editing the article about November’s election must have had a registered account for more than 30 days and already made 500 edits across the site. “I am hoping this will reduce the issue of new editors trying to change the page to what they believe to be accurate when it doesn’t meet the threshold that has been decided,” wrote Molly White, a software engineer living in Boston known on Wikipedia as GorillaWarfare, who put the order in place. The protection for that article, she wrote, was meant to keep away bad actors as well as overly exuberant editors who feel the “urge to be the ones to introduce a major fact like the winner of a presidential election.”

On Election Night, she wrote, Wikipedia is likely to impose even tighter restrictions, limiting the power to publish a winner in the presidential contest — sourced, of course, to reputable outlets like the Associated Press or big network news operations — to the most experienced, most trusted administrators on the project.