From simple labeling to links to third-party sites for accurate information to outright blocking, social media platforms continue to test different ways to keep users informed about content containing mis- and disinformation. A lot of these efforts — often, by the companies’ own admission — have proven less than fruitful.

Now, a new study that analyzed former president Donald Trump’s tweets finds that there are no clear-cut answers when it comes to determining how people engage with content that’s clearly labeled as containing misleading information. The study drew from more than 1,200 tweets from Donald Trump’s Twitter account between October 30, 2020 and January 8, 2021. The authors also collected these tweets’ engagement metrics as well as the nearly 2.4 million replies to these tweets.

At the outset, the study found that labeled tweets were much more likely to garner user engagement: Compared to unlabeled tweets, labeled tweets were liked approximately 36% more, retweeted 70% more, quote tweeted 88% more, and the median number of replies labeled tweets generated was 84% higher than that of unlabeled tweets.
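To make the "84% higher" comparison concrete, here is a minimal sketch of how a relative difference in medians can be computed. The reply counts are made up, chosen only so that the result matches the figure reported above; they are not the study's data. The function name `percent_higher` is hypothetical, and the article does not say whether the like, retweet, and quote-tweet figures were also computed from medians.

```python
from statistics import median


def percent_higher(labeled, unlabeled):
    """Percent by which the labeled median exceeds the unlabeled median."""
    base = median(unlabeled)
    return (median(labeled) - base) / base * 100


# Made-up reply counts, for illustration only (not the study's data).
labeled_replies = [920, 1100, 1840, 2300, 2500]      # median: 1840
unlabeled_replies = [400, 800, 1000, 1500, 3000]     # median: 1000

print(round(percent_higher(labeled_replies, unlabeled_replies)))  # prints 84
```

In other words, a median that is 84% higher means roughly 1,840 replies on a typical labeled tweet for every 1,000 on a typical unlabeled one.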

“Before I was a researcher and was just a Twitter user, I thought labels would have a backlash effect,” Orestis Papakyriakopoulos, a postdoctoral researcher at Princeton and one of the authors of the study, said.

But this study’s findings are consistent with other studies, he said. In one study, researchers found that although Twitter labeled Trump’s tweets as possibly containing misinformation, the labeled tweets drew more user engagement. Another study found that simply blocking Twitter users from engaging with Trump tweets containing misinformation led to fewer of these tweets being shared (although they proliferated on other social media platforms). In contrast, when Twitter chose “soft moderation,” in which tweets were labeled as containing disputed claims or another such marker for misinformation, those tweets from the former president were more likely to be disseminated.

The new study — published recently in the preprint repository SSRN — went further in trying to determine what kind of labeling seemed to make a difference with Twitter engagement.

To categorize the labels Twitter had applied to Trump’s tweets, Papakyriakopoulos and his co-author Ellen P. Goodman drew on previous work by Emily Saltz and Claire Leibowicz. “We classified tweets as soft-moderated or not, and as containing three types of misinformation: fraud-related, election victory–related, and ballots-related. We categorize warning labels by type (veracity or contextual), rebuttal strength, and their linguistic and topical overlap with the associated tweets,” Papakyriakopoulos and his co-author wrote in the study.

“Veracity labels” called out whether information within a tweet was true or false. “Contextual labels” went beyond that to provide more information about the issue. Here’s how the authors classified the other variables:

The second variable was linguistic overlap to signify that the label used the exact same vocabulary as did the tweet. For example, the variable would have the value one when the word “fraud” appeared both in the label and the tweet. The third variable was topical overlap to signify that the label and tweet referred to the same issue, but with different wording. For example, the variable would have the value one when the word “steal” appeared in Trump’s tweet, while the label referred to election “fraud.”
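As a rough illustration of how two such binary variables could be coded, here is a minimal sketch. It is not the authors' method: the topic lexicon `TOPIC_TERMS`, the function names, and the example tweets are all hypothetical, and the sketch assumes that topical overlap is counted only when the tweet and label share a topic without sharing a word.

```python
import re

# Hypothetical lexicon mapping each topic to trigger words (not from the study).
TOPIC_TERMS = {
    "election_fraud": {"fraud", "steal", "stolen", "rigged"},
    "mail_voting": {"mail", "ballot", "ballots"},
}
ALL_TERMS = set().union(*TOPIC_TERMS.values())


def tracked_terms(text):
    """Return the lexicon words that occur in the text (case-insensitive)."""
    return set(re.findall(r"[a-z]+", text.lower())) & ALL_TERMS


def topics(terms):
    """Return the topics touched by a set of lexicon words."""
    return {topic for topic, words in TOPIC_TERMS.items() if words & terms}


def overlap_variables(tweet, label):
    """Return (linguistic_overlap, topical_overlap) as 0/1 values."""
    tweet_terms, label_terms = tracked_terms(tweet), tracked_terms(label)
    # Linguistic overlap: the same tracked word appears in both texts.
    linguistic = int(bool(tweet_terms & label_terms))
    same_topic = bool(topics(tweet_terms) & topics(label_terms))
    # Assumption: topical overlap counts only when the wording differs.
    topical = int(same_topic and not linguistic)
    return linguistic, topical


label = "This claim about election fraud is disputed"
print(overlap_variables("This election was a FRAUD!", label))    # (1, 0)
print(overlap_variables("They want to steal the election", label))  # (0, 1)
```

A real coding scheme would likely need a much richer vocabulary or human annotation; the point here is only that both variables reduce to a yes/no comparison between the tweet's wording and the label's.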

By analyzing these different qualities of a label and correlating them with engagement, the authors found a few things.

Contextual labels, providing more information, did not increase user engagement. While veracity labels, stating whether something was true or false, did increase engagement, that only happened when these labels were misplaced. “If Twitter placed a label on a tweet incorrectly, then engagement went up,” Papakyriakopoulos said.

At the same time, a correctly placed label did seem to change the nature of the replies, he said, in a way that primed the topic for discussion. “There weren’t more replies, but they were actually discussing the topic at hand,” Papakyriakopoulos said.

The group also evaluated whether the strength of the label’s rebuttal affected engagement. Stronger rebuttals — ones where the label went beyond just saying a claim was disputed and instead offered an outlet for people to learn more or offered what the truth was — were associated with less toxic replies.

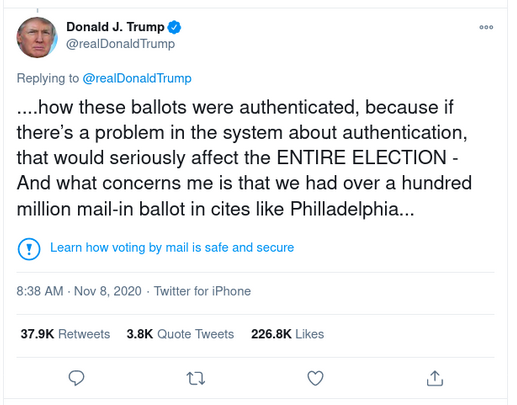

The below tweet, with a label clearly stating that voting by mail is safe and secure and offering a link to where people can learn more about it, is an example of a strong rebuttal.

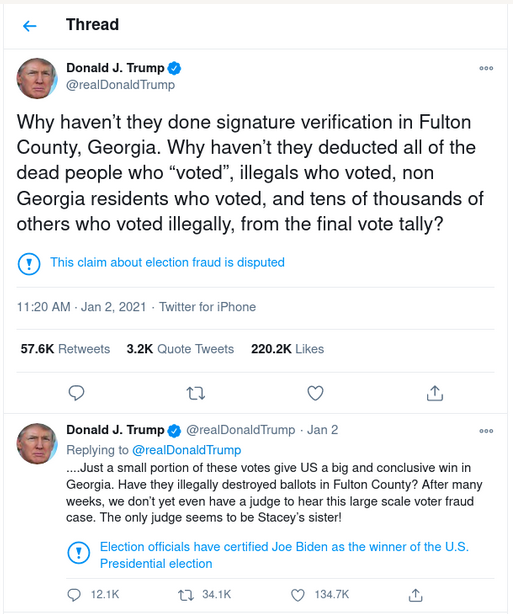

The below tweet, with a loose “This claim about election fraud is disputed” label, is a weak rebuttal. At the same time, the label in the subsequent tweet is strong: It clearly refutes Trump’s claim that he won the election.

Stronger rebuttals increased engagement from both sides of the political spectrum. The nature of the support didn’t change, however: Perhaps predictably, conservatives were more likely to support Trump’s claims, and liberals were more vocal in opposing them.

Was there a special “Trump effect” to these results? The findings suggest no. “Although it was Trump and I expected to see much more polarization — especially because we heard this narrative of fake news and tech platforms violating free speech — we did not find that the labels actually had this backlash,” Papakyriakopoulos said.

What does all this mean for content moderation on social media platforms, or at least on Twitter?

“Perfect moderation doesn’t exist,” Papakyriakopoulos said. To him, moderation is about getting people to discuss something constructively, especially since misinformation is always going to find a way to be present. So, he said, platforms have to decide: “How can you make people discuss [information] instead of polarizing them further?”

Twitter and other social media platforms need to think of content moderation not only as removing bad information, but also in terms of how users are present on platforms and how their opinions are shaped in real time.

It would be helpful, Papakyriakopoulos said, if Twitter and other platforms were more forthcoming about how their labeling process worked. “If they can provide more information about how they moderate content and how they did it historically, that would be really helpful,” he said. “These can be things that everybody — researchers, the platform itself, or even other platforms — can learn from.”