When newsroom budgets shrink, one of the line items executives find easiest to cut is newsroom training: the investment of time and resources in making your journalists better.

This isn’t a new complaint. Check out this study from 20 years ago:

U.S. journalists say a lack of training is their No. 1 source of job dissatisfaction, ahead of pay and benefits, a comprehensive national survey reveals. What’s more, the news executives they work for admit they should provide more training for their employees, but say time and insufficient budget are the main reasons they don’t…[The findings] point to a gap in perception on training; while most bosses give their news organizations A or B grades when it comes to training, half of their staffers give them grades of C, D or F.

I suspect pay and benefits have overtaken training as a complaint since 2002. Still, training budgets have done nothing but shrink in most newsrooms. And to convince your bosses that the investment is worthwhile, you'll want to offer as much evidence as you can that your work will benefit on the other side.

A new paper in Journalism Practice — by the University of Oregon’s Hollie Smith and David M. Markowitz and the University of Connecticut’s Christine Gilbert — tries to address that issue. They look at one specific genre of training: improving the science knowledge of reporters who write about politics. Here’s the abstract (all emphases mine):

This project analyzed the impact of a 2.5-day science training boot camp for political reporters on their use of scientific sources in published reporting. Results showed that immediately following the boot camp, most survey respondents indicated they would try to incorporate more scientific material into future stories. We used both automated text analysis and human-coded analysis to examine if actual changes in reporting behavior occurred.

Automated text analysis revealed that while journalists did not use more explanatory language overall in the 6 months following the training, they wrote with greater certainty and less tentativeness in their published articles compared to before the training. The more targeted content analysis of articles revealed that journalists had modest increases for including scientific material overall, and peer-reviewed studies and scientists’ quotes in particular.

We discuss the implications of these findings for science training, journalism, and reporting.

And there are indeed implications! How many COVID-19 stories, heavy with science-related issues, have been covered by reporters whose usual beat is politics or government? Many of them did an excellent job, of course. But we've seen in climate change coverage that when reporters aren't confident about their science knowledge, it's easy for them to fall back on a familiar framing from politics stories: One side says x, the other side says y.

That framing might be okay when the story’s about whether your town should renovate the middle school. But it’s a real problem when “one side” is 99% of climate scientists and “the other side” is ExxonMobil-funded misinformation. From the paper:

The majority of journalists today are not specialists in any particular area — over 75% of journalists in the traditional media workforce have a college degree in arts and humanities. When it comes to science journalism, Sachsman, Simon, and Myer Valentia (2008) found that only 3% of journalists have an undergraduate degree in science…Questions remain about the benefits of science training for journalists, especially in the post-specialist era of mainstream journalism and the continual evolution of the new media landscape. In studies examining how formal science education plays into science knowledge and coverage, scholars have found that while a formal education is important, informal on-the-job education is more important for most journalists. This tension between formal education and on-the-job training brings to light important questions when evaluating professional development trainings that happen once journalists are already on the job.

Not every newsroom can have its own Ed Yong, though, so offering some form of science training to non-science reporters is a popular response. This study looks at training offered by SciLine, a project of the esteemed American Association for the Advancement of Science whose "singular mission" is "enhancing the amount and quality of scientific evidence in news stories." Specifically, it looks at a free 2019 boot camp on Science Essentials for Political Reporters, offered over 2.5 days in Des Moines. (Political reporters like to hang out in Iowa a few months before the start of leap years, for some reason.) Here's SciLine's description:

Our August 2019 boot camp taught 29 political reporters from local, regional, and national news outlets across 18 states. The nonpartisan, policy-neutral curriculum was developed by SciLine over several months, based on research and interviews with political analysts, veteran journalists, and policy experts. Classroom sessions were taught by faculty from eight universities and covered: climate change basics; energy fundamentals; water quality; agriculture and the environment; the social and economic impacts of immigration; and the domestic impacts of trade policies.

Sessions on the “climate-energy nexus” and the “water-agriculture nexus” served integrative roles, a dinner keynote covered the science of surveys and polling, and a lunchtime dialogue focused on tactics for issue-based reporting on the campaign trail.

To complement coursework, SciLine organized three field trips to sites where classroom content could be observed in practice: a working corn, soy, and cattle farm; a wind turbine technician training site; and a research farm studying nutrient pollution. On the final evening of the boot camp, SciLine hosted a free, public event in the planetarium at the Science Center of Iowa featuring a discussion with three state climatologists, moderated by PBS NewsHour science correspondent Miles O'Brien.

So this was top-notch training from a top-notch source, given to a group of journalists who were in a strong position to take advantage of it. (In the likely event that you weren't one of the 29, SciLine wrote up summaries of the teaching.) Did it have an impact?

The researchers’ first measure was simple: asking reporters after the training whether they would try to incorporate more science into their stories. This was, as you would expect, not a hard thing for people to say yes to. (61.5% said they “definitely” would, 27% said they “probably” would, and 11.5% said they “might or might not.” No one was evil enough to tell SciLine “no, I will not try to add more science to my stories, suckers, I was only here for the free tour of a wind turbine technician training site.”)

Their other two measures, more meaningfully, were based on a content analysis of all the stories these political reporters produced over the next six months, a period that included both the 2020 Iowa caucuses and the earliest news about what would become COVID-19. They also gathered six months of their pre-training output as a baseline for comparison: 1,840 news stories in all. Researchers looked at how confident their reporting was, linguistically, after the training:

We examine how journalists internalized the trainings as revealed through their reporting on science topics. Prior evidence suggests that rates of causal terms (e.g., because, affect), certainty (e.g., absolute, definite, every), and tentativeness (e.g., guess, hope, unlikely) are indicators of verbal confidence, and expressions of confidence have positive downstream effects for how writers appraise targets. We expect that compared to articles written before the SciLine training, articles written after the SciLine training will contain more verbal confidence (e.g., more causal terms, more certainty, less tentativeness) since the trainings specifically focused on improving science literacy and comfort with science topics.

That analysis was done algorithmically, by text-analysis programs. They also had humans examine the sourcing the reporters used in these stories:

The second level used human-coded analysis to examine any specific changes in source use in published stories. In studies that examined journalistic content pre- and post-training, most have found modest results. Becker and Vlad (2006) found targeted training about public health issues did not produce any large-scale changes in coverage, but journalists did cite expert sources more often. Schiffrin and Behrman (2011) noted marginal improvement across eight scales in articles after a training in Sub-Saharan Africa. Beam, Spratt, and Lockett John (2015) found reporters who participated in a weeklong program at the Dart Center for Journalism and Trauma were more likely to use different sources after training and were more likely to humanize the experiences of trauma.

Given previous research, we expect that relative to before the training, journalists will use more scientific material and sources in their reporting.

So how’d they do?

The automated text analysis was a mixed bag. It found no notable change in how much reporters used causal language (because, affect) after the training. But it did find that their writing on science-related issues increased in certainty and decreased in tentativeness — as one might expect from people who have more confidence in their ability to write about scientific topics.

The impact on sourcing was also clear:

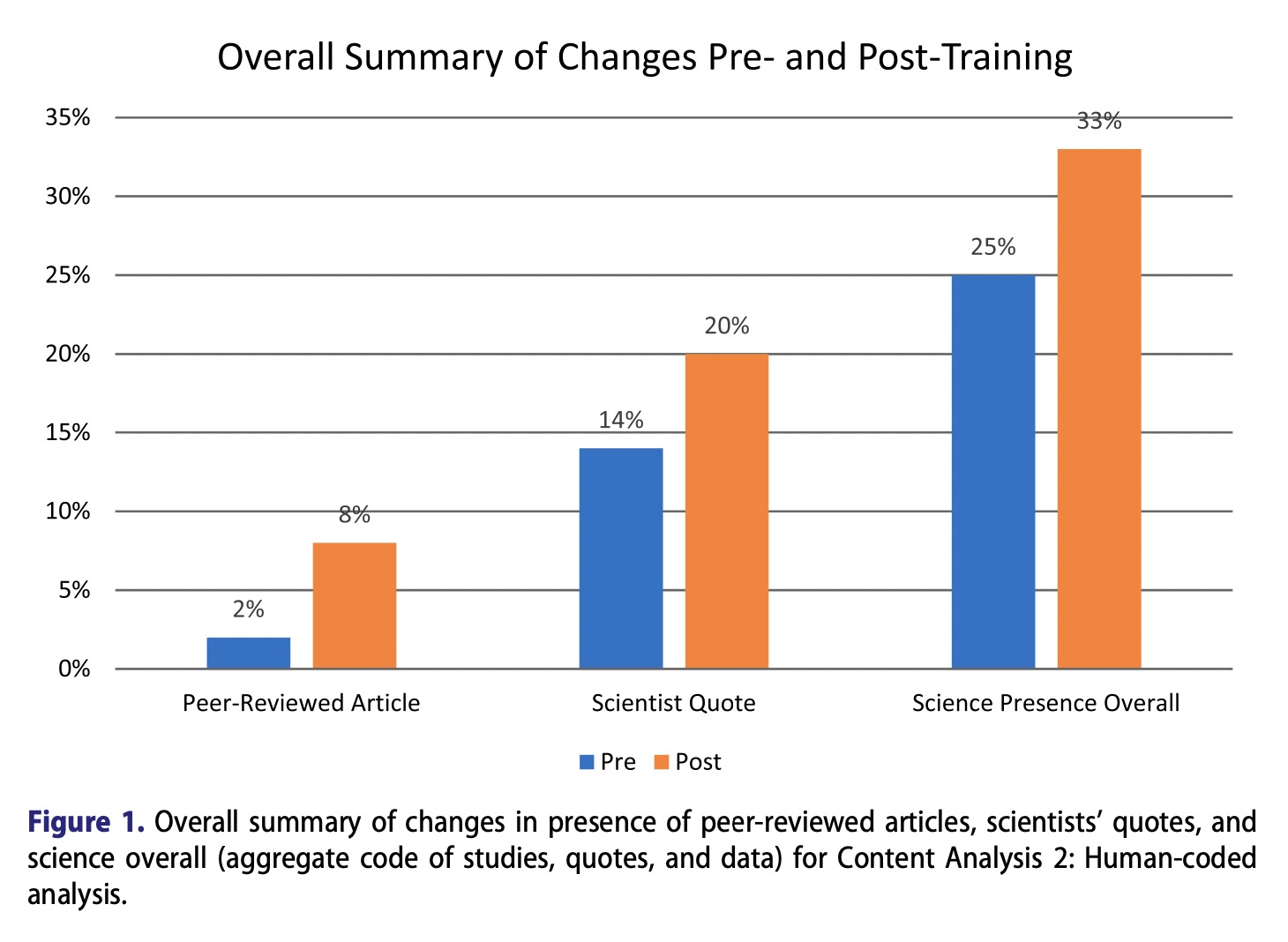

Articles had science present (aggregate code of appearance of scientists' quotes, peer-reviewed articles, or scientific data) in 25% of articles before the training and 33% after the training. More specifically, articles featured a quote or paraphrase from a scientist in 14% of articles before the training and 20% after the training.

Articles referenced a peer-reviewed scientific publication in 2% of articles before the training and 8% of articles after the training.

So it’s fair to say that this training had a real, detectable influence on these reporters’ work. They were more likely to seek out a scientist for an interview and to quote high-quality research. Those are good things. Of course, plenty of questions remain. Will that shift last over time? Are those reporters still quoting more scientists today, two-plus years later?

And was that return on investment good enough for however much money SciLine spent on those 2.5 days in Iowa? That's for funders to determine for themselves, I suppose.

One thing that marks this training as pre-COVID is that it took place in a physical location, with all the attendant costs (travel, hotels, food, all those little branded notepads at each seat). It’s much easier today to imagine a version of this happening entirely on Zoom — meaningfully reducing the cost, massively increasing the reach, and substantially decreasing the quality. If more journalistic training is destined to occur in a rectangle on a laptop screen, every element of the cost-benefit analysis is up for re-evaluation.

But it’s nice to have, at least in this one old-school form of training, evidence of actual impact.

The authors close with a call for more research:

We argue that understanding how the constellation of training, content, and effects are connected in our current media ecosystem can shape journalism and journalism training in the future. This important line of work deserves much more scholarly attention, as we are in a moment where institutions of both science and journalism are politicized in the current social context.