It takes a village for some things, but it doesn’t necessarily take a village-wide rating of a news article to increase a reader’s trust in the same piece.

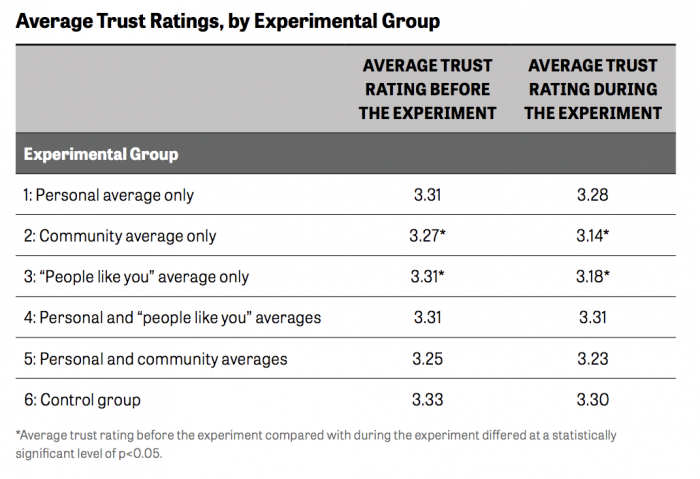

Using the same setup as a previous study that found the identification of the news organization in a link doesn’t increase trust either, researchers with Gallup and the Knight Foundation tested how users responded to average trust ratings for various news outlets. Those ratings were determined by a) the user personally, b) the community of users in the study, c) “people like you” based on demographics, and/or d) any two of the above. And of course, a control group. (Disclosure: Nieman Lab has received funding from Knight in the past.) News flash: It didn’t help.

“Opinion-based metrics that convey the general impressions of the public seem to drive confidence downward,” the researchers concluded. But they also noted “it seems that the more people know, the more skeptical they become.” Which should be a good thing, right? Right? (Bueller?)

Here’s what those ratings looked like on the testing platform:

The study involved nearly 12,000 users, who rated at least one article during the experiment in the spring. Many people felt comfortable rating around three stars:

The findings do suggest that the personal, community, and “people like you” ratings could affect an individual’s trust rating. People who saw only their own average gave out higher trust ratings than those in other experimental groups. But seeing the ratings from others without seeing their own had a negative effect on the scores users gave.

The experimental platform’s media spectrum has its own peculiarities, as my colleague Shan Wang mentioned in our previous writeup of the sister study: It places Media Matters, the “progressive research and information center dedicated to…correcting conservative misinformation in the U.S. media,” as the liberal equivalent to 100PercentFedUp, which describes itself in the site’s About section as “two moms inspired by the life of Andrew Breitbart…exposing the lies of the left & MSM propagandists.” But trust in the right-wing end of the spectrum did increase when users were shown the “people like you” average, while trust in the left end actually dropped.

“Overall, we hypothesize that showing others’ opinions, on average, through the community or ‘people like you’ feature in isolation creates cognitive dissonance that results in a user choosing to have a lower level of trust in a news article or outlet,” the researchers wrote.