After Spotify’s Joe Rogan Experience released a widely derided episode that made covid vaccines sound as safe as Russian roulette (with a guest who argued the vaccinated were victims of “mass formation psychosis,” a thing that does not exist), there was pushback from many corners.

A group of doctors and scientists said Spotify enabled Rogan “to damage public trust in scientific research and sow doubt in the credibility of data-driven guidance offered by medical professionals.” Rocker Neil Young famously took his music off the service, saying, “They can have Rogan or Young. Not both.” Others with Spotify deals — Roxane Gay, Ava DuVernay, Brené Brown — ended or paused them. Even the White House got involved.

But one key source of resistance was internal — both from employees in general and from one of Spotify’s other podcasts, Science Vs. And it appears that Spotify has made sufficient tentative progress on limiting the spread of misinformation to mollify at least one group’s concerns.

First, a little backstory. Science Vs began on Australian public broadcasting in 2015, moved to Gimlet Media the following year, and was acquired with the rest of Gimlet by Spotify in 2019. Given its pledge to “blow up your firmly held opinions and replace them with science” and “to find out what’s fact, what’s not, and what’s somewhere in between,” Joe Rogan’s whole vibe around the pandemic seemed to be a mismatch.

So on January 31, host/executive producer Wendy Zukerman and editor Blythe Terrell announced a protest: They would make no new episodes of the show “until Spotify implements stronger methods to prevent the spread of misinformation on the platform” — with the exception of episodes “intended to counteract misinformation being spread on Spotify.”

Science Vs editor @blytheterrell and I just sent this email to the CEO of Spotify. pic.twitter.com/aAmZnkA1uU

— Wendy Zukerman (@wendyzuk) January 31, 2022

Since then, aside from a few rebroadcast episodes, Science Vs episodes have focused squarely on Spotify-based misinformation: tackling Rogan’s vaccine takes, Rogan’s thoughts on trans kids, and how other platforms battle misinformation.

But on Friday, a new episode dropped that indicated things were changing. (All emphases are mine; I’ve put a fuller version of the podcast transcript at the end of this article.)

And today, some news: Spotify told us that they are improving things. They’re using some tools to prevent misinformation spreading, and one of those tools is “restricting content’s discoverability” — which should mean that if a podcast like, I don’t know, the Schmoe Schmogen Experience blathers on about how dangerous the SCHMOVID vaccines are, when the best science says something different, Spotify would restrict and limit how that episode could be discovered, making it harder for people to find.

That’s a potentially significant change — one that’s been effective on other platforms and one that might help manage Spotify’s $200 million-plus Joe Rogan problem.

Amid the Rogan vaccine blowback, Spotify CEO Daniel Ek apologized for the platform’s opaque policies and published its Platform Rules for the first time. Those rules outline a set of verboten activities, like advocating violence against a group, election manipulation, porn, and “content that promotes dangerous false or dangerous deceptive medical information that may cause offline harm or poses a direct threat to public health.”

The potential penalties for violating those rules?

What happens to rule breakers?

We take these decisions seriously and keep context in mind when making them. Breaking the rules may result in the violative content being removed from Spotify. Repeated or egregious violations may result in accounts being suspended and/or terminated.

So content can be removed, and accounts can be suspended or canceled.

But what about questionable content that doesn’t get removed? After all, the original Joe Rogan vaccine episode that started all this is still on the platform, right where it’s been since the day it was published. Is there any status between “removed” and “just a normal piece of Spotify content”?

For other platforms, that status typically involves downranking the content in their algorithms. If a given piece of content would normally reach n people and it’s flagged as misleading or dangerous (but not so much as to merit removal altogether), you instead show it to only (0.8)*n people, or (0.2)*n, or whatever fraction you favor. For example, Facebook’s News Feed algorithms reduce the reach of what it calls “borderline content”: “where distribution declines as content gets more sensational, people are disincentivized from creating provocative content that is as close to the line as possible.” (That is, except when someone shifts the algorithm into reverse and pumps the nasty stuff out more vigorously.) TikTok does it for “unsubstantiated claims” that fact-checkers can’t confirm. Search engines like Google and DuckDuckGo do it for search results from sources like Russian state media. In 2019, YouTube began “reducing recommendations of borderline content and content that could misinform users in harmful ways,” and the spread of misinformation declined in significant ways.
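To make the arithmetic concrete, here is a minimal sketch of that kind of downranking. Everything in it is an assumption for illustration: the `Item` class, the flag labels, and the multipliers are hypothetical, and no platform mentioned here publishes its actual values.

```python
from dataclasses import dataclass


@dataclass
class Item:
    title: str
    base_reach: int     # n: the number of people the item would normally reach
    flag: str = "ok"    # hypothetical labels: "ok", "borderline", "remove"


# Hypothetical multipliers, chosen only to mirror the (0.2)*n example.
REACH_MULTIPLIER = {"ok": 1.0, "borderline": 0.2, "remove": 0.0}


def adjusted_reach(item: Item) -> int:
    """Scale the item's normal reach n by the multiplier for its flag."""
    return round(item.base_reach * REACH_MULTIPLIER[item.flag])


def rank(items: list[Item]) -> list[Item]:
    """Order items by adjusted reach, so flagged items sink without vanishing."""
    return sorted(items, key=adjusted_reach, reverse=True)


if __name__ == "__main__":
    feed = [
        Item("popular but flagged", base_reach=1000, flag="borderline"),
        Item("ordinary", base_reach=500),
    ]
    for item in rank(feed):
        print(item.title, adjusted_reach(item))
```

The flagged item stays available, but its effective reach drops below that of a less popular, unflagged one. That is the whole idea of a status between "removed" and "normal."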

The strategy is hardly perfect — you still have to figure out what to downrank! — but it’s a reasonable response to material that pushes the line but doesn’t cross it. That Spotify is willing to restrict “content’s discoverability” is a valuable tool in its toolbox when it comes to misinformation.

There was no public announcement of a new Spotify misinformation policy, so I asked the company if this was, in fact, a change. I got back this statement; judge for yourself:

At Spotify, our goal is to strike a balance between respecting creator expression and the diverse listening preferences of our users while minimizing the risk of offline harm.

There are a variety of actions that we can take, in line with our Platform Rules, to accomplish this goal, including removing content, restricting content’s discoverability, restricting the ability of content to be monetized, and/or applying content advisory labels.

When content comes close to the line but does not meet the threshold of removal under our Platform Rules, we may take steps to restrict and limit its reach.

(Update: Spotify got back to me Monday evening, saying that these policies about reach restriction are not new, but that the company is being more open about it “as part of our broader effort to increase transparency.” It’s not clear, then, why Science Vs would see this as any improvement in limiting the spread of misinformation.)

That’s confirmation — but how formal of a change is it? After all, Spotify’s canonical Platform Rules don’t make any mention of these new restrictions on reach.

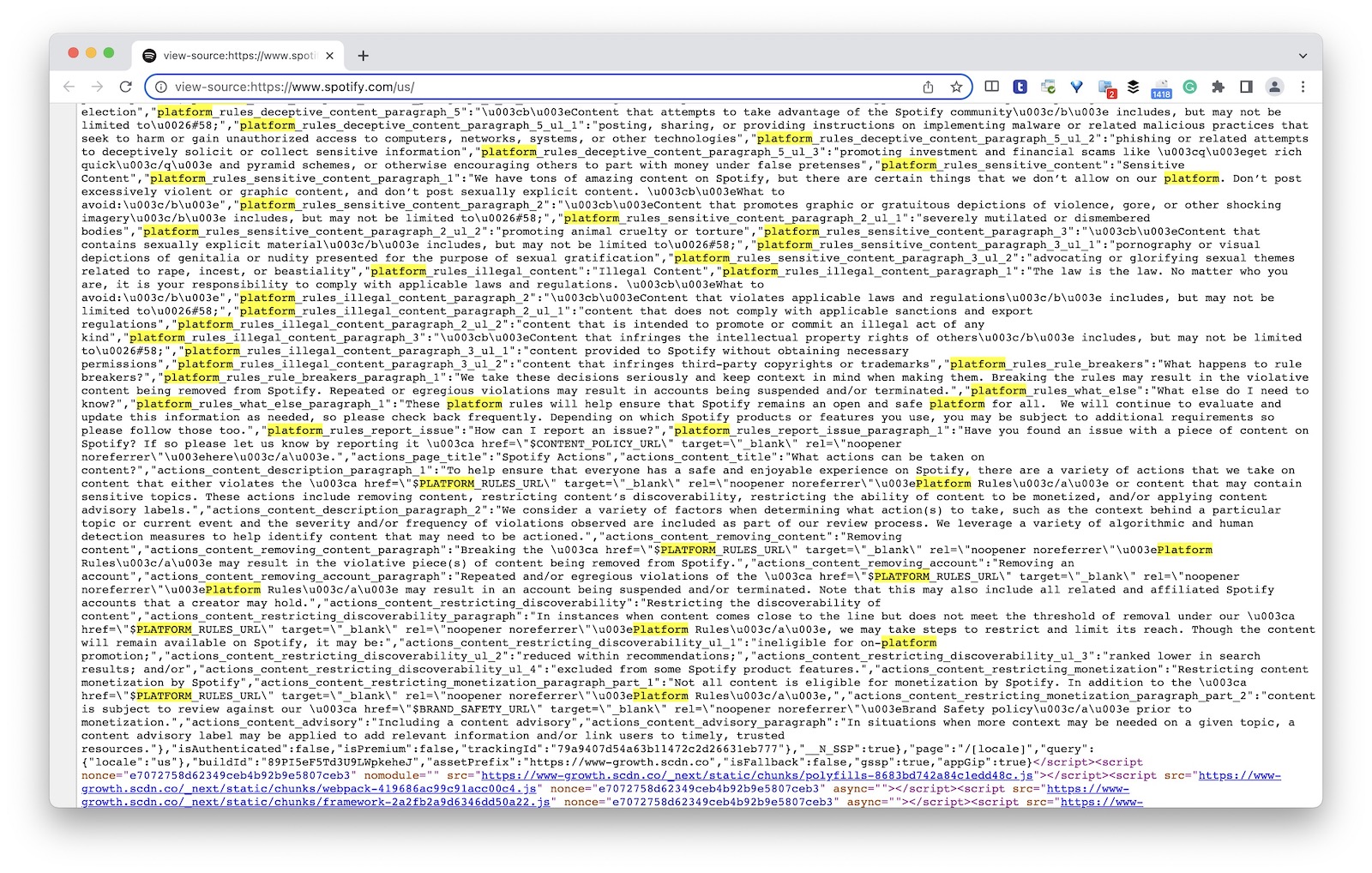

But ah, that’s not the only version of the company’s Platform Rules. The underlying code of Spotify’s homepage also includes the Platform Rules, encoded in JavaScript near the bottom of the page. And…it’s a different set of rules!

(Update: Monday evening, Spotify told me that the embedded-in-JavaScript rules on the homepage actually represent two different documents, the Platform Rules and Spotify Actions, which live on their own webpage. Fair enough. I’d note, though, that that Spotify Actions page has never been indexed by Google, never been crawled by the Wayback Machine, and never been tweeted, ever. The backlink-checker Ahrefs says no one has linked to the page from any other page, ever.)

If you View Source on spotify.com/us, you can see the code version near the bottom of the HTML:

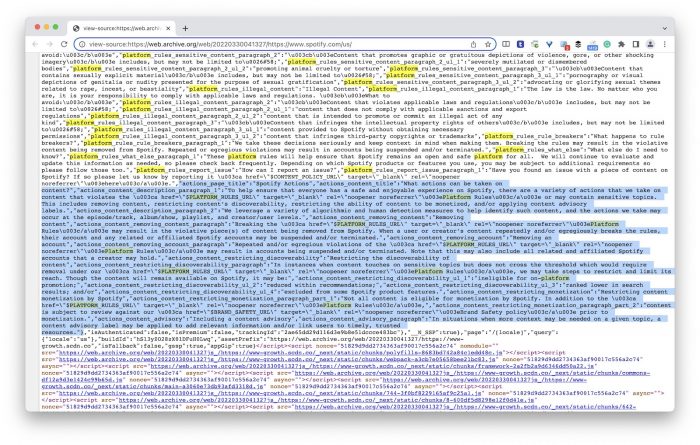

This is what that JavaScript looked like on March 29, via the indispensable Wayback Machine:

And here’s what it looked like the next day, March 30 — the entire highlighted section is new:

If you strip it out of JavaScript, here’s the new section that lays out how Spotify might downrank problematic content:

Spotify Actions

What actions can be taken on content?

To help ensure that everyone has a safe and enjoyable experience on Spotify, there are a variety of actions that we take on content that violates the Platform Rules or may contain sensitive topics. This includes removing content, restricting content’s discoverability, restricting the ability of content to be monetized, and/or applying content advisory labels.

We leverage a variety of algorithmic and human detection measures to help identify such content, and the actions we take may occur at the episode/track, album/show, playlist, and creator/user levels.

Removing content

Breaking the Platform Rules may result in the violative piece(s) of content being removed from Spotify. When a user or creator’s content repeatedly and/or egregiously breaks the rules, their account and any related or affiliated Spotify accounts may be suspended and/or terminated.

Removing an account

Repeated and/or egregious violations of the Platform Rules may result in accounts being suspended and/or terminated. Note that this may also include all related and affiliated Spotify accounts that a creator may hold.

Restricting the discoverability of content

In instances when content touches on sensitive topics but does not cross the threshold which would require removal under our Platform Rules, we may take steps to restrict and limit its reach. Though the content will remain available on Spotify, it may be:

- ineligible for on-platform promotion;

- reduced within recommendations;

- ranked lower in search results; and/or

- excluded from some Spotify product features.

Restricting content monetization by Spotify

Not all content is eligible for monetization by Spotify. In addition to the Platform Rules, content is subject to review against our Brand Safety policy prior to monetization.

Including a content advisory

In situations when more context may be needed on a given topic, a content advisory label may be applied to add relevant information and/or link users to timely, trusted resources.

It is not clear to me why these additions aren’t included in the public Platform Rules — the ones Spotify only published after saying that “admittedly, we haven’t been transparent around the policies that guide our content” — but they seem perfectly sensible to me, in line with what other platforms have done. (I’ve asked Spotify about the JavaScript-only thing.)
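For anyone who wants to repeat the before-and-after comparison of the two Wayback Machine snapshots, a minimal sketch follows. It assumes you have already saved the text of each snapshot yourself; the helper name `added_lines` and the toy strings are illustrative stand-ins, not the actual page source.

```python
import difflib


def added_lines(before: str, after: str) -> list[str]:
    """Return the lines that a line-by-line diff marks as added in `after`."""
    diff = difflib.ndiff(before.splitlines(), after.splitlines())
    # ndiff prefixes added lines with "+ "; strip that two-character marker.
    return [line[2:] for line in diff if line.startswith("+ ")]


if __name__ == "__main__":
    # Toy stand-ins for the March 29 and March 30 snapshots.
    march_29 = "Platform Rules\nWhat happens to rule breakers?"
    march_30 = march_29 + "\nSpotify Actions\nRestricting the discoverability of content"
    for line in added_lines(march_29, march_30):
        print(line)
```

On real minified JavaScript, everything may sit on a single line, so you would likely need to split or pretty-print the source before a line-based diff tells you much.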

So if Spotify is going to downrank some close-to-the-line material, is Rogan’s vaccine episode one of the pieces of content having its discoverability and reach limited?

I asked Spotify this directly, and I’ll update here if they respond. (Update: Monday evening, Spotify told me that “as a general practice, we don’t comment on specific content actions.”)

But some quick searching suggests that yes, it is, at least in search results.

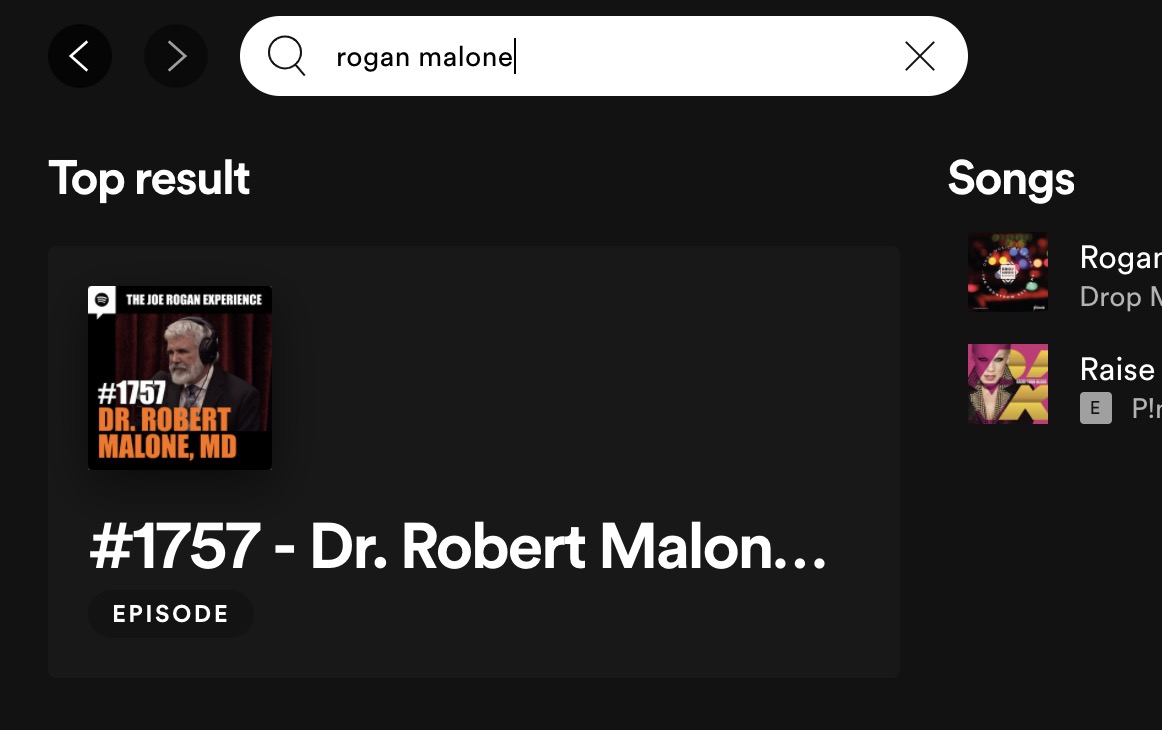

A Spotify search for “rogan malone” — the episode’s guest was named Robert Malone — still turned up the vaccine episode as the top result. That suggests it hasn’t been nuked out of search results entirely.

But an episode search for “rogan vaccines” — which one might expect to return Joe Rogan episodes about vaccines! — instead returned dozens of episodes of other podcasts talking about Joe Rogan episodes about vaccines. (A healthy chunk of which are of the “Joe Rogan is right, vaccines will murder your children as they sleep” variety, by the way. The Science Vs episode debunking the Rogan vaccines episode shows up as the 15th result for me.)

Fifteen of the top 100 results are clips from Rogan’s show, but there were no actual full Rogan episodes. And of the dozen-or-so Rogan clips that I found, none were from the Robert Malone episode, which strongly suggests that it and other vaccine-related Rogan episodes have been downranked in search results.

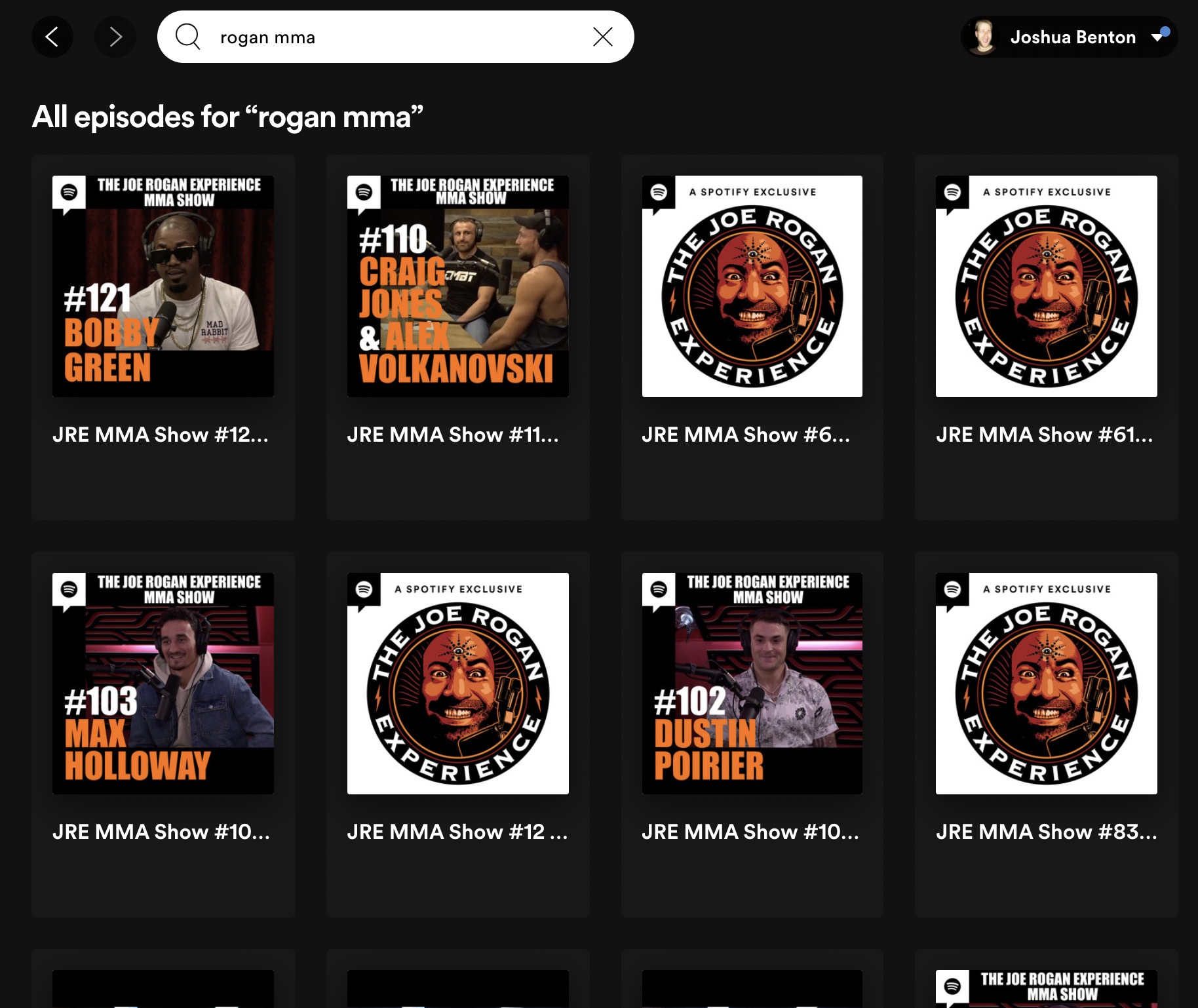

Compare that to a search for another topic Rogan discusses a lot — “rogan mma” — where the top episode results were nearly all Rogan. Search results No. 1 through 50 were all official Rogan episodes (and I just stopped counting at No. 50 — there were more).

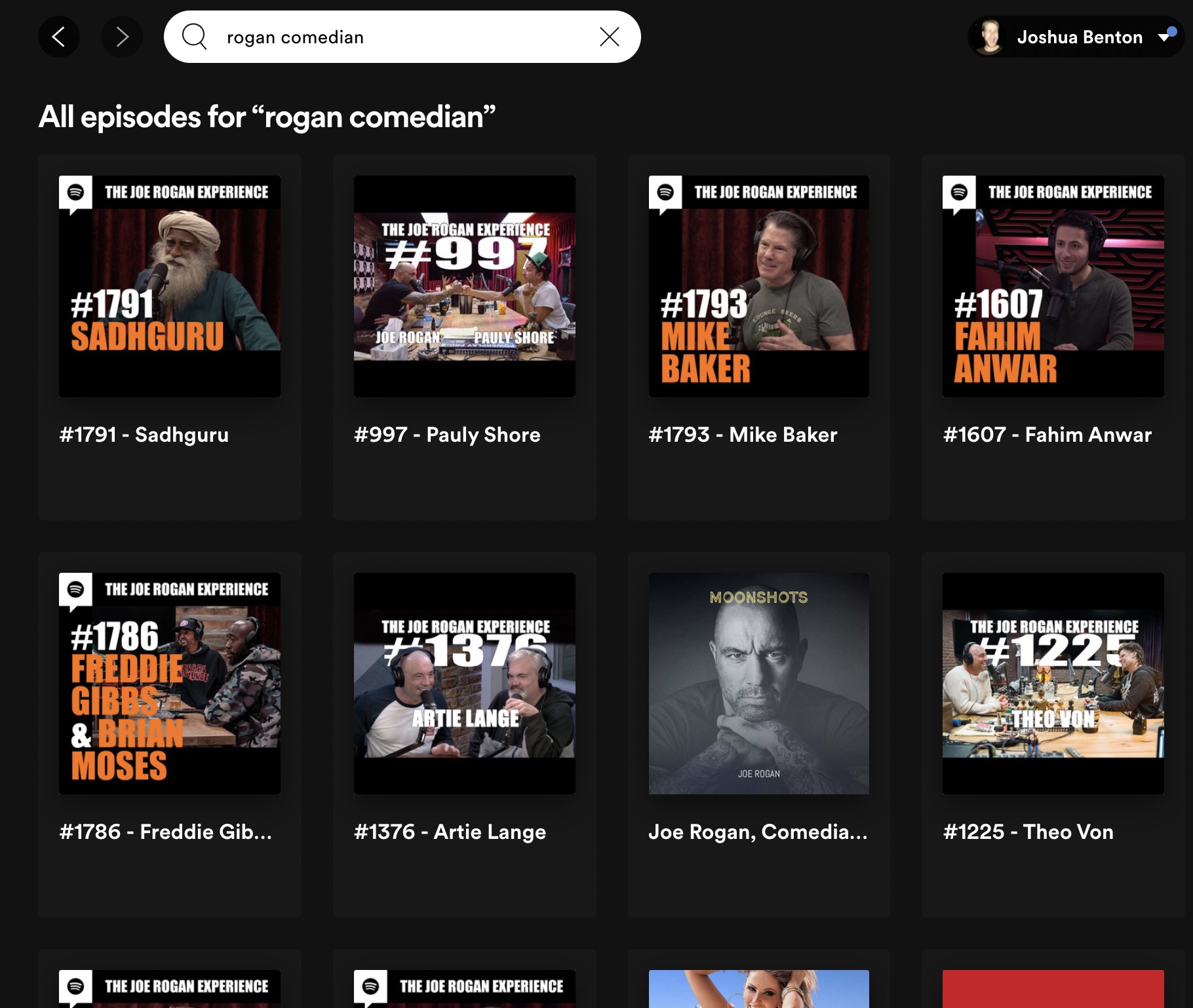

Or try “rogan comedian” — tons of Rogan episodes in the results. (I did not expect to see “yogi, mystic and visionary” Sadhguru coming in at No. 1 for a “rogan comedian” search, I admit. Then again, he believes “cooked food consumed during a lunar eclipse depletes the human body’s pranic energies,” so he’s sort of on brand.)

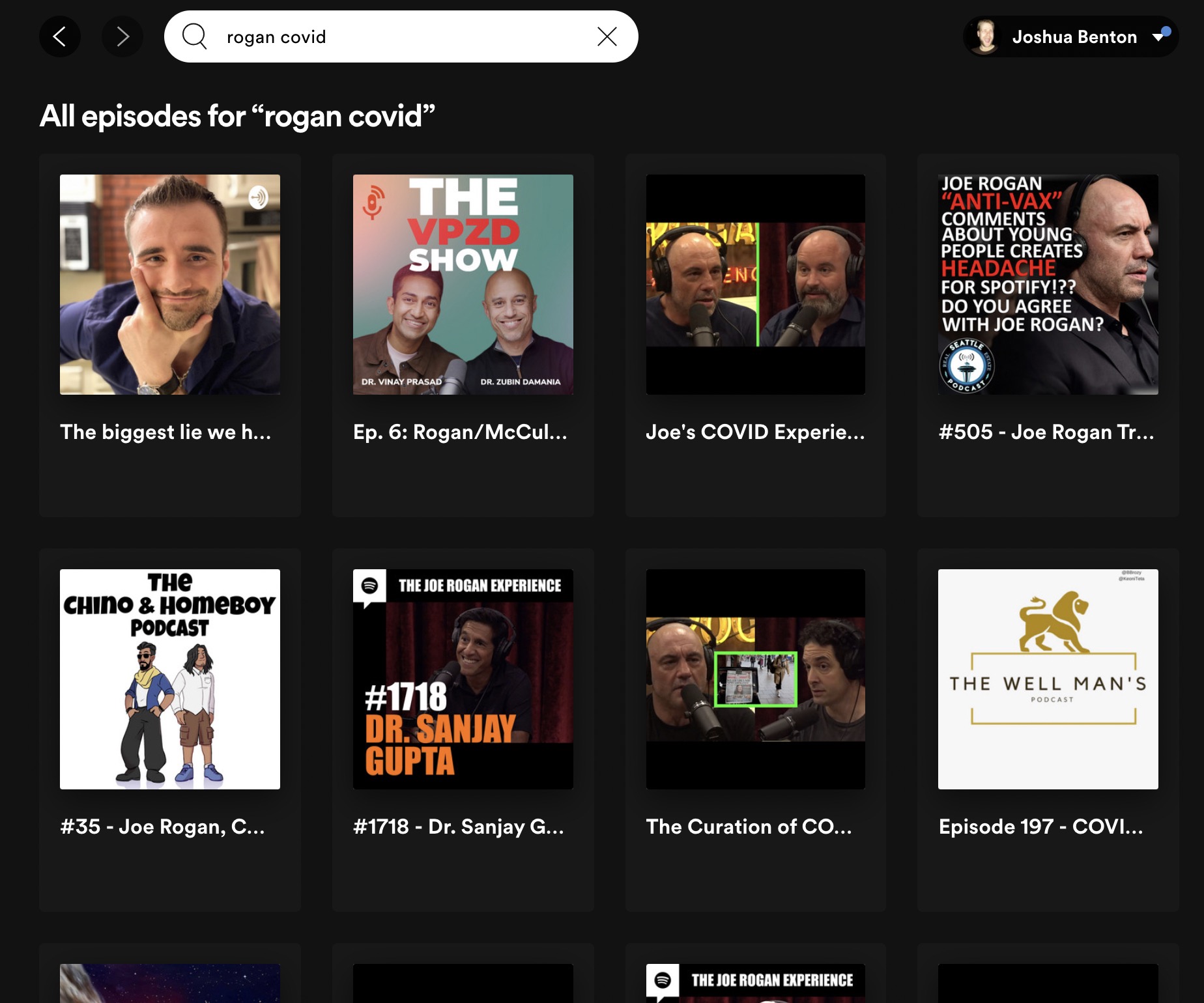

Or try a more direct comparison: “rogan covid.” There are some Rogan episodes in here, but lots of other stuff, too. The Malone episode is nowhere to be found. Here are all the Rogan full-episode interviewees who show up in the top 100 results, in order: Sanjay Gupta, Peter Attia, Rhonda Patrick, Michael Osterholm, Dave Chappelle, Ben Shapiro. That’s it. (The top Rogan clip returned is from Alex Berenson, unfortunately.)

There is roughly zero chance that Spotify’s search algorithms, absent any external constraints, wouldn’t consider this incredibly popular and controversial episode one of the top results for “rogan vaccines” or “rogan covid.” That strongly suggests Spotify is following the lead of other platforms and reducing misinformation’s reach and discoverability.
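The comparison across queries above amounts to counting how many of the top results are full episodes of a given show. Here is a rough sketch of that tally, assuming the results have been collected by hand into a list of dictionaries. The `show` and `kind` fields and the sample rows are hypothetical; they are neither Spotify’s data format nor my actual search results.

```python
def show_share(results: list[dict], show: str, top_n: int = 100) -> float:
    """Fraction of the top_n results that are full episodes of `show`."""
    top = results[:top_n]
    if not top:
        return 0.0
    hits = sum(1 for r in top if r["show"] == show and r["kind"] == "episode")
    return hits / len(top)


if __name__ == "__main__":
    # Made-up rows, only to show the shape of the input.
    sample = [
        {"show": "Show A", "kind": "episode"},
        {"show": "Show A", "kind": "clip"},
        {"show": "Show B", "kind": "episode"},
        {"show": "Show A", "kind": "episode"},
    ]
    print(show_share(sample, "Show A"))
```

A share near zero for one topic, next to a share near one for another topic from the same show, is the pattern that points toward downranking. It is circumstantial evidence, not proof.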

To be clear: The main way an enormously popular podcast like Joe Rogan’s gets consumed is via the millions of people who subscribe to it. It’s not through search requests or recommendation engines. In that context, limiting reach can’t be nearly as effective as on more algo-driven platforms like Facebook or YouTube.

Still, it’s a good move on Spotify’s part — one worth being public about.

Here’s a longer transcript of the relevant portions of the Science Vs episode mentioned above.

Hey mates: today on the show, an update about our push to get Spotify to do more to stop misinformation spreading on the platform.

As a little recap, a few months ago, Joe Rogan dropped this episode on his podcast about the COVID vaccines, and made them look really scary, didn’t line up with the science. And his podcast is exclusive to Spotify, who were also our employers. A lot of people got up in arms about all of this, and it frustrated us too. So Science Vs editor Blythe Terrell and I sent a letter to the CEO of Spotify, saying basically, that the company needs to do more here. And we said that until they step up, we’re only going to make new episodes that highlight misinformation on Spotify. Since then, we tackled misinfo on Joe Rogan’s show about kids who are transgender. And of course, we looked at the COVID vaccines…

We’ve also been talking to our colleagues at Spotify, spreading the nerd word about what they should do here, like how they need to change their algorithm to make it harder for misinformation to spread.

And today, some news: Spotify told us that they are improving things. They’re using some tools to prevent misinformation spreading, and one of those tools is “restricting content’s discoverability” — which should mean that if a podcast like, I don’t know, the Schmoe Schmogen Experience blathers on about how dangerous the SCHMOVID vaccines are, when the best science says something different, Spotify would restrict and limit how that episode could be discovered, making it harder for people to find.

All in all, this is not perfect, but it sure is a start. And so, next week, on Thursday, we have one final banger of an episode about some misinformation being spread on Spotify. And then, after that, we’ll be back with our regular show.