What if we created a “Chatroulette for news” that generated content we tended to disagree with, but was also targeted toward our regular levels and sources of news consumption? How hard would it be?

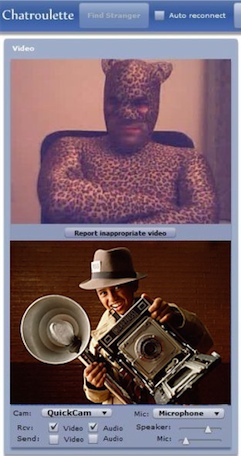

For the last 24 hours or so, the Twitter-sphere has been buzzing over Daniel Vydra’s “serendipity maker,” an off-the-cuff Python hack that draws on the APIs of the Guardian, the New York Times, and the Australian Broadcasting Corp. to create a series of “news roulettes.” In short, hit a button and you’ll be taken to a totally random New York Times, Guardian, or ABC News story. As the Guardian noted on its technology blog, “the idea came out of a joking remark by Chris Thorpe yesterday in a Guardian presentation by Clay Shirky that what we really need is a ‘Chatroulette for news’”:

> After all, we do have loads of interesting content: but the trouble with the way that one tends to trawl the net, and especially newspapers, simply puts paid to the sort of serendipitous discovery of news that the paper form enables by its juxtaposition of possibly unrelated — but potentially important — subjects.
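
The roulette mechanic itself is simple. As a minimal sketch (not Vydra’s actual code), assume each outlet’s API has already been queried and the results cached as lists of story URLs; the `SOURCES` dictionary and its example.com URLs below are hypothetical placeholders, not real API responses. A spin picks an outlet at random, then a story from that outlet.

```python
import random

# Hypothetical cache of story URLs per outlet. A real tool would fill these
# lists from each publisher's content API; this sketch makes no network calls.
SOURCES = {
    "guardian": ["https://example.com/guardian/a", "https://example.com/guardian/b"],
    "nytimes": ["https://example.com/nytimes/a"],
    "abc": ["https://example.com/abc/a", "https://example.com/abc/b"],
}


def spin(sources, rng=random):
    """Return (outlet, url): a random outlet, then a random story from it."""
    # Skip outlets with no cached stories so a spin never lands on nothing.
    outlets = sorted(name for name, stories in sources.items() if stories)
    if not outlets:
        raise ValueError("no stories to choose from")
    outlet = rng.choice(outlets)
    return outlet, rng.choice(sources[outlet])


if __name__ == "__main__":
    print(spin(SOURCES))
```

Choosing the outlet first gives each outlet equal odds regardless of how many stories it contributes; drawing uniformly from one pooled list would instead favor whichever outlet publishes the most.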

The problem the Guardian describes is the much-debated theoretical issue of “news serendipity,” summarized by Mathew Ingram. In essence, the argument goes that while there is more news on the web, our perspectives on it are narrower, because we browse only the sites we already agree with, already like, or already care about. With newspapers, however, we “stumbled upon” (yes, pun intended) stories we didn’t care about, or didn’t agree with, in the physical act of turning the page.

As Ryan Sholin has been pointing out all morning on Twitter, the idea of a “serendipity maker” for the web isn’t entirely new. And I’m not sure the current news roulettes really solve the problem that concerns journalism theorists. So I’d like to know: What would it take to build a news serendipity maker that automatically knew and “factored in” your news consumption patterns, and then showed you web content that was the opposite of what you normally consumed?

For example, I’m naturally hostile to the Tea Party as a political organization. What if someone created a roulette that automatically served up news content sympathetic to the Tea Party? And what if it were keyed even more closely to my news consumption patterns? If the roulette knew I was a regular New York Times reader, for instance, it could pick Tea Party-friendly articles written by the Times or by outlets like it, rather than, say, random angry blog posts.
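
One way to start answering the question is a sketch like the one below. It assumes, hypothetically, that every article already carries a stance score between -1 and 1 on the topic at hand (for example, how sympathetic it is to the Tea Party); producing that score reliably is the genuinely hard part and is not attempted here. The `Article` type and the function names are illustrative, not an existing library. Given a reader’s history, the sketch computes how often they read each outlet and their average stance, keeps only candidate articles on the other side of that average, and then picks one at random, weighted toward outlets the reader already visits.

```python
import random
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Article:
    url: str
    outlet: str
    stance: float  # hypothetical score in [-1, 1]; computing it is the open problem


def profile(history):
    """Summarize a reading history: share of reads per outlet and mean stance."""
    if not history:
        raise ValueError("need at least one article in the reading history")
    counts = Counter(a.outlet for a in history)
    total = len(history)
    outlet_share = {outlet: n / total for outlet, n in counts.items()}
    mean_stance = sum(a.stance for a in history) / total
    return outlet_share, mean_stance


def contrarian_pick(history, candidates, rng=random):
    """Pick an article leaning against the reader, favoring familiar outlets."""
    outlet_share, mean_stance = profile(history)
    # Keep only articles strictly on the other side of the reader's average stance.
    opposed = [a for a in candidates if a.stance * mean_stance < 0]
    if not opposed:
        return None
    # Weight by how often the reader visits each outlet; the small floor keeps
    # unfamiliar outlets possible but unlikely.
    weights = [outlet_share.get(a.outlet, 0.0) + 0.01 for a in opposed]
    return rng.choices(opposed, weights=weights, k=1)[0]
```

Matching “outlets like the Times,” rather than the Times alone, would need some measure of similarity between outlets; this sketch rewards only exact outlet matches, with a small floor weight so that unfamiliar sources are rare but still possible.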

I think this is interesting because it would basically hack the entire logic of the web. The beauty of the web is that it can steer you toward ever more finely grained content that is exactly what you want to read; ideally, it knows what you want even before you do. This machine would do the reverse. In other words, it might be the opposite of what Mark S. Luckie called “a Pandora for news.” And it would address a very real social problem (or at least a highly theorized one): what Cass Sunstein calls the drift toward a “Daily Me” or “Daily We,” in which we read only news content we already agree with, and our political culture suffers as a result.

So this is a shout-out to news hackers, developers, and others: How hard would it be to build a machine like this? How would you do it? Would you do it? I’d like to write a longer post on this based on your replies, so feel free to chime in in the comments section, or email me directly with your thoughts, and I’ll include them in my next post.