The report divides discussion of data into three sections, starting at the very core of what data is, with questions around the idea of “quantification.” For instance, here’s Stray on measurement error:

Data doesn’t speak for itself, and the second section of Stray’s book, centered around data analysis or interpretation, runs through real-world policy examples, such as whether imposing earlier closing time for bars in New South Wales actually reduced drunken nighttime assaults. Stray warns of reading significance in data when it’s just pure coincidence, or not properly eliminating other possible explanations for why some data looks the way it does.In practice, nothing can be measured perfectly.

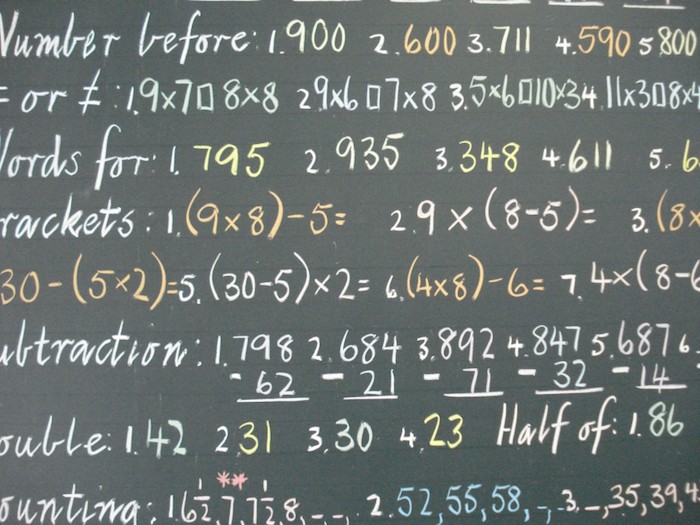

A random sample has a margin of error due to sampling, but every quantification has error for one reason or another. The length of a table cannot be measured much finer than the tick marks on whatever ruler you use, and the ruler itself was created with finite precision. Every physical sensor has noise, limited resolution, calibration problems, and other unaccounted variations. Humans are never completely consistent in their categorizations, and the world is filled with special cases. And I’ve never seen a database that didn’t have a certain fraction of corrupted or missing or simply nonsensical entries, the result of glitches in increasingly complex data-generation workflows.

Error creeps in, and the data never quite matches the description on the box. Anyone who works with data has had this beaten into them by experience.

Even simple counts break down when you have to count a lot of things. We’ve all sensed that large population figures are somewhat fictitious. Are there really 536,348 people in your hometown, as the number on the “Welcome To …” sign suggests?

The method of competing hypotheses need not involve data at all. You can apply the idea of ruling out hypotheses to any type of reporting work, using any combination of data and non-data sources. The concept of triangulation in the social sciences captures the idea that a true hypothesis should be supported by many different kinds of evidence, including qualitative evidence and theoretical arguments. That too is a classic idea…

What you see in the data cannot contradict what you see in the street, so you always need to look in the street. The conclusions from your data work should be supported by non-data work, just as you would not want to rely on a single source in any journalism work.

The story you run is the story that survives your best attempts to discredit it.

In the third section, Stray explores data visualization, starting with how humans perceive and make sense of data that’s presented to them (“We can’t possibly study the communication of data without studying the human perception of quantities”). Communicating uncertainty around data is important, and so is making rigorously supported predictions:

Yet most journalists think little about accountability for their predictions, or the predictions they repeat. How many pundits throw out statements about what Congress will or won’t do? How many financial reporters repeat analysts’ guesses without ever checking which analysts are most often right? The future is very hard to know, but standards of journalistic accuracy apply to descriptions of the future at least as much as they apply to descriptions of the present, if not more so. In the case of predictions it’s especially important to be clear about uncertainty, about the limitations of what can be known.

The book was released at a launch event Thursday night featuring panelists Meredith Broussard of New York University, Mark Hansen of the David and Helen Gurley Brown Institute for Media Innovation at Columbia University, and Scott Klein of ProPublica. You can read The Curious Journalist’s Guide to Data in its entirety here.

Talking journalism + data @jonathanstray presents his Curious Journalists' Guide To Data https://t.co/JY0DHwKM3J pic.twitter.com/FajYe2LH2S

— Tow Center (@TowCenter) March 24, 2016