When it comes to emerging technologies, there’s a lot to keep newsrooms busy. Virtual reality has promise, and so do chatbots. Ditto for connected cars and connected homes. In fact, the challenge for most newsrooms isn’t figuring out potential new platforms for experimentation but rather determining which new technologies are worth prioritizing.

At The New York Times, anticipating and preparing for the future is a job that falls to Story[X], the newsroom-based research and development group it launched last May. A “rebirth” of the R&D Lab the Times launched in 2006, Story[X] was created to look beyond current product cycles to how the Times can get ahead of developments in new technology. (The previous R&D Lab’s Alexis Lloyd and Matt Boggie landed just fine, taking high positions at Axios.)

Heading up the unit, which will total six people when fully staffed, is Marc Lavallee, the Times’ former head of interactive news. Lavallee said that while Story[X] will always ask how practical new technologies are, the group is more likely to err on the side of the speculative than the safe — a mental model that isn’t always easy to adopt in newsrooms full of people trained to ask pointed questions like: “Is this even real?”

While that skeptical lens is helpful, “we want to have a sense that, even if we feel like something isn’t ready today, if we feel like there’s some sense of inevitability, we want to be thinking about it and experimenting,” Lavallee said. A lot of these developments will be outside the Times’ control, but if Story[X] does its job properly, there will be “fewer fire drills induced by some keynote from some tech company that changes the game and requires us to play catch-up.”

In a wide-ranging conversation, Lavallee and I spoke about how the Times evaluates new technologies, which areas he believes are most ripe for experimentation, and what technology news organizations aren’t paying enough attention to. Here’s an edited and condensed transcript of our conversation.

Ricardo Bilton: What’s the overall mandate of Story[X]? How is it different from that of the earlier R&D Lab?

Lavallee: I think about the mandate in terms of who we’re here to serve. I want to help empower three customers in the organization: There’s the newsroom as one customer, there’s the technology and product design group, and there’s the advertising side. For the newsroom, we’re focused on understanding what we’re trying to accomplish in our reporting over the next couple of years, both from a production perspective (things that are a little more behind the scenes) and from a consumption perspective.

The other angle is how we look a little bit further out. This gets a little speculative, and I think we’re trying to stay out of the prediction game, but I think we can say that at some point, whether from Magic Leap or someone else, we’ll have connected glasses or some sort of mixed reality kind of thing on the market. We have to think about the ways we can use the experiments we’re doing in 2017 to help inform how we would take advantage of those new things. So we’re thinking about the future and tying it back to the present, so that our current experiments actually help us build that kind of strategy to be poised for some of those things that feel increasingly inevitable. The bulk of what we’re trying to do is support that storytelling mission in the newsroom.

Bilton: One big part of the Story[X] approach has been to spend each quarter focusing on one big idea. What have you looked at so far?

Lavallee: In the fourth quarter last year, we spent a lot of time looking at computer vision, because it’s at the intersection of a lot of opportunities and rapid evolution in the machine learning space, but it’s also a key component of augmented reality. We did some stuff there to try to understand how what’s commercially available could help with our daily needs, but also around tentpole coverage like the Olympics and elections.

Bilton: What’s your approach for looking at each of these things? Is it problem-first or technology-first?

Lavallee: We don’t want to just look at emerging tech and say, “How do we bring computer vision to The New York Times?” Instead, we’re trying to start with the problems we see inside and outside the building, and ideally not just today’s problems but the ones we can anticipate for tomorrow. Then it’s figuring out how various emerging technologies can be solutions to those problems. It’s that problem-solution fit that we’re trying to figure out how to apply with a bit of a future-looking lens, so that we’re not creating these cool things in a vacuum that may never be useful.

Bilton: That’s always been one of my issues with the industry’s approach to innovation, which often seems done just for the sake of innovation. Everyone can be a little bit guilty of it. How do you avoid that temptation to innovate without grounding projects in real problems that the newsroom is facing?

Lavallee: There’s always the danger of getting really caught up in your solution and pushing it all the way to readers before looking back on it and realizing it was kind of faddish. On the other hand, one thing that I’m focused on at the outset is making sure we don’t lean too practical, at least in how we’re thinking about things internally.

Bilton: Is there a good example of how you’ve used this approach so far?

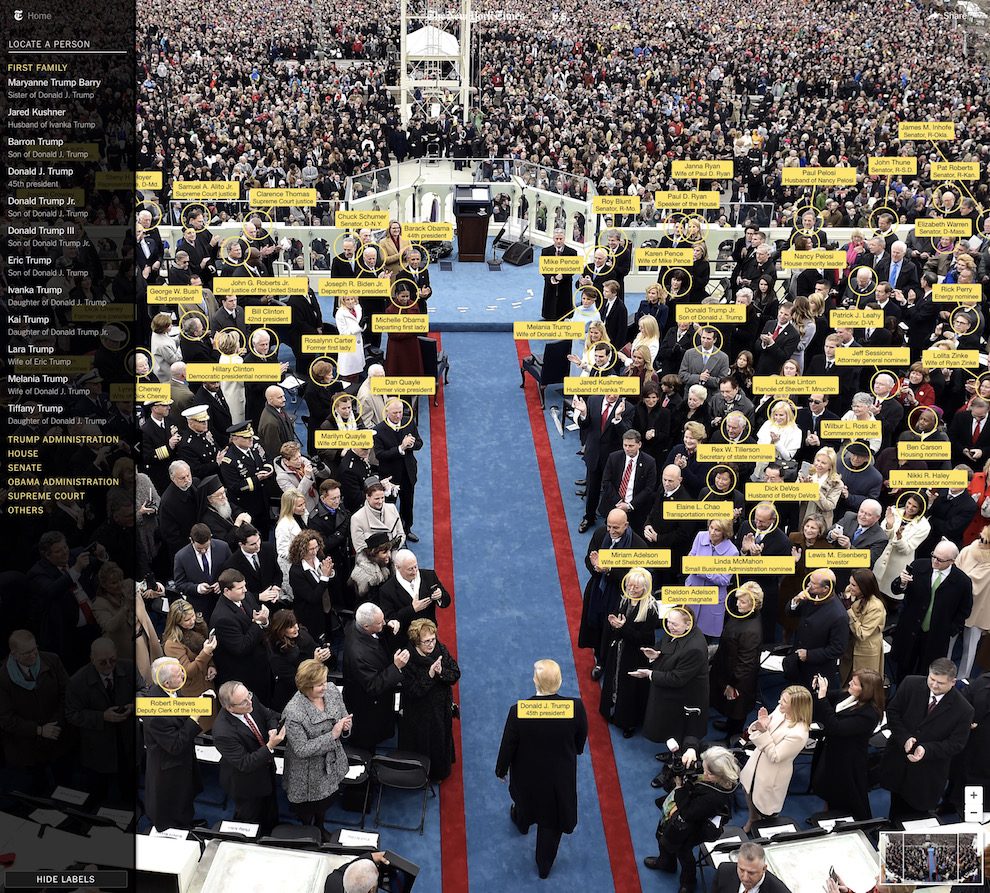

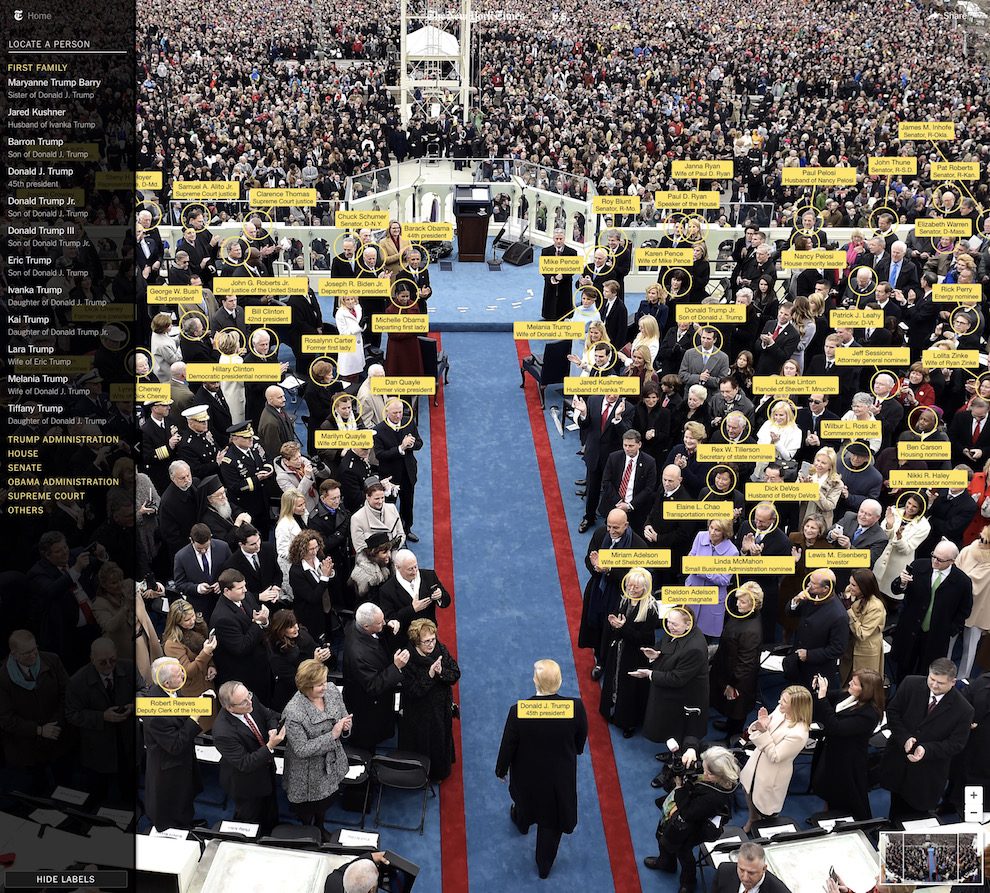

Lavallee: One idea we’ve gotten through the entire pipeline is this huge interactive photo of everyone at the presidential swearing-in ceremony. We tag all the people so you can see who is sitting where. For the past two cycles, that process has been entirely manual. Someone had the thankless job of drawing rectangles around people’s heads and someone else had the job of going around the D.C. bureau asking, “Who’s this old person?”

So this past January we built a model using Microsoft’s Computer Vision API, which we trained on photos of various members of Congress and other people we expected to be there. The idea was that it could do a first pass on the detection of faces, draw the rectangles, etc. Humans were still in the mix, but the software did the first pass and they were in more of a confirmational role. It saved us a lot of time.

It was important, because one of the ways that we’re looking at these emerging technologies is by finding things that we are already planning to do from a coverage perspective and can pull off even if the new piece doesn’t come through in the right way. We think of it as having a turbo button for being able to do it faster and better. We want to make it so that whatever we learn from these experiments actually finds its way into larger planning and strategy.

Bilton: Can you give me a sense of what areas you want to focus on? There are so many different things that the Times and others are pushing into — bots, connected cars, virtual reality, augmented reality. How do you think about what’s most important?

Lavallee: A big thing we consider is how much influence the Times can have on the evolution of a technology. But on the other hand, there are other things that are going to happen that we need to be able to respond to. The New York Times is not going to build a self-driving car, but if we see that technology as being inevitable, we should have a strategy for how The New York Times will fit into it.

In many cases, we’re starting with the consumer. What are the spaces of your life, say, three years out? What that comes down to is your home, your workplace, and when you’re on the go. We’re trying to envision what your day will look like in a couple of years and find new types of experiences you will have with The New York Times in those contexts. In other words, we start by anticipating user needs and walking back to the technologies.

Bilton: So what are the big platforms right now that you think have the most potential?

Lavallee: We’re going to spend a lot of time looking into connected home stuff that is nearer-term. It’s interesting to watch, with devices like the Amazon Echo and Google Home, how the whole skill discovery process is developing and if people are starting to use these devices in ways that are beyond the features that are listed on the box. Cooking is a common use case that’s kicked around — the idea that you could have an audio guide while you’re cooking a recipe. That’s something I think we can do a little bit of prototyping around.

It does feel to me that the notion of your home as a physical space will go from being relatively static to feeling the way software does, where it’s always updating with new features. I have a sense that we will hit a transition point in the next several years where our homes feel like things that evolve the same way that software evolves. A lot of the stuff will just happen in the background. What we’ll do in the third quarter of this year is try to figure out what aspects of the connected-home future we sense are inevitable, try to design some experiences that we think could exist in that world, then apply that reality filter based on actual evidence of what’s possible today.

Bilton: The Times has developed a number of VR projects over the past year, and that’s something where people see potential for news. The question is how far away are we from that potential being realized. Where’s the potential?

Lavallee: With VR, we’re doing a lot of experimenting around telling individual stories that bring readers to another place. There’s a rich vein of exploration to be done there. I don’t think we’ve even fully explored that. That’s a good place to be working while we wait to see what the adoption of the current and next generation of these devices is.

The reason why I’m fixated on whether there are non-linear stories for us in VR is that that, to me, feels like the thing that’s actually going to drive wider adoption. Social experiences and gaming to me feel like the way that we’ll see this become more of a mass experience, I think. And I’m not sure that there’s necessarily a thing for us to do there. We have to keep doing what we’re doing and wait for other parts of the ecosystem to flesh out.

I do think that there is a tremendous potential for us in the AR space. That’s where we can do things that are more utility-driven, which is where we’re seeing today’s pickup through, for example, being able to place a virtual IKEA couch in your living room to see if it would fit.

Bilton: We haven’t talked too much about advertising, which is also a part of the mandate at Story[X]. What are the potential innovations there?

Lavallee: There are a couple of ways we’re working through it. I’m of the opinion that the full scope of the potential for The New York Times in the 21st century is incredibly broad, because we have this brand flexibility. It’s something that is basically with you all day every day and helping guide every decision you make and being that trusted ally in your life.

We’re not going to do that alone. It does require a different kind of partnership with a bunch of different kinds of companies. The tech space is the easiest to find those kinds of opportunities. I would say the partnership with Samsung is the first of a genre of partnership that we’ll see much more of over the next couple of years, where neither of us would be able to do something like that 360 video of that scale alone. But together, we can each play our part in speeding the evolution of the technology and content in parallel, as opposed to waiting for one to happen and then doing the other.

Bilton: We’ve talked about a lot of different potential areas of innovation. Is there one that you don’t hear as much chatter about that you think has a lot of promise?

Lavallee: There’s a cluster of ideas that combine what’s happening in the quantified self movement and what’s happening in your brain at any point in time. That leads to the kind of brain-machine interface stuff that Facebook was demoing last month. They’re saying that within two years they’ll have a skull cap that will let you think at 100 words per minute.

I see that as technology that will let us understand how much attention you’re paying while reading or listening to something, what you retained, what you perk up at, and how the content experience can adapt and understand what kind of learner you are. I think there is tremendous potential to do that so the content is more tailored to your level of interest. That’s something that I’m not aware of media organizations diving into yet, but I think it’s a huge frontier for us. Over the next few years we’re going to be thinking a lot more about what’s going on inside readers’ heads.

Photo of Google Cardboard VR by Othree used under a Creative Commons license.