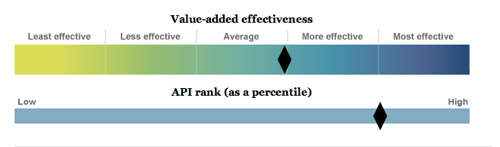

Not so long ago, a hefty investigative series from the Los Angeles Times might have lived its life in print, starting on a Monday and culminating with a big package in the Sunday paper. But the web creates the potential for long-form and in-depth work to not just live on online, but to do so in a more useful way than a print-only story could. That’s certainly the case for the Times’ “Grading the Teachers,” a series based on the “value-added” performance of individual teachers and schools. On the Times’ site, users can review the value-added scores of 6,000 3rd- through 5th-grade teachers, by name, in the Los Angeles Unified School District, as well as those of individual schools. The decision to run names of individual teachers and their performance was controversial.

The Times calculated the value-added scores from the 2002-2003 school year through 2008-2009 using standardized test data provided by the school district. The paper hired a researcher from RAND Corp. to run the analysis, though RAND itself was not involved. From there, in-house data expert and long-time reporter Doug Smith figured out how to present the information in a way that was usable for reporters and understandable to readers.

As might be expected, the interactive database has been a big traffic draw. Smith said that since the database went live, more than 150,000 unique visitors have checked it out. Some 50,000 visited right away, and now the Times is seeing about 4,000 users per day. And those users are engaged. So far the project has generated about 1.4 million page views, which works out to an average of more than nine pages per visitor. That’s sticky content: Parents want to compare their child’s teacher to the others in that grade, their school against the neighbor’s. (I checked out my elementary school alma mater, which boasts a score of, well, average.)

To try to be fair to teachers, the Times gave its subjects a chance to review the data on their page and respond before publication. But that’s not easy when you’re dealing with thousands of subjects, in a school district where email addresses aren’t standardized. An early story in the series directed interested teachers to a web page where they were asked to prove their identity with a birth date and a district email address to get their data early. About 2,000 teachers did so before the data went public. Another 300 submitted responses or comments on their pages.

“We moderate comments,” Smith said. “We didn’t have any problems. Most of them were immediately postable. The level of discourse remained pretty high.”

All in all, it’s one of those great journalism moments at the intersection of important news and reader interest. But that doesn’t make it profitable. Even with the impressive pageviews, the story was costly from the start and required serious resource investment on the part of the Times.

To help cushion the blow, the newspaper accepted a grant from the Hechinger Report, the education nonprofit news organization based at Columbia’s Teachers College. [Disclosure: Lab director Joshua Benton sits on Hechinger’s advisory board.] Aside from doing its own independent reporting, Hechinger also works with established news organizations to produce education stories for their own outlets. In the case of the Times, it was a $15,000 grant to help get the difficult data analysis work done.

I spoke with Richard Lee Colvin, editor of the Hechinger Report, about his decision to make the grant. Before Hechinger, Colvin covered education at the Times for seven years, and he was interested in helping the newspaper work with a professional statistician to score the 6,000 teachers using the “value-added” metric that was the basis for the series.

“[The L.A. Times] understood that was not something they had the capacity to do internally,” Colvin said. “They had already had conversations with this researcher, but they needed financial support to finish the project.” (Colvin wanted to be clear that he was not involved in the decision to run individual names of teachers on the Times’ site, just in analyzing the testing data.) In exchange for the grant, the L.A. Times allowed Hechinger to use some of its content and gave them access to the data analysis, which Colvin says could have future uses.

At The Hechinger Report, Colvin is experimenting with how the organization can best carry out its mission of supporting in-depth education coverage: producing content for the Hechinger website, placing its articles with partner news organizations, or providing direct subsidies, as in the L.A. Times series. It’s currently sponsoring a portion of the salary of a blogger at the nonprofit MinnPost whose beat includes education. “We’re very flexible in the ways we’re working with different organizations,” Colvin said. But, to clarify, he said, “we’re not a grant-making organization.”

As for the L.A. Times’ database, will the Times continue to update it every year? Smith says the district has not yet handed over the 2009-10 school year data, which isn’t a good sign for the Times. The district is battling with the union over whether to use value-added measurements in teacher evaluations, which could make it more difficult for the paper to get its hands on the data. “If we get it, we’ll release it,” Smith said.