One day fifteen long years ago, in 2004, some SEO consultants decided to have a contest to determine quién es más macho (who's the most macho) in the gaming-search-results game. As they put it: “Are you a Player or a Stayer?”

Everyone knows that Google has changed the way they rank sites. Now that the algorithm’s changed and everyone is competing again can you take a page to #1? More importantly, can you keep it there? Together with SearchGuild.com, we’ve put together a competition titled “SEO Challenge” to sort the Players from the Stayers.

DarkBlue.com is giving away an Apple Mini iPod™ and a Sony Flat Screen Monitor to any Webmaster or SEO who can take their page to #1 for the search term “TBA”. Get there any way you can to win a prize.

(Nothing timestamps a contest quite like an iPod mini as top prize.)

The idea was that at a set time the organizers (a company called DarkBlue) would announce a phrase that didn’t appear anywhere on the web. From that point and over the following few weeks, competitors would try their best to make one of their webpages the top Google search result for that phrase. To the winner went glory and the aforementioned iPod.

When the phrase was announced — “nigritude ultramarine,” a play on “DarkBlue” — swarms of SEO types filled the web with it and tried their own techniques to get their version to the top. As Search Engine Journal wrote at the time, the contest:

…is likely to bring out the best and the worst in optimization tactics. The black magic optimization techniques that are suspected in having sites banned from Google are likely to be exercised, along with tried and true optimization practices. Immediately after the contest was announced experts snapped up hyphenated domain names and began tweaking their text. Many experts were surprised to see how quickly ‘nigritude ultramarine’ made its way into the Google’s listings, with new listings appearing daily it is clear that the contest is well underway.

The experts will be at the mercy of Google and any new algorithms implemented over the course of the next two months. Experts will have to anticipate and update on a regular basis, to ensure that they are able to obtain and retain a strong listings.

But the winner of the SEO Challenge wasn’t a black-hat consultant or a stack of Macedonian children in a trench coat. It was a blogger. Anil Dash, who at the time worked at the blog company Six Apart, wasn’t a particular fan of SEO types, many of whom he considered “barely above spammers.” So he decided to put up a single blog post, headlined “Nigritude Ultramarine,” and asked his readers to link to it. Many did. And that combination (the Google cred Anil had already built up as an active blogger plus all the PageRank-boosting “votes” created by those links) was enough to beat the most dark-arts-savvy SEO consultants in the world.

A lot of bloggers saw it as a sweet victory. See, building up a reputation for doing good work online gets rewarded, as it should be! If Google is going to determine the single best result for a search, a bunch of spammy keyword-stuffed SEO tricks should lose out to an established writer, a known entity.

Meanwhile, a lot of SEO guys thought Anil’s win was unfair. The idea of the contest was to see whose SEO skills were the strongest, they argued, not who runs a popular-enough blog to game the results. Anil was taking advantage of his blogosphere fame, coasting on reputation without putting in the work. They saw it as high school: the popular crowd picking on the nerds.

That was the first time I ever encountered a phenomenon that, a year ago, was finally given a name: a data void. Microsoft’s Michael Golebiewski coined the term “to describe search engine queries that turn up little to no results, especially when the query is rather obscure, or not searched often.” Like, say, “nigritude ultramarine.”

Or, not that long ago, “sandy hook crisis actors.” Or “social justice warrior.” Or “notre dame fire muslims.”

Golebiewski (he works on Bing) and co-author danah boyd came out with a new report this week examining the topic of data voids and the dangers they pose for manipulation by those seeking to spread disinformation. (True fact: For a long time I assumed “data void” was a danah boyd phrase, almost certainly because they rhyme.) And in a certain sense, the issue isn’t that different from that contest back in 2004: When an algorithm has to suddenly determine what’s the best information about something, what signals should it favor? The ones coming from established authorities? Or technical tricks from those trying to manipulate results? And what changes when there are a lot of the latter and not many of the former?

The logic underpinning search engines is akin to a lesson from kindergarten: no question is a bad question. But what happens when innocuous questions produce very bad results for users?

Data voids are one such way that search users can be led into disinformation or manipulated content. These voids occur when obscure search queries have few results associated with them, making them ripe for exploitation by media manipulators with ideological, economic, or political agendas. Search engines aren’t simply grappling with media manipulators using search engine optimization techniques to get their website ranked highly or to get their videos recommended; they’re also struggling with conspiracy theorists, white nationalists, and a range of other extremist groups who see search algorithms as a tool for exposing people to problematic content.

In the report, released by Data & Society, Golebiewski and boyd identify five types of data voids ripe for abuse:

Breaking News: The production of problematic content optimized to terms that are suddenly spiking due to a breaking news situation; these voids will eventually be filled by legitimate news content, but are abused before such content exists.

Strategic New Terms: Manipulators create new terms and build a strategically optimized information ecosystem around them before amplifying those terms into the mainstream, often through news media, in order to introduce newcomers to problematic content and frames.

Outdated Terms: When terms go out of date, content creators stop producing content associated with these terms long before searchers stop seeking out content. This creates an opening for manipulators to produce content that exploits search engines’ dependence on freshness.

Fragmented Concepts: By breaking connections between related ideas and creating distinct clusters of information that refer to different political frames, manipulators can segment searchers into different information worlds.

Problematic Queries: Search results for disturbing or fraught terms that have historically returned problematic results continue to do so unless high quality content is introduced to contextualize or outrank such problematic content.

For journalists, each of these is important to be aware of, but the breaking-news variety is obviously the one where their role is most direct. Golebiewski and boyd highlight what happened after the 2017 mass shooting in Sutherland Springs, Texas:

Shortly after the announcement of the shooting in Sutherland Springs, a distributed network of people began coordinating on various forums in an effort to shape media coverage and search engine results. They were driven by a political agenda to influence public perception about this shooting. They first targeted Twitter and Reddit, knowing that search engines like Google and Bing elevate content from these sites when no other material is available. To increase the likelihood of visibility of their content on search engines, they tweeted and posted content that includes words and phrases related to the incident in the early moments before higher-authority content (news media) appeared.

At the same time, these manipulators attempted to influence journalists by using a series of “sock puppet” (inauthentic) accounts on Twitter to ask journalists about the shooter or drop hints to send journalists in the wrong direction. In this case, they tried to encourage journalists to consider whether the shooter might be associated with left-leaning groups by asking questions or pointing to misleading social media posts. While their primary goal was to influence news coverage, this tactic also helps waste journalists’ time.

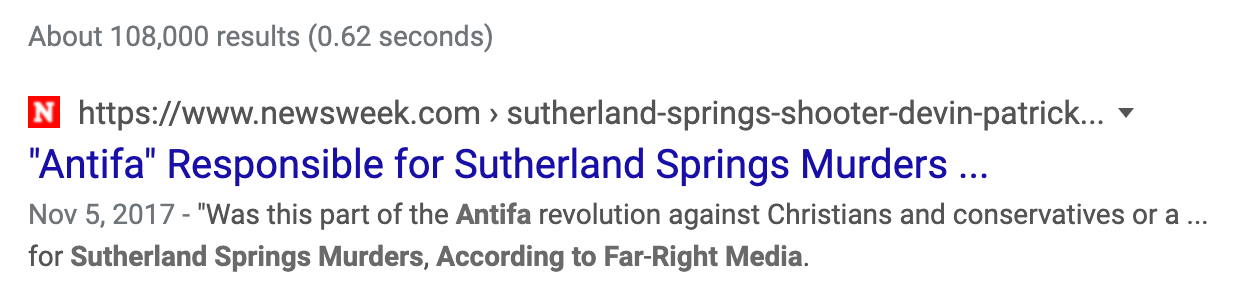

This coordinated activity was noticed by a Newsweek journalist who wrote a story about it (good!), which was then published with a headline that made things worse (bad!): “‘ANTIFA’ RESPONSIBLE FOR SUTHERLAND SPRINGS MURDERS, ACCORDING TO FAR-RIGHT MEDIA.”

Hopefully you can see the problems here. For one, it doesn’t correct the misinformation; it literally repeats it. Presumably, the headline writer thought “according to far-right media” was enough to indicate that this information was not to be trusted. But not all readers got that, especially those who might only glance at the headline while scrolling through social media. And, needless to say, if you’re conservative, hearing that “far-right media” is reporting something will likely increase its trustworthiness in your eyes.

For another, tech platforms often truncate long headlines, so even those weak words at the end were often chopped off. Here’s what that headline looks like in a Google search, to this day:

Yikes.

Interestingly, the story’s headline for social wasn’t as awkwardly structured (“Alex Jones claims the Texas church shooting was ‘part of the Antifa revolution against Christians’”), so things weren’t as bad on Twitter:

But other sites that syndicated the story, like Yahoo, didn’t get the metadata memo:

The language journalists use matters. And that’s true even if you’re better about framing your headline than Newsweek was. Golebiewski and boyd note that a significant moment in the rise of the “crisis actors” conspiracy theory after the Parkland school shooting was when CNN’s Anderson Cooper interviewed student David Hogg about the conspiracy theory.

This was intended to allow Hogg to deny the conspiracy theory, but it ended up breathing life into it. More news outlets — and news comedy shows — started using the term. And the more that the term was used in the media, the more people searched for it.

That’s how you end up with a Google Trends spike like this one:

yeah the google trends profile is pretty telling

little bump in Oct 2017 was the Las Vegas shooting, now it's grown up into the go-to catchphrase for whinging pic.twitter.com/fsLM7PhUzL

— Dr Sarah Taber (@SarahTaber_bww) February 24, 2018

But the Catch-22 is that the initial absence of legitimate news stories debunking the theory also gave it fuel:

When they searched, they found the conspiratorial content that had been staged over multiple years. Even though there was some content designed to debunk the conspiracy, conspiratorial content was highly ranked in web searches and in searches on platforms because it had been there for years and because the network of content surrounding it was highly optimized for search engines and recommender systems. With no major news coverage or other authentic sources using this term, the conspiratorial content came up first in nearly every search context until debunking videos started overtaking the results. But even debunking videos helped spread this particular conspiracy.

As of August 2019, searches for information about parents whose children were murdered in the Sandy Hook shooting returned conspiratorial content; the top hit for “Robbie Parker” on YouTube offers a video that claims he’s a crisis actor because he smiled at one point. The comments are filled with conspiratorial narratives. In response to all that has unfolded, some parents whose children were murdered have sued the most well-known conspiracy theorist; at least one parent died, in part because of the harassment experienced after their tragic loss.

That frustrating pattern sets you up pretty well for the authors’ conclusion: There’s no easy way to fix the problem of data voids.

While Bing and Google can — and must — work to identify and remedy data voids, many of the vulnerabilities lie at the heart of what these search engines do. Bing and Google do not produce new websites; they bring to the surface content that other people produce and publish elsewhere on third party platforms. Without new content being created, there are certain data voids that cannot be easily cleaned up. The type of data void also matters: Search engines are able to address issues with problematic queries and forgotten terms much more easily than strategic terms or breaking news. Fragmented concepts raise a myriad of more challenging questions for search companies…

Factually inaccurate information, hyper-partisan content, scams, conspiracy theories, hate speech, and other forms of problematic content are harmful to individuals and societies, but media manipulators have a higher incentive to create such content than those who seek to combat it. Search engine creators want to provide high quality, relevant, informative, and useful information to their users, but they face an arms race with media manipulators. While this report focuses on the dynamics occurring in English on these sites, these problems are likely to be of even greater concern in non-English settings where there is even less data…

How can search engines more effectively detect vulnerabilities in search? Who is going to provide viable content for search engine users seeking information where the current quality of content ranges from mediocre to atrocious? What role should search engines play when someone searches for a data void? And who is responsible for addressing the vulnerabilities at the intersection of different websites, services, and user practices? Even as technology companies increasingly seek solutions to this challenge, the practices of media manipulators reveal that this is not a problem to “solve.” Instead, data voids are a security vulnerability that must be systematically, intentionally, and thoughtfully managed.

There’s lots more interesting stuff in the paper, including a section on manipulating recommendation engines (*cough* YouTube) that I didn’t touch on here. It’s all worth a read, not least because these behaviors can have an impact for a looooong time. Fifteen years later, Anil Dash’s post is still the No. 1 result in Google for “nigritude ultramarine.”