The growing stream of reporting on and data about fake news, misinformation, partisan content, and news literacy is hard to keep up with. This weekly roundup offers the highlights of what you might have missed.

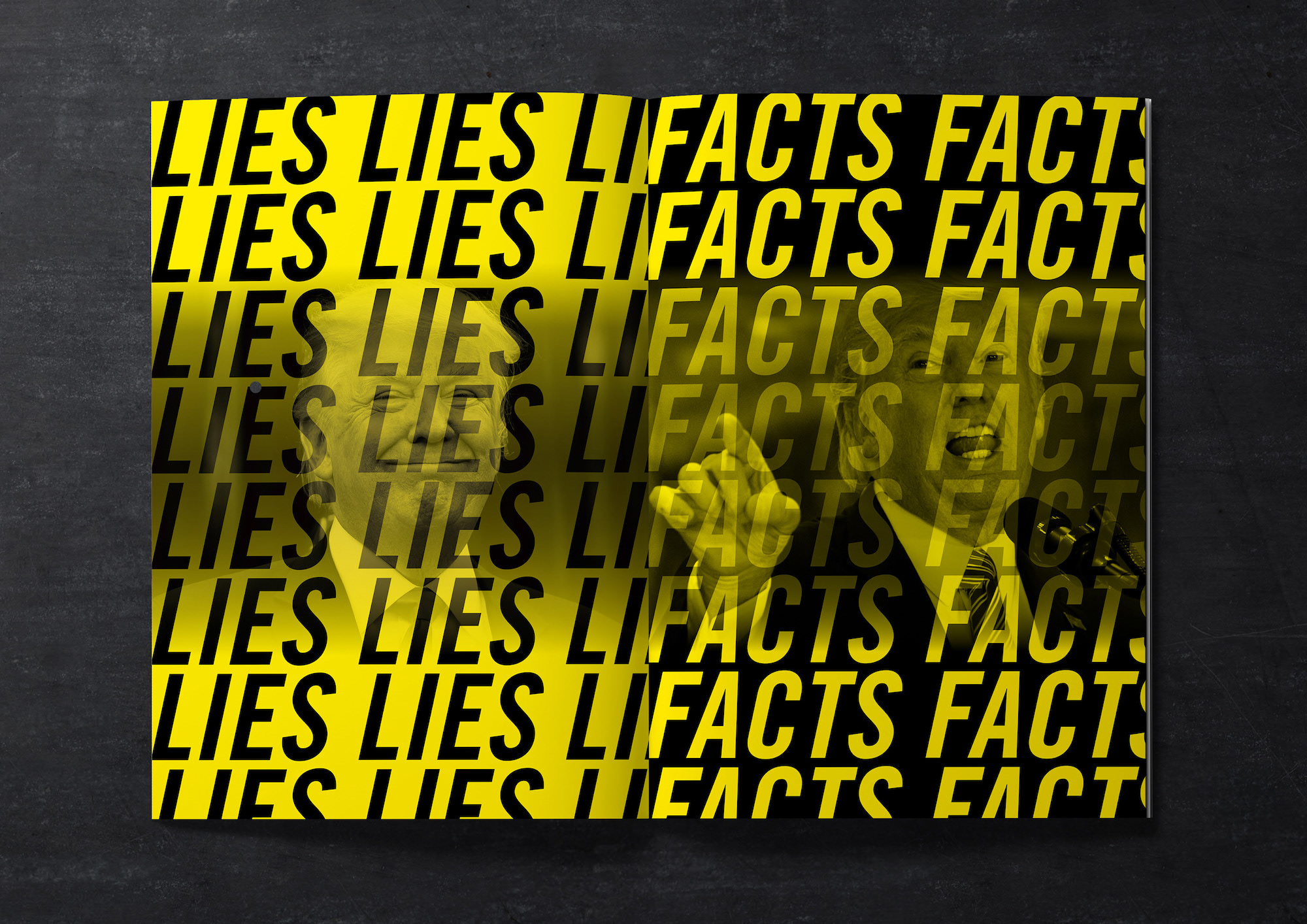

“Twitter feeds of major news outlets are increasingly disputing Trump’s misinformation.” That’s the finding of a new study from progressive nonprofit Media Matters, which had found earlier this year that major news outlets’ Twitter accounts were amplifying Trump’s false claims about an average of 19 times a day — quoting them without context, for instance. Now, in a second sample of “roughly 2,000 tweets about Trump comments sent by 32 Twitter feeds controlled by major news outlets between July 14 and August 3,” Media Matters notes improvement:

Of the roughly 2,000 tweets we reviewed, 653 tweets (33 percent) referenced a false or misleading statement:

— 50% of the time, the outlets’ Twitter accounts disputed the misinformation. This is an improvement from our first study, when they did so only 35 percent of the time.

— Outlets amplified false or misleading Trump claims without disputing them 325 times over the three weeks of the new study.

— On average, outlets amplified false or misleading claims without disputing them 15 times a day — a decline of 21 percent from our first study, when they promoted Trump misinformation an average of 19 times per day.

— 22 of the 32 news outlet Twitter feeds we reviewed improved at disputing Trump’s misinformation in tweets compared to the first study.

— The extent to which outlets’ Twitter feeds passively spread Trump’s misinformation depended on where Trump made his comments. For example: 60 percent of Trump’s falsehoods were disputed when the misinformation came during an interview. 41 percent of Trump’s falsehoods were disputed when the misinformation came during a press gaggle.
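The figures quoted above can be sanity-checked with a little arithmetic. The Python sketch below is only an illustration, not Media Matters' methodology: it assumes "roughly 2,000" means exactly 2,000, that the sample window of July 14 through August 3 falls in 2019 and counts both end dates, and all constant and function names are made up for this example.

```python
from datetime import date

# Figures as quoted from the Media Matters study above.
TOTAL_TWEETS = 2000        # "roughly 2,000"; treated as exact here (assumption)
MISINFO_TWEETS = 653       # tweets referencing a false or misleading statement
UNDISPUTED_TWEETS = 325    # amplified without being disputed
FIRST_STUDY_PER_DAY = 19   # daily average reported for the first study
SECOND_STUDY_PER_DAY = 15  # daily average reported for the second study


def study_days(start: date, end: date) -> int:
    """Number of days in the sample window, counting both endpoints."""
    return (end - start).days + 1


def percent(part: float, whole: float) -> float:
    """Express part as a percentage of whole."""
    return 100.0 * part / whole


# The year 2019 is an assumption; the day count is the same in any year.
days = study_days(date(2019, 7, 14), date(2019, 8, 3))
misinfo_share = percent(MISINFO_TWEETS, TOTAL_TWEETS)
disputed_share = percent(MISINFO_TWEETS - UNDISPUTED_TWEETS, MISINFO_TWEETS)
undisputed_per_day = UNDISPUTED_TWEETS / days
decline = percent(FIRST_STUDY_PER_DAY - SECOND_STUDY_PER_DAY, FIRST_STUDY_PER_DAY)

print(f"days in sample:            {days}")
print(f"share with misinformation: {misinfo_share:.1f}%")
print(f"share disputed:            {disputed_share:.1f}%")
print(f"undisputed per day:        {undisputed_per_day:.1f}")
print(f"decline vs. first study:   {decline:.1f}%")
```

Under these assumptions, the rounded results line up with the reported 33 percent, 50 percent, 15 per day, and 21 percent decline.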

The Hill (recent employer of John Solomon, and owned by Trump friend Jimmy Finkelstein) remained the worst offender at passing along Trump’s misinformation, and it has actually gotten worse:

@TheHill produced the most passive misinformation of any feed we reviewed, accounting for nearly half (48%) of the total tweets that pushed Trump’s misinformation without disputing it during this study, up from 43% of the total in the first study…

The feed is the biggest source of passive misinformation we reviewed because it generates many more tweets about Trump’s comments than any other outlet — eight times the total of the next most-prolific feed. It frequently tweets Trump quotes without context, and often resends the same tweet over and over again, strengthening the misinformation through repetition.

Overall, “as outlets are still collectively amplifying Trump’s falsehoods half the time, there is still substantial room for additional gains,” Media Matters notes.

“Throwing good information after polluted information isn’t likely to mitigate its toxicity.” In a CJR piece this week, Whitney Phillips, an assistant professor at Syracuse University, writes about the “relative powerlessness of facts” to change people’s minds or behavior:

We reach for facts as our antidote to misinformation, false and misleading stories that are inadvertently spread; or disinformation, false and misleading stories that are deliberately spread; or malinformation, true stories that are spread in order to slander and harm. But the problem plaguing digital media is larger than any of these individually; it’s that the categories overlap, obscuring who shares false information knowingly and who shares it thinking it’s true. With so much whizzing by so quickly, good and bad actors—and good and bad information—become hopelessly jumbled….

Just as throwing good money after bad isn’t likely to save a failing business, throwing good information after polluted information isn’t likely to mitigate its toxicity. When journalists center their stories on apostles of disinformation rather than the downstream effects of their lies; when they focus on individual toxic dump sites rather than the socio-technological conditions that allow the pollution to fester; when they claim an objective view from nowhere rather than consider the effects of their own amplification, they — along with any citizen on social media — can be every bit as damaging as people who actively seek to clog the landscape with filth.

Phillips’ book with communication scholar Ryan Milner, You Are Here: A Field Guide for Navigating Polluted Information, will be published by MIT Press next year. Phillips and Milner’s previous book was The Ambivalent Internet: Mischief, Oddity, and Antagonism Online.

Phillips’ piece is part of a broader CJR issue on disinformation. In another article, Emily Bell notes that in the U.S. and U.K., fact-checking is starting to move outside of newsrooms:

Outside newsrooms, money is pouring in to set up new types of organizations to combat misinformation. There is now a sector of fact-checking philanthropy, fueled by Google, Facebook, and nonprofit foundations. As a result, the Duke count noted, last year forty-one out of forty-seven fact-checking organizations were part of, or affiliated with, a media company; this year, the figure is thirty-nine out of sixty. In other words, the number of fact-checking organizations is growing, but their association with traditional journalism outlets is weakening.

That’s already the case in many other parts of the world, Alexios Mantzarlis mentions.

I have a study pending on what fact-checkers cover (w/ @gravesmatter). So while I understand what @emilybell is saying, I also find it ignores that outside the US and UK much, if not most, fact-checking emerged *outside* of journalism because the press wasn't doing it.

— Alexios (@Mantzarlis) December 4, 2019

A disinformation campaign derails a polio vaccination campaign in Pakistan. The Digital Forensic Research Lab reports on how the Pakistani government canceled its annual polio vaccination drive this year after “social media users on Facebook and Twitter began sharing videos that allegedly depicted children fainting and vomiting after vaccination. Other users posted misleading messages, reporting that hundreds of children had died after receiving their polio drops.” The stories were then picked up by Pakistani news outlets and political parties.

“Da Olas Ghag” (“The voice of the people”), a Facebook page belonging to a regional media outlet, uploaded a series of videos on April 22 and 23 making similar unverified claims [that children had died after receiving the polio vaccine]. The misleading content amplified by the page gained significant traction, with videos titled, “3 children die of Polio in Peshawar,” “Four children from the same house were killed due to polio drops,” and “Who is responsible for Polio vaccine infected children?” all garnering tens of thousands — if not hundreds of thousands — of views.

Another popular video uploaded on the page allegedly depicted a local father who had lost four children after allegedly allowing them to be vaccinated. The video received over 856,000 views, 4,500 likes, and 29,859 shares at the time of analysis. The DFRLab was not able to confirm the identity of the individual and location of the video; a number of media outlets, however, including The Washington Post, reported that all children admitted to the hospital were found to be healthy and released shortly after…

As this crisis evolved, Twitter, Facebook, and YouTube worked with the Pakistani government to remove over 174 anti-vaccination links across their platforms. Additionally, Facebook launched an in-product education tool available in Urdu that provides accredited information on vaccines. In spite of these efforts, the modest resources with which this anti-vaccination campaign was carried out highlighted the vulnerability of countries facing economic deprivation, unequal access to education, and societal opposition to immunization drives on the basis of cultural and religious conviction to similar tactics.

How much progress has Facebook really made in fighting fake news? In Medium’s OneZero, Will Oremus takes a look at what has — and hasn’t — changed since 2016.

The discouraging reality is that Facebook’s fact-checking efforts, however sincere, appear to be overmatched by the dynamics of its platform. To make the News Feed a less misleading information source would require far more than belated debunkings and warning labels. It would require altering the basic structure of a network designed to rapidly disseminate the posts that generate the greatest quantity of quick-twitch reactions. It would require differentiating between more and less reliable information sources — something Facebook has attempted in only the most halfhearted ways, and upon which Zuckerberg recently indicated he has little appetite to expand.

In some ways, Facebook is ahead of other major social platforms when it comes to fact-checking user-posted content on a large scale. It now has some 55 fact-checking partners working in 45 languages, and it continues to develop new tools to detect posts that might contain misinformation. Yet the progress the platform has made appears to be reaching its limits under a CEO who sees his platform as a bulwark of free speech more than of human rights, democracy, or truth. Last week, Facebook’s only Dutch fact-checking partner quit the program in protest of the company’s refusal to fact-check politicians.

Here’s more on that Dutch fact-checking partner, by the way. “What is the point of fighting fake news if you are not allowed to tackle politicians?”