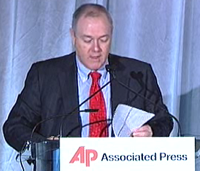

Remember? Two months ago, Associated Press chairman Dean Singleton said his organization would take a firm stand against unlicensed use of its content and that of its members. “We are mad as hell,” he declared at the AP’s annual meeting in San Diego, “and we’re not going to take it any more.”

Singleton is a newspaper man. His first reporting gig came as a teenager in Graham, Texas, and now he’s in charge of MediaNews Group, the nation’s fourth-largest newspaper company. So, of course, he knew that channeling Howard Beale was certain to find its way into every article and blog post about the speech. That’s why he said it, and that’s how most people learned of the AP’s supposed crackdown on piracy of its work. (Watch the meeting here, or listen to the magic words below.)

[audio:http://www.niemanlab.org/audio/madashell.mp3]

Here’s what followed: Google was said to be a major target of the speech, even though Singleton didn’t mention the company or even the phrase “search engine.” News aggregators were also assumed to be in the crosshairs, although The Huffington Post, like Google, is a paying customer of the AP. Everyone was very angry, and nuance seemed to be lost amid all the saber-rattling. Since then, the AP has done little to clarify whom, exactly, it’s mad at or how it plans to address that anger.

Shift the tale to New York, three weeks later, at the headquarters of Thomson Reuters, where a slew of major news organizations — but not the AP — gathered to consider a new tack in combatting online piracy. Reuters and Politico were already on board. So was every member of the Magazine Publishers Association.

They proposed banding together as the Fair Syndication Consortium with an innovative approach to combatting the true tapeworms of the online news business: not Google, certainly, or Arianna Huffington, but wholesale copiers of content. The consortium is targeted, in part, at spam blogs — or splogs — that reprint news articles and posts in their entirety alongside cheap advertising. Splogs are typically automated, and the only human being involved is the one who gets a check at the end of the month.

What the consortium seeks to do is turn tapeworms into fungus. They don’t want to shut down splogs and their ilk, which would be a largely Sisyphean task of enormous cost. Instead, the consortium is negotiating with the networks that serve ads against pirated content for a substantial share of that revenue.

Abusive sites, under this arrangement, could operate with legal cover and might proliferate as a result, so publishers would have to get used to the idea of their content appearing across the web, on servers they don’t control, amid page designs that only exist to sell cheap advertising. But it’s really just a cruder — or, you might say, more organic — form of traditional syndication. I hesitate to overhype this, but the concept, if not this particular application of it, has the potential to fundamentally shift how publishers conceive of distributing their content on the web.

There’s a company behind the consortium: Attributor, which crawls the Internet in search of copied content for a host of media companies (including, incidentally, the AP). At a meeting of newspaper executives in Chicago last week, Attributor CEO Jim Pitkow estimated that publishers are losing a total of $250 million annually to splogs and other sites that copy their content. (In a phone interview yesterday, he told me that estimate was based on a “conservative” CPM of roughly 25 cents, though for reasons we could discuss in the comments, I’m not convinced of their math.)

This is where Google, et al. enter the equation — in the role of partner rather than adversary. Attributor says that 94% of ads on splogs and other sites that pirate content are served by DoubleClick, Yahoo, or Google AdSense. Since Google owns DoubleClick, the consortium’s negotiations can focus on two companies with a strong interest in appearing supportive of intellectual property. But I should really stop explaining this because we obtained the slides that Pitkow presented in Chicago, and they make the consortium’s case pretty clear:

Nearly everyone I’ve spoken to with knowledge of the Chicago meeting, where newspaper companies were pitched on a variety of online business plans, says that Pitkow’s presentation of the Fair Syndication Consortium was by far the most popular. Attributor, which will be taking an undisclosed cut of the revenue, won’t announce who has signed onto the consortium for another few weeks, but expect it to include lots of major newspaper companies and blog networks. One reason the consortium has been so well-received is that publishers are taking on little risk.

“The worst-case scenario is that some publishers don’t make much money from this,” Pitkow told me. The best case? That not only do Google and Yahoo cooperate, opening up a new revenue stream for content producers, but publishers begin actively seeking wide distribution of their work with no compensation except a share of advertising.

The consortium’s negotiations with ad networks are ongoing, though Pitkow, of course, said they’ve been “encouraging.” I expect they’ll succeed, but a sticking point could be the share of revenue, which might vary from publisher to publisher. Pitkow said it could range from 25 to 75 percent, but it may not be his call.

The Associated Press, meanwhile, is not joining the consortium. (Pitkow wouldn’t comment.) Instead, it seems to be striking out on its own with a system that, at least in its outlines, sounds awfully similar to what I’ve described here. The only problem is that, in the meantime, many of the AP’s members appear to have defected to the Fair Syndication Consortium.

And if that’s the case, then Singleton is surely mad as hell.