A common way to tackle misinformation, especially health misinformation, is to ignore it. And this is a strategy often employed by authority figures — sidestep the misinformation, don’t give it airtime, and it might just go away.

But the results of a new study suggest that this method of combating health misinformation is less effective than addressing and then debunking misinformation head-on. The results were published November 10 in BMJ Global Health.

To conduct the study, researchers at the Karolinska Institute in Sweden recruited more than 730 volunteers in Sierra Leone and tested them on their knowledge of “typhoid-malaria.” Typhoid and malaria infections can co-occur, but rarely; a common misconception in the African nation is that the two are a joint condition with the same underlying cause. (In actuality, typhoid and malaria are very different: Typhoid is caused by bacteria and spread through unsanitary water, whereas malaria is caused by a parasite that is spread by mosquitoes.)

“The diagnostics for typhoid are really poor,” said Maike Winters, a research coordinator at the Karolinska Institute’s Department of Global Health and first author of the new study. “You actually have a higher chance of getting a positive typhoid test when you have malaria, even though when you do a blood culture, it will show negative,” she said. But the tools to do bloodwork are not available in most places, which keeps misinformation about “typhoid-malaria” unchecked, Winters added.

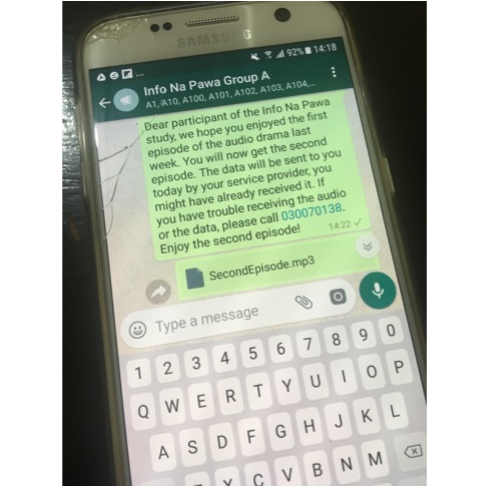

To run the experiment, the researchers relied on the social messaging tool WhatsApp.

Besides being scalable beyond this experiment, “[WhatsApp] is an all encompassing social media platform in many African countries,” Winters said. “And we figured it would be a great way to spread the message because that way, you could also check if people actually have seen the message” (because WhatsApp shows two blue check marks if a message has been read).

One group of volunteers was assigned to the intervention that would acknowledge and then debunk misinformation about “typhoid-malaria,” while another group was presented only with facts about “typhoid-malaria.” At the start of the experiment, roughly half the study participants incorrectly believed that typhoid was caused by mosquitoes, and nearly 60% falsely believed that typhoid and malaria always co-occur.

A screenshot of what the study participants received each week in order to listen to the audio dramas about typhoid-malaria.

The researchers delivered information about the diseases through audio dramas, which are a popular way of communicating information in Sierra Leone. A well-known actors’ group in the city of Freetown, known as the Freetong Players, was recruited to read narrative scripts about patients infected with typhoid and malaria. Some scripts included plot thickeners such as patients needing to get better in order to attend a family member’s wedding. Study volunteers listened to four episodes of this drama over the course of four weeks (one episode per week), and were tested on their knowledge of “typhoid-malaria” and retention of misinformation at the start and end of the four weeks.

In the group that included confronting misinformation, the audio drama plot included a nurse or other hospital worker overhearing the patient family’s false beliefs about “typhoid-malaria,” confronting them about it and then correcting the information. In this example, a male nurse says to a patient’s family that the patient has “typhoid-malaria,” and upon hearing this, the head nurse comes in and swiftly says, “There is nothing like typhoid-malaria,” adding, “Typhoid is typhoid and malaria is malaria” and also saying, “These are two different diseases. They might have similar symptoms but they are two different diseases.”

In the other group, the plot included families being presented only with facts about typhoid, with no mention of malaria even when a patient’s family member suspects a second disease. The health workers are laser-focused on tackling only the symptoms at hand, which they say are consistent with typhoid, and there is no discussion of other possibilities, including “typhoid-malaria.”

There was also a third control group, which was presented information related to neither typhoid nor malaria in order to keep from influencing their beliefs.

When participants were quizzed about their knowledge of typhoid and malaria after the four-week experiment, both types of intervention had significantly reduced the misinformation about the diseases compared with the control group, which heard only information about breastfeeding.

At the same time, confronting misinformation head-on and then debunking it was slightly more effective than ignoring it, both when it came to knowing that typhoid isn’t caused by mosquitoes and when it came to understanding that typhoid and malaria don’t always co-occur.

This was surprising, Winters said, “especially if you look at the way authorities communicate during the pandemic.” Health and other authorities tend to stick to the facts and sidestep any misinformation.

Importantly, the researchers also didn’t see a backfire effect: Exposure to misinformation in the group that specifically tackled it didn’t increase people’s belief in that misinformation.

Why did this approach seem to work? Winters thinks there are three main components to explain this success. Two of them involve the use of trusted sources, both in the form of the well-known actors’ group and also in the inclusion of health worker characters in the dramas. The third component is the repetition of the messages, in the form of the episodic format of the dramas. Hearing accurate information repeated helped reinforce the ideas, Winters said.

“Don’t be afraid to tackle misinformation head on,” Winters asserted. “I think it’s important that people speak out, and you can repeat [misinformation] and then debunk it.”

Moreover, debunking misinformation takes work, in the form of identifying trusted sources and knowing a community of people well. “Trusted sources who are often in the media are not always the trusted sources for a given set of people,” Winters said. “So I think we have to really do our research to figure out why people believe in something, who they trust and how we can then reach people. It requires quite a bit of effort.”