The growing stream of reporting on and data about fake news, misinformation, partisan content, and news literacy is hard to keep up with. This weekly roundup offers the highlights of what you might have missed.

Fake news writers get LONG obituaries now. Last week, disinfo bros; this week, Paul Horner, who appears to have OD’d on prescription drugs. Here’s The New York Times, The Arizona Republic (double byline, multiple sections including a close look at his charity to hand out socks to homeless people), NPR, CBS, NBC, BuzzFeed News, Gizmodo. The Washington Post has a slightly different take: “Who do you believe when a famous Internet hoaxer is said to be dead?”

Why is “was a jackass online” justification for so many extensive obituaries? Horner’s quote to The Washington Post last November, “I think Trump is in the White House because of me,” was repeated in each obituary I found, despite the fact that there is zero evidence to back that claim (and it would be impossible to prove anyway). Each is illustrated with the same CNN screenshot.

David Uberti writes at Splinter:

The undercurrent flowing through such stories — you can also read this between the lines of coverage of Milo, Breitbart, and the like — is that these figures are messengers of the new-media counterculture. Edgy, even.

My counterargument: They are bad people doing bad things, and they represent a cancer within the manic-depressive media environment we all inhabit. If the journalists who write about these people can’t make their own moral judgments about how terrible they are for all the rest of us, we’re in even more trouble than we think.

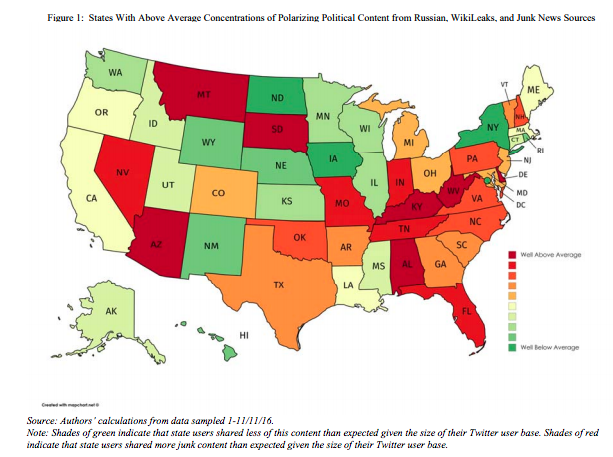

There was more fake news in swing states (and a lot of fake news on Twitter). Researchers from Oxford’s Computational Propaganda Project looked at the location data of political news being shared on Twitter in the 10-day period around the 2016 election. They started out looking at 22,117,221 “tweets collected between November 1-11, 2016, that contained hashtags related to politics and the election in the U.S.” (The Computational Propaganda Project’s previous work has also looked at tweets that include hashtags.) About a third of those contained enough location information to determine the state the user was in. “Many of the swing states getting highly concentrated doses of polarizing content were also among those with large numbers of votes in the Electoral College,” they write.

Of the tweets being shared: “20% of all the links being shared with election-related hashtags came from professional news organizations.”

The number of links to professionally produced content is less than the number of links to polarizing and conspiratorial junk news. In other words, the number of links to Russian news stories, unverified or irrelevant links to WikiLeaks pages, or junk news was greater than the number of links to professional researched and published news. Indeed, the proportion of misinformation was twice that of the content from experts and the candidates themselves.

Second, a worryingly large proportion of all the successfully catalogued content provides links to polarizing content from Russian, WikiLeaks, and junk news sources. This content uses divisive and inflammatory rhetoric, and presents faulty reasoning or misleading information to manipulate the reader’s understanding of public issues and feed conspiracy theories. Thus, when links to Russian content and unverified WikiLeaks stories are added to the volume of junk news, fully 32% of all the successfully catalogued political content was polarizing, conspiracy driven, and of an untrustworthy provenance.

The New York Times’ Daisuke Wakabayashi and Scott Shane reported this week that Twitter will testify before Congress about its role in the election. The article includes information from the Alliance for Securing Democracy, which is housed at the German Marshall Fund of the United States in D.C. The Times notes that the Alliance’s researchers:

…have been publicly tracking 600 Twitter accounts — human users and suspected bots alike — they have linked to Russian influence operations. Those were the accounts pushing the opposing messages on the N.F.L. and the national anthem.

Of 80 news stories promoted last week by those accounts, more than 25 percent ‘had a primary theme of anti-Americanism,’ the researchers found. About 15 percent were critical of Hillary Clinton, falsely accusing her of funding left-wing antifa — short for anti-fascist — protesters, tying her to the lethal terrorist attack in Benghazi, Libya, in 2012 and discussing her daughter Chelsea’s use of Twitter. Eleven percent focused on wiretapping in the federal investigation into Paul Manafort, President Trump’s former campaign chairman, with most of them treating the news as a vindication for President Trump’s earlier wiretapping claims.

“What we see over and over again is that a lot of the messaging isn’t about politics, a specific politician, or political parties,” Laura Rosenberger, the director of the Alliance for Securing Democracy, told the Times. “It’s about creating societal division, identifying divisive issues and fanning the flames.”

Mark Zuckerberg and “both sides.” President Barack Obama spoke with Facebook CEO Mark Zuckerberg a couple of months before Trump’s inauguration and “made a personal appeal to Zuckerberg to take the threat of fake news and political disinformation seriously,” The Washington Post’s Adam Entous, Elizabeth Dwoskin, and Craig Timberg reported. On Wednesday, Zuckerberg wrote in a Facebook post: “After the election, I made a comment that I thought the idea misinformation on Facebook changed the outcome of the election was a crazy idea. Calling that crazy was dismissive and I regret it. This is too important an issue to be dismissive.”

This is very "I'm sorry if YOU were offended…" https://t.co/3bq3Vea9JE pic.twitter.com/WjNcPYwPqY

— Katie Notopoulos (@katienotopoulos) September 27, 2017

But he also framed it as an issue with two sides: “Trump says Facebook is against him. Liberals say we helped Trump. Both sides are upset about ideas and content they don’t like. That’s what running a platform for all ideas looks like.”

Umm, this is starting to sound a little disinfobro-ish. (“We’re all now questioning reality as it’s being handed down.”)

1.If you don't like viral misinfo, fake news & Russian ads—you're just uncomfortable with "ideas". 2.This is what getting played looks like. pic.twitter.com/nrOLJ1krvG

— Zeynep Tufekci (@zeynep) September 28, 2017

Read her whole thread, but…

BS "both sides" arguments and getting played and caving when someone flicks a finger: congrats. FB arrives as a traditional media company.

— Zeynep Tufekci (@zeynep) September 28, 2017